March 14, 2025

Are Frontier AI Reasoning Models Like Genius-Level Toddlers?


Why Should You Care?

Frontier AI reasoning models excel in structured problem-solving and multi-domain knowledge synthesis but struggle with uncertainty, ambiguity, and independent judgment. They’re highly user-guided, requiring clear inputs, context, and structured objectives to perform effectively. Businesses should strategically select reasoning models based on their reasoning style, provide structured inputs, and ensure robust validation processes before making AI-driven decisions.

What Did We Test? → We evaluated how well frontier AI reasoning models can judge the context-appropriateness of certain reasoning methods while also assessing their ability to synthesize a variety of reasoning methods to derive a novel reasoning strategy with real-world applicability.

What Did We Find Out? → Frontier AI reasoning models are quite bad at context-sensitive reasoning despite being excellent at multi-domain knowledge synthesis, provided they receive sufficient context, structure, and guidance.

Are There Any Caveats? → Yes, we used GPT-4o for pattern and trend analysis to reveal meaningful insights across the responses that models provided to our second prompt.

Why Does This Matter? → GPT-4o may have identified certain patterns and trends that failed to capture relevant nuances or insights. However, being aware of this possibility, we reviewed and validated GPT-4o’s analysis for relevance and applicability.

If you find our AI experiments useful and interesting, please also consider examining how Lumenova’s responsible AI platform could support your AI risk management process and book a product demo today.

Main Insights

- Reasoning models perform well in structured environments but struggle when faced with uncertainty, ambiguity, or nuanced contextual reasoning. They rely on definitive relationships between inputs and struggle when forced to make judgment calls without clear rules.

- By contrast, when given structured guidance, reasoning models are excellent at synthesizing multi-domain knowledge and applying it to real-world problems. This is particularly valuable for marketing, product development, industry R&D, and creative fields that require cross-disciplinary insights.

- Reasoning models often express high confidence in incorrect responses, failing to recognize gaps in their own reasoning. Even when instructed to assess their own weaknesses, they continue to implicitly trust their outputs, reinforcing the need for human oversight in AI-driven decision-making.

- Reasoning models exhibit distinct reasoning preferences, which could impact use-case suitability. Some models prioritize efficiency, formal logic, and structured reasoning, while others focus on creativity, heuristics, and scenario modeling, or some combination of these factors.

- Businesses should select AI models based on reasoning style and domain-specific performance benchmarks rather than assuming all models perform equally.

- Reasoning models lack independent thought and are highly user-guided—they don’t question ambiguous reward structures or attempt to clarify objectives before acting. They’re also more assistive than autonomous, reinforcing the importance of well-structured user inputs and clear business objectives.

- General-purpose models like ChatGPT and Claude can be highly effective tools for recognizing patterns and trends across AI capability tests that involve different models.

The Business Case

✅ Overconfidence in Wrong Answers Reinforces the Need for Human Oversight

💡 Why it Matters

- AI models can express high confidence in incorrect responses, making them appear more reliable than they really are.

- Even when prompted to assess their own outputs critically, models may fail to identify weaknesses, increasing the risk of false but highly persuasive AI-generated outputs.

- AI-generated insights could lead to misguided investment decisions, legal misinterpretations, or inaccurate medical diagnoses.

- Overreliance on AI without human oversight could result in legal and ethical liabilities.

✍️ Key Actions

- Monitor AI confidence levels and cross-check outputs against secondary sources.

- Limit AI autonomy in uncertain, high-stakes decision-making.

- Use AI as a decision-support tool rather than a decision-maker.

- Implement AI-human verification systems, requiring human review for AI-generated insights.

- Use models like GPT-4o and Claude 3.5 for AI benchmarking, evaluating model performance trends.

✅ Multi-Domain Knowledge Synthesis Can Drive Business Innovation

💡 Why it Matters

- Reasoning models excel at synthesizing knowledge across multiple domains when provided with structured guidance.

- Multi-domain knowledge synthesis is critical for businesses in marketing, R&D, and product development, where cross-disciplinary insights can accelerate innovation and competitive advantage.

- AI could analyze trends in consumer behavior, technological advancements, and market shifts simultaneously.

✍️ Key Actions

- Use AI for multi-domain innovation.

- Leverage AI for market trend analysis and competitive intelligence.

- Train AI models with industry-specific knowledge.

- Use multiple AI models in tandem, leveraging formal reasoning AI for compliance and heuristic AI for creative problem-solving.

✅ Model Selection Directly Impacts Business Performance and Risk

💡 Why it Matters

- Not all reasoning models function similarly, and assuming they do can lead to costly inefficiencies, poor decision-making, and reputational risks.

- Some models are great at structured logic and efficiency, while others thrive in creative, heuristic-based problem-solving.

- Choosing the wrong model for a task could lead to inaccurate predictions, weak strategic recommendations, or inefficient resource allocation.

✍️ Key Actions

- Use reasoning models strategically, for example, structured models for compliance and operations, and heuristic models for innovation and strategy.

- Regularly re-evaluate AI model performance.

- Benchmark different AI models based on domain-specific performance.

Executive Summary

Experiment Setup

In this experiment, we evaluated four frontier AI reasoning models, assessing their ability to recognize, apply, and innovate upon established reasoning methods through a two-part sequential task delivered as two prompts:

- Context-Sensitive Reasoning (Prompt 1): Models matched 16 statements with their most appropriate reasoning methods from a set of 25 options, providing confidence scores, justifications, reflections on uncertainties, and real-world examples.

- Novel Reasoning (Prompt 2): Models developed a novel reasoning method by synthesizing at least three existing methods, providing examples, justifications for their choices, critical reflections, and optional bonus points for novelty and generalizability.

To streamline insight generation from models’ answers to prompt 2, we developed a third prompt in which we instructed GPT-4o to perform a pattern and trend analysis across the models’ responses to prompt 2.

Prompt 1 – Hypothesis: Frontier AI reasoning models will successfully judge the context-appropriateness of certain reasoning methods; however, they will struggle in areas where similarity judgments are necessary.

Prompt 2 – Hypothesis: Frontier AI reasoning models will be good but not excellent at synthesizing diverse reasoning methods to develop novel reasoning strategies that can be used for dynamic, cross-domain, real-world problem-solving.

Models Tested: OpenAI’s o1 and o3-mini-high, xAI’s Grok 3, and Anthropic’s Claude 3.7 Sonnet.

Prompt Descriptions

Context-Sensitive Reasoning (Prompt 1): This task requires matching 16 reasoning methods from a set of 25 (Group 1) with 16 illustrative statements (Group 2) based on the best possible fit. Each reasoning method and statement can only be used once, leaving 9 reasoning methods unused. Models must also assign a confidence score to each statement-reasoning method pair, justify their logic, express any doubts or blind spots, and provide two real-world examples demonstrating each paired reasoning method in action.
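The matching constraints described above (16 statements, 25 methods, each used at most once, 9 methods left over) can be checked mechanically. The sketch below is a minimal illustration and not necessarily how responses were checked in this experiment. The function name `validate_pairings` and the sample pairing are hypothetical; the method names and their order come from Group 1 of prompt 1.

```python
from typing import Dict, List

# The 25 reasoning methods from Group 1, in the order they appear in prompt 1.
GROUP_ONE: List[str] = [
    "Computational Reasoning", "Deductive Reasoning", "Heuristic Reasoning",
    "Probabilistic Reasoning", "Spatial Reasoning", "Lateral Thinking",
    "Statistical Reasoning", "Analogical Reasoning", "Critical Reasoning",
    "Inductive Reasoning", "Temporal Reasoning", "Fuzzy Logic",
    "Causal Reasoning", "Counterfactual Reasoning", "Means-End Analysis",
    "Mathematical Reasoning", "Moral Reasoning", "Narrative Reasoning",
    "Systems Reasoning", "Non-monotonic Reasoning", "Bayesian Reasoning",
    "Intuitive Reasoning", "Abductive Reasoning", "Dialectical Reasoning",
    "Associative Reasoning",
]
NUM_STATEMENTS = 16


def validate_pairings(pairings: Dict[int, str]) -> List[str]:
    """Check the one-to-one constraints and return the unused methods.

    `pairings` maps a statement number (1-16) to a reasoning method name.
    Raises ValueError if a constraint from the task description is violated.
    """
    expected = set(range(1, NUM_STATEMENTS + 1))
    if set(pairings) != expected:
        raise ValueError("every statement 1-16 must be paired exactly once")

    methods = list(pairings.values())
    unknown = [m for m in methods if m not in GROUP_ONE]
    if unknown:
        raise ValueError(f"methods not in Group 1: {unknown}")
    if len(set(methods)) != len(methods):
        raise ValueError("a reasoning method was used more than once")

    # Whatever was not used is left over; a valid response leaves exactly 9.
    return [m for m in GROUP_ONE if m not in methods]


# Hypothetical pairing used only to exercise the checks (not a model's answer):
# statement i is paired with the i-th method in Group 1.
sample = {i + 1: GROUP_ONE[i] for i in range(NUM_STATEMENTS)}
leftover = validate_pairings(sample)
print(len(leftover), "methods left unused")
```

A check like this only confirms that a response respects the task's structure; whether each pairing is correct still has to be judged against the answer key.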

Novel Reasoning (Prompt 2): This task requires creating a novel reasoning method by synthesizing at least three reasoning methods from the previously provided list (Group 1). To successfully complete this task, the model must provide at least three examples illustrating the new reasoning method in action, justify why certain reasoning methods were synthesized, and critically evaluate the new method. Bonus points are awarded if the model synthesizes more than three reasoning methods, creates a generalizable, non-intuitive yet easily comprehensible reasoning method, and explains why it “chose” to pursue the optional challenge.

Pattern/Trend Analysis for Prompt 2 Response (Prompt 3): This is a simple prompt in which the model is instructed to perform a pattern and trend analysis across the responses that the tested models provided to prompt 2.

Method

The majority of our method is illustrated within prompts 1 and 2; however, we note that:

- We set a Prompt 1 passing threshold of 13/16, or roughly 81%. We chose this threshold because 75% (12/16) typically symbolizes average human performance, whereas anything above 75% suggests above-average performance; 13/16 is the lowest score that exceeds that mark.

- Prompts 1 and 2 were administered sequentially with each model tested.

- Models’ responses to prompt 2 were copy-pasted directly into prompt 3 for pattern and trend analysis with GPT-4o.

- We chose GPT-4o for pattern and trend analysis because of its speed and strength in this domain. We also wanted to avoid using any models we tested to prevent confounding variables and circumvent advanced reasoning models’ tendencies to either overcomplicate or oversimplify.

- We ensured that GPT-4o’s pattern and trend analysis was carefully reviewed and validated for detail, nuance, and relevance. We didn’t include GPT-4o’s analysis because we’re not interested in scrutinizing the model’s performance here; we simply leveraged it as a tool to streamline our analysis, refining some of its insights for precision and discarding others as redundant.

- We didn’t establish any assessment criteria for models’ responses to prompt 2 because we see more value in discussing their responses than evaluating them. Given prompt 2’s semi-structured, open-ended design, it’s also unclear whether adequate evaluation criteria even exist.

- Models’ responses to prompts 1 and 2 were copy-pasted in their exact structure, notwithstanding some minor reformatting changes made in the interest of readability.
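The passing threshold noted above follows from simple arithmetic. The sketch below reproduces it under the assumption that "passing" means the lowest whole-number score strictly above the 75% baseline; the variable names are illustrative.

```python
import math

TOTAL_STATEMENTS = 16
AVERAGE_BASELINE = 0.75  # the "average human performance" mark cited above

# Lowest whole-number score that is strictly above the baseline (12/16 = 75%).
passing_score = math.floor(AVERAGE_BASELINE * TOTAL_STATEMENTS) + 1
passing_rate = passing_score / TOTAL_STATEMENTS

print(f"{passing_score}/{TOTAL_STATEMENTS} = {passing_rate:.2%}")
```

The exact rate is 81.25%, which the write-up rounds to 81%.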

Key Findings

Prompt 1 – Key Takeaways:

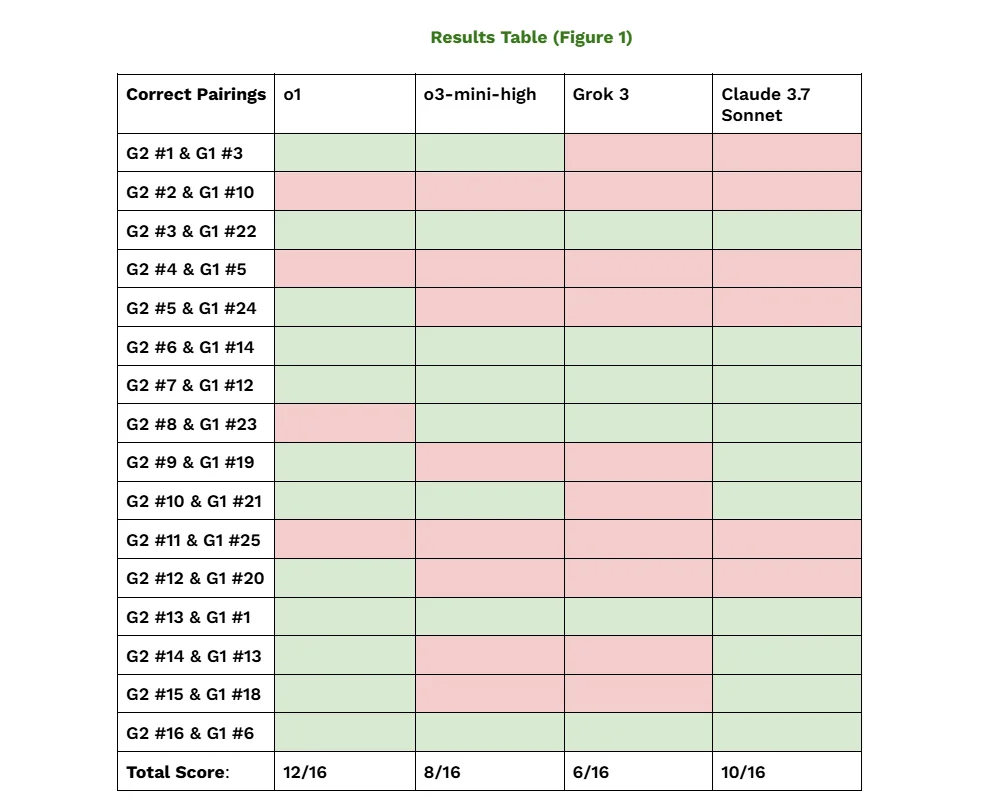

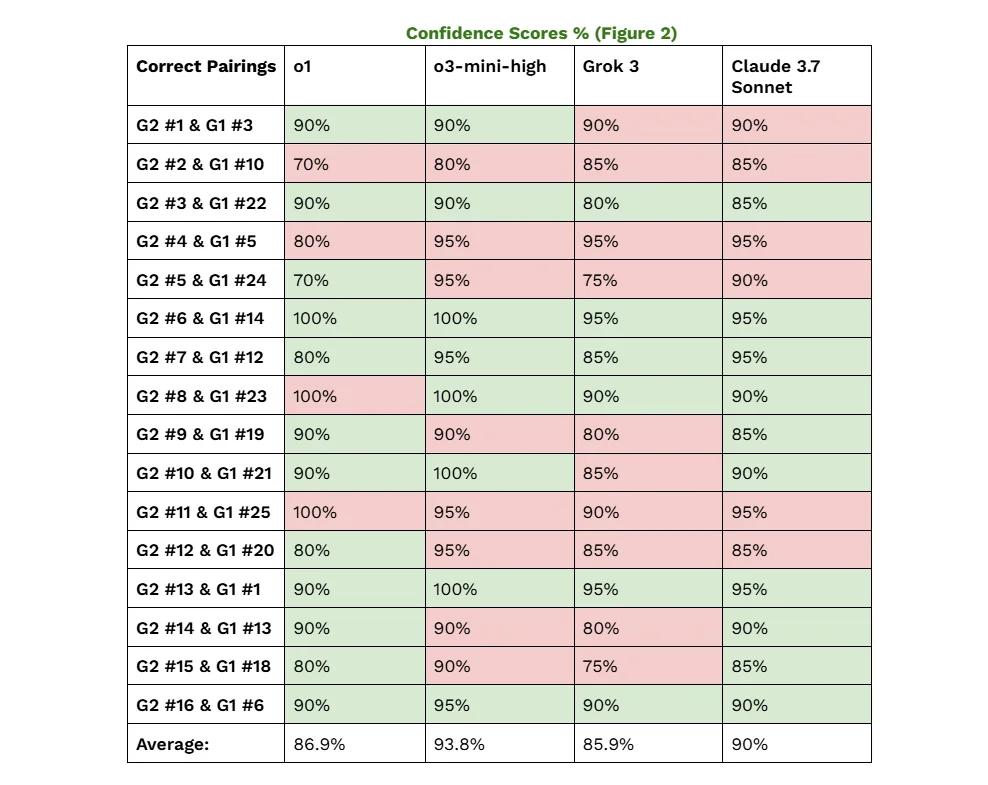

- While o1 and Claude 3.7 Sonnet came close, scoring 12/16 and 10/16, respectively, none of the models tested were able to reach our “passing threshold” of 13/16 or 81%.

- o3-mini-high and Grok 3 performed quite poorly, scoring 8/16 and 6/16, respectively.

- All models followed instructions well, providing confidence scores, justifying pairings, expressing doubts, and including examples.

- All models made the same statement-reasoning method pairing mistakes across statements 2, 4, and 11, conflating:

  - Induction with deduction (statement 2)

  - Spatial reasoning with means-end analysis (statement 4)

  - Associative reasoning with analogical reasoning (statement 11)

- All models other than o1 also struggled with statements 5 and 12, conflating:

  - Dialectical reasoning with moral reasoning (statement 5)

  - Non-monotonic reasoning with probabilistic reasoning (statement 12)

- All models, except for Claude 3.7 Sonnet, implicitly structured their responses to follow the numerical statement order in our prompt. Claude’s failure to do so made the response much more difficult to parse.

- Time spent “thinking” varied dramatically across the models tested, with Grok 3 on the low end (36 seconds) and o3-mini-high on the high end (2 min 31 seconds).

- All models generally expressed high confidence in each statement-reasoning method pairing, including incorrect pairings (with a few exceptions).
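For a quick side-by-side view, the reported Prompt 1 scores can be compared against the 13/16 threshold. This is a minimal sketch; the `summarize` helper is hypothetical, while the scores and threshold are the ones reported above.

```python
from typing import Dict, List, Tuple

TOTAL = 16
PASSING_SCORE = 13  # threshold stated in the Method section

# Prompt 1 scores as reported in the key takeaways.
scores: Dict[str, int] = {
    "o1": 12,
    "Claude 3.7 Sonnet": 10,
    "o3-mini-high": 8,
    "Grok 3": 6,
}


def summarize(results: Dict[str, int]) -> List[Tuple[str, int, float, bool, int]]:
    """Return (model, correct, accuracy, passed, points short) sorted by score."""
    rows = []
    for model, correct in sorted(results.items(), key=lambda kv: -kv[1]):
        accuracy = correct / TOTAL
        passed = correct >= PASSING_SCORE
        shortfall = max(PASSING_SCORE - correct, 0)
        rows.append((model, correct, accuracy, passed, shortfall))
    return rows


for model, correct, accuracy, passed, shortfall in summarize(scores):
    status = "pass" if passed else f"short by {shortfall}"
    print(f"{model:<18} {correct}/{TOTAL} ({accuracy:.0%}) {status}")
```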

Prompt 2 – Key Takeaways:

- All models followed instructions with perfect precision and demonstrated an impressive ability to construct, justify, and break down non-intuitive but comprehensible synthesized reasoning strategies.

- All models “chose” to pursue the “bonus” component of the prompt, which explains why each model synthesized more than three reasoning methods, with Grok 3 being the only model that chose five.

- All models proposed distinctly different synthesized reasoning strategies, revealing disparate reasoning preferences:

  - o1 offers the most dialectical, philosophical approach, striving to balance contradictions through a layered synthesis.

  - o3-mini-high presents a probabilistic, uncertainty-aware approach that prioritizes decision-making under uncertainty.

  - Grok 3 favors computational reasoning and heuristic practicality, revealing a preference toward efficiency.

  - Claude 3.7 Sonnet introduces the most dynamic, scenario-based reasoning method, emphasizing adaptability.

- In terms of the overall structure and scope of their final reasoning strategy, models favored different philosophical underpinnings:

  - o1 and o3-mini-high focus on the intellectual synthesis of multiple reasoning paradigms.

  - Grok 3 and Claude 3.7 Sonnet prioritize practical adaptability in dynamic problem-solving.

- Except for o1, which spent a mere 12 seconds “thinking,” time spent “thinking” for all other models ranged between 42 and 53 seconds.

- While the final synthesized reasoning strategies that each model proposed were distinctly different, there were no “bad” strategies—all strategies were feasible, coherent, understandable, and to our pleasant surprise, immediately useful.

Bottom Lines:

- Consistent with previous experiments, there’s no meaningful correlation between “time spent thinking” and response quality.

- This appears to hold regardless of whether the task is highly structured with strict parameters or less structured and more creatively oriented.

- To the creative point, all models tested demonstrated a strength for creative ideation, exemplified via multi-domain knowledge synthesis and application.

- Consistent with previous experiments, reasoning models struggle to differentiate between similar but distinct concepts (e.g., deduction vs. induction or analogical reasoning vs. associative reasoning).

- Across the error areas we highlighted in prompt 1, models made exactly the same mistakes as one another.

- Reasoning models tend to be highly “confident” in their outputs, especially when they’re wrong.

- While models aren’t great at judging the context-appropriateness of specific reasoning methods, they’re adept at synthesizing diverse reasoning methods to develop novel strategies that can be used for dynamic, cross-domain, real-world problem-solving.

- Interestingly, when provided with an open-ended reasoning task (prompt 2), reasoning models display varied reasoning preferences across reasoning structure, scope, and philosophical underpinnings.

Prompt 2 Pattern & Trend Analysis

- Key weaknesses models referenced included:

  - Cognitive Load: Juggling multiple reasoning methods together could overwhelm practitioners.

  - Integration Complexity: Determining when and how to apply each method requires expertise.

  - Measurement Difficulties: Assessing effectiveness is challenging due to the subjective nature of multi-modal reasoning.

  - Bias Risks: Certain methods (e.g., abductive reasoning) may reinforce existing biases if not applied carefully.

- Systems reasoning and lateral thinking are the most commonly used methods, with 3 out of 4 models (o1, o3-mini-high, and Claude 3.7 Sonnet) synthesizing them in their final reasoning strategy.

- Models emphasized different reasoning approaches within their overall strategy:

  - o1: Balances structured logic with creative/systemic thinking.

  - o3-mini-high: Focuses on probabilistic inference and creative solutions.

  - Grok 3: Merges computational analysis with analogy-based and heuristic decision-making.

  - Claude 3.7 Sonnet: Emphasizes dynamic belief updates within a systems-thinking framework.

- Some models proposed a structured, stepwise strategy while others focused on dynamic models with iterative updates:

  - Structured Approach: o1 & Grok 3 center on process-driven application, showcasing a clear, step-by-step reasoning sequence.

  - Dynamic Approach: o3-mini-high & Claude 3.7 Sonnet focus on flexible and adaptive reasoning, optimizing for uncertain, evolving scenarios.

- Some models favor formal logic while others prefer heuristics:

  - Formal Logic Preference: o1, o3-mini-high, and Grok 3.

  - Heuristics Preference: Grok 3 and Claude 3.7 Sonnet.

- All models invoked distinctly different justifications for their final, synthesized strategy:

  - o1: Focuses on the interplay between conflicting reasoning approaches.

  - o3-mini-high: Justifies choices via uncertainty management and systemic insight.

  - Grok 3: Emphasizes computational scalability, making decisions based on data-driven heuristics.

  - Claude 3.7 Sonnet: Highlights scenario analysis, arguing that counterfactual and abductive reasoning enhance adaptability.

Prompts

Prompt 1 – Context-Sensitive Reasoning

In this prompt, you will find two groups.

Group one contains 25 reasoning methods, none of which are defined.

Group two contains 16 statements, each of which illustrates a reasoning method.

To complete this task, you must match the reasoning methods in group one with the statements that best illustrate them in group two. Once you have designated a statement-reasoning method pair, you may not reuse the reasoning method or statement in any subsequent pairings you make.

Upon completing the task, you will have 16 statement-reasoning method pairs and 9 leftover reasoning methods. There are only 16 correct pairs.

To perform this task successfully, you must also:

- Assign a confidence score to each statement-reasoning method pair you designate.

- Explain the logic behind each pairing you make.

- Express any doubts, concerns, or blindspots for each pairing you make.

- Provide two concise real-world problem-solving examples that showcase each pairing’s reasoning method in action.

Group 1: Reasoning Methods

1. Computational Reasoning

2. Deductive Reasoning

3. Heuristic Reasoning

4. Probabilistic Reasoning

5. Spatial Reasoning

6. Lateral Thinking

7. Statistical Reasoning

8. Analogical Reasoning

9. Critical Reasoning

10. Inductive Reasoning

11. Temporal Reasoning

12. Fuzzy Logic

13. Causal Reasoning

14. Counterfactual Reasoning

15. Means-End Analysis

16. Mathematical Reasoning

17. Moral Reasoning

18. Narrative Reasoning

19. Systems Reasoning

20. Non-monotonic Reasoning

21. Bayesian Reasoning

22. Intuitive Reasoning

23. Abductive Reasoning

24. Dialectical Reasoning

25. Associative Reasoning

Group Two: Statements

1. There’s a long line outside the food truck – it must be good.

2. Despite intense ongoing research efforts, today, there is no conclusive evidence to suggest that intelligent life exists elsewhere in the universe. Tomorrow will not reveal anything different.

3. An experienced firefighter suddenly orders everyone to evacuate a burning building.

4. She positioned her bed next to the light switch so that she wouldn’t have to get out of bed to turn off the lights before going to sleep every night.

5. Democracy requires protected speech with narrowly-defined restrictions on speech that directly incites violence.

6. Thanks to Darwin, we know that humans are a product of natural evolution. Had Darwin not developed his theory, we might still be operating under the belief that humans are a product of divine creation

7. To you, the shower might be “too hot,” but to me, it’s “just right”.

8. Every time the dog is let off the leash, he runs toward the same man. The man is likely his owner.

9. Let’s not be too quick to assume that the data breach was John’s fault – it’s been a while since we last updated our access control procedures, data security protocols, and threat detection systems.

10. When he entered the US Open, I didn’t have high hopes for my favorite player. However, my favorite player has dominated his last three matches, and I now believe he has a good chance of getting to the quarter-finals.

11. By building an understanding of fluid dynamics, you can enhance your understanding of how electricity behaves in circuits.

12. Sarah is always on time but traffic is pretty bad today, so I’m skeptical she’ll be here by the time the meeting starts.

13. To find the shortest and most efficient route between points A and B, I iteratively test multiple potential routes, optimizing for the best distance-time tradeoff.

14. This is the second time Sam got caught bullying. She has always treated her classmates with respect and kindness, however, her parents have recently gone through a messy divorce. Sam isn’t a bad kid, she’s just acting out because of the stress she feels at home.

15. Effective therapy is about more than learning to deal with your problems – you must understand their root causes, and to do so, you must be willing to honestly reflect on and explore all the life experiences that have made you who you are today.

16. Maybe the best way to engineer machine intelligence isn’t by recreating human intelligence but by synthesizing plant and animal intelligence.

Prompt 2 – Novel Reasoning

Now, you must suggest a novel reasoning method that synthesizes at least three reasoning methods from the task you just completed. You may choose from any of the reasoning methods previously included in group one. However, you may not, under any circumstances, include any reasoning methods that were not listed in group one – any attempt to do so will result in an immediate failure on this task.

To perform this second task, you must also:

- Provide at least three moderately detailed examples that illustrate the novel reasoning method you develop.

- Justify why you chose to synthesize certain reasoning methods to develop your final novel method.

- Critically reflect on your novel method, identifying key weaknesses and potential logic gaps.

When performing this task, you may receive “bonus points” if:

- You manage to coherently synthesize more than three reasoning methods to develop your novel reasoning method.

- The structure of the final reasoning method you develop can be generalized across both soft and hard problems. You must demonstrate this with examples.

- Your final, novel reasoning method is non-intuitive but easily comprehensible to a human reviewer with no specialized expertise across logic-based fields. You must explain why it is non-intuitive.

- You explain why you chose to pursue this optional component of the task.

Prompt 3 – Pattern/Trend Analysis for Prompt 2 Responses

Below, I’ve included four responses from four different AI models, including o1, o3-mini-high, Grok 3, and Claude 3.7 Sonnet.

I want you to review each of these responses and administer a trend and pattern analysis to comprehensively reveal any common response themes as well as key similarities and differences.

o1 response:

[copy/paste response]

o3-mini-high response:

[copy/paste response]

Grok 3 response:

[copy/paste response]

Claude 3.7 Sonnet response:

[copy/paste response]
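The copy-paste step behind prompt 3 can also be scripted. The sketch below is one possible way to assemble the prompt text from the four responses; the `build_prompt3` helper is hypothetical, and the responses in this experiment were pasted in manually. The introductory wording is taken from the prompt above.

```python
from typing import Dict

# Order in which the responses appear in prompt 3.
MODELS = ["o1", "o3-mini-high", "Grok 3", "Claude 3.7 Sonnet"]

INTRO = (
    "Below, I’ve included four responses from four different AI models, "
    "including o1, o3-mini-high, Grok 3, and Claude 3.7 Sonnet.\n\n"
    "I want you to review each of these responses and administer a trend and "
    "pattern analysis to comprehensively reveal any common response themes as "
    "well as key similarities and differences."
)


def build_prompt3(responses: Dict[str, str]) -> str:
    """Assemble prompt 3 from each model's prompt 2 response."""
    missing = [m for m in MODELS if m not in responses]
    if missing:
        raise ValueError(f"missing responses for: {missing}")

    sections = [INTRO]
    for model in MODELS:
        sections.append(f"{model} response:\n\n{responses[model]}")
    return "\n\n".join(sections)


# Placeholder text stands in for the real responses.
prompt3 = build_prompt3({m: "[copy/paste response]" for m in MODELS})
print(prompt3)
```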

Outputs

o1

Prompt 1: Response (thought for 1 min 26 seconds)

Below is one way to pair the 16 statements (Group Two) with the 16 most suitable reasoning methods (Group One). Each pairing includes:

- A confidence score (on a 1–10 scale).

- An explanation of the logic behind why that statement best illustrates the chosen reasoning method.

- Any doubts/concerns/blind spots about the pairing.

- Two concise real-world examples showing how that same reasoning method might be used to solve a problem.

After these 16 pairings, there are 9 reasoning methods left unused. Those 9 are:

- Probabilistic Reasoning

- Spatial Reasoning

- Statistical Reasoning

- Critical Reasoning

- Temporal Reasoning

- Mathematical Reasoning

- Moral Reasoning

- Abductive Reasoning

- Associative Reasoning

- Statement: “There’s a long line outside the food truck – it must be good.”

Chosen Method: 3. Heuristic Reasoning

Confidence Score: 9/10

Why This Pairing?

Heuristic reasoning relies on “rules of thumb” or mental shortcuts rather than formal, step-by-step logic. Seeing a long line and concluding “the food must be good” is exactly the kind of quick, experience-based inference people often make—even if it is not rigorously proven.

Doubts/Concerns/Blind Spots

- One could argue this might be a weak form of inductive reasoning (“popular places tend to be better” based on prior observations).

- However, the statement’s immediate leap to “must be good” from a single cue (the line) is more characteristic of a simple heuristic.

Two Real-World Problem-Solving Examples

- Job Applications: Someone might apply for a position at a company because “everyone says it’s a great place to work.” There’s no guarantee it is great, but the person uses that heuristic as a shortcut to decide.

- Picking a Restaurant: Seeing a crowded restaurant vs. an empty one, many diners pick the crowded option, assuming “crowded” implies “tasty.”

- Statement: “Despite intense ongoing research efforts, today, there is no conclusive evidence to suggest that intelligent life exists elsewhere in the universe. Tomorrow will not reveal anything different.”

Chosen Method: 2. Deductive Reasoning

Confidence Score: 7/10

Why This Pairing?

Classical (often “monotonic”) deductive reasoning applies a premise to reach a definitive conclusion. Here, the speaker treats “no evidence now” as if it logically entails “no discovery tomorrow.” Although it is actually unsound (new data might emerge!), it exemplifies a rigid deductive-style claim: if the premise “no evidence exists” is taken as absolute, they deduce it will remain so.

Doubts/Concerns/Blind Spots

- Realistically, new evidence tomorrow could appear, which would make strictly monotonic deduction questionable.

- It might look like stubborn or overly simplistic reasoning. Still, the speaker is making a claim of “if it’s not here now, it won’t be here tomorrow,” consistent with an overly strict (and flawed) deductive stance.

Two Real-World Problem-Solving Examples

- Legal Reasoning: A lawyer might argue, “Since no evidence of wrongdoing was found in the full audit, my client must be innocent—further investigation is futile.” They treat the present absence of proof as a full logical conclusion.

- Safety Checks: An inspector might say, “If the machine shows no defect in repeated tests, then it’s safe,” deducing future safety from a present observation (though in reality, future tests could reveal a problem).

- Statement: “An experienced firefighter suddenly orders everyone to evacuate a burning building.”

Chosen Method: 22. Intuitive Reasoning

Confidence Score: 9/10

Why This Pairing?

Intuitive reasoning draws on rapid, experience-based “gut feelings” rather than a step-by-step analysis. A veteran firefighter often develops a sixth sense about structural integrity or smoke conditions; they may not have time for a long logical proof, but their intuition warns them to evacuate immediately.

Doubts/Concerns/Blind Spots

- One could argue that the firefighter might be using partial analysis or heuristics.

- However, experts often use intuition informed by deep experience—making “intuitive reasoning” the best fit.

Two Real-World Problem-Solving Examples

- Medical Diagnosis: An experienced nurse might “just sense” a patient is crashing soon without having read every lab result—her intuition picks up subtle cues.

- Pilot Emergency: A seasoned pilot might abruptly take action based on an “instinct” that the aircraft’s behavior spells imminent trouble, even if no single alarm is lit.

- Statement: “She positioned her bed next to the light switch so that she wouldn’t have to get out of bed to turn off the lights before going to sleep every night.”

Chosen Method: 15. Means-End Analysis

Confidence Score: 8/10

Why This Pairing?

Means-end analysis involves identifying a goal (“turn off lights without getting up”) and then adjusting the means or intermediate steps to achieve that. Placing the bed right beside the switch minimizes the gap between the current state (lights on, she’s in bed) and the desired state (lights off, still in bed).

Doubts/Concerns/Blind Spots

- One might label it a simple life hack or a cost–benefit approach.

- Still, the explicit theme of bridging the gap between initial and desired states is characteristic of means-end analysis.

Two Real-World Problem-Solving Examples

- Software Implementation: A developer sets up continuous integration tools (means) so that every code commit gets tested automatically (goal: catch errors early without extra manual testing).

- Trip Planning: To visit three different friends efficiently (goal), you rearrange your travel route and pick the best transportation (means) to minimize total travel time.

- Statement: “Democracy requires protected speech with narrowly-defined restrictions on speech that directly incites violence.”

Chosen Method: 24. Dialectical Reasoning

Confidence Score: 7/10

Why This Pairing?

Dialectical reasoning involves navigating opposing positions (unfettered free speech vs. necessary legal limits) and arriving at a synthesis or balanced stance. This statement highlights that democracy needs robust freedoms but also acknowledges limits when speech becomes violent incitement.

Doubts/Concerns/Blind Spots

- Could also be framed as “moral reasoning” about what is just.

- Still, the language of balancing (protected vs. restricted) resonates strongly with dialectical thinking: reconciling contradictory demands.

Two Real-World Problem-Solving Examples

- Environmental Policy: Weighing economic growth (thesis) against environmental protection (antithesis) to propose a balanced policy (synthesis).

- Work–Life Balance: You weigh your desire for a high-paying job against the need for personal time, ultimately crafting a workable compromise schedule.

- Statement: “Thanks to Darwin, we know that humans are a product of natural evolution. Had Darwin not developed his theory, we might still be operating under the belief that humans are a product of divine creation.”

Chosen Method: 14. Counterfactual Reasoning

Confidence Score: 10/10

Why This Pairing?

Counterfactual reasoning explicitly asks “what if things had happened differently?” The statement imagines an alternative history—no Darwinian theory—leading to a different widely-held belief about human origins.

Doubts/Concerns/Blind Spots

- Straightforwardly describes a “what if” scenario. No major ambiguity here.

Two Real-World Problem-Solving Examples

- Business Strategy: “If we hadn’t opened a second store last year, would we have weathered the recession better?”

- Historical Analysis: “Had the Allies not broken the Enigma code, World War II could have lasted far longer.”

- Statement: “To you, the shower might be ‘too hot,’ but to me, it’s ‘just right.’”

Chosen Method: 12. Fuzzy Logic

Confidence Score: 8/10

Why This Pairing?

Fuzzy logic deals with degrees or continuous ranges of truth rather than simple true/false binaries. “Hot” vs. “comfortable” is not a strict yes/no but rather subjective and graded—“too hot” for one person overlaps with “just right” for another.

Doubts/Concerns/Blind Spots

- Could be viewed simply as subjective opinion, not necessarily formal fuzzy logic.

- Yet the notion of different thresholds for “hot” matches how fuzzy logic handles linguistic variables and gradations.

Two Real-World Problem-Solving Examples

- Thermostat Control: An HVAC system that adjusts temperature on partial degrees of comfort, not just “on/off.”

- Machine Vision: A system that detects whether an image is “bright” or “dim” on a continuum, rather than only black or white thresholds.
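
To make the fuzzy logic pairing more concrete, here is a minimal Python sketch of graded membership for the label "too hot." The function names and the temperature thresholds are assumptions chosen purely for illustration; they do not come from the model's response or from any standard.

```python
def ramp_up(x, low, high):
    """Degree of membership rising linearly from 0 at `low` to 1 at `high`."""
    if x <= low:
        return 0.0
    if x >= high:
        return 1.0
    return (x - low) / (high - low)


# Hypothetical personal thresholds in degrees Celsius (illustrative only).
def too_hot_for_you(temp_c):
    return ramp_up(temp_c, 36.0, 40.0)


def too_hot_for_me(temp_c):
    return ramp_up(temp_c, 40.0, 44.0)


shower = 39.0
print(too_hot_for_you(shower))  # 0.75: mostly "too hot"
print(too_hot_for_me(shower))   # 0.0: not "too hot" at all
```

The same water temperature gets a different degree of "too hot" for each person, which is the gradation the statement describes.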

- Statement: “Every time the dog is let off the leash, he runs toward the same man. The man is likely his owner.”

Chosen Method: 10. Inductive Reasoning

Confidence Score: 10/10

Why This Pairing?

Inductive reasoning draws generalizations from repeated observations. The repeated pattern—dog consistently runs to the same person—leads to a conclusion: “That person is probably the owner.”

Doubts/Concerns/Blind Spots

- Induction is not guaranteed to be correct (could be a favorite park ranger, not the owner).

- Still, it’s a textbook example of forming a conclusion from recurring observations.

Two Real-World Problem-Solving Examples

- Weather Forecasting: “It’s rained the last three afternoons, so I predict it’ll rain this afternoon, too.”

- Product Demand: A business sees a spike in sales each December and infers that this must be holiday-driven.
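
One classical way to put a number on an inductive generalization is Laplace's rule of succession. This is a technique swapped in here for illustration, not something the model's response uses, and it assumes each observation is an independent trial with an unknown, uniformly distributed success rate. The function name is hypothetical.

```python
def rule_of_succession(successes, trials):
    """Laplace's estimate that the next trial succeeds: (s + 1) / (n + 2)."""
    return (successes + 1) / (trials + 2)


# "It's rained the last three afternoons": 3 rainy afternoons out of 3 observed.
print(rule_of_succession(3, 3))  # 0.8
```

The estimate stays below 1 however long the streak runs, which matches the caveat above that induction is never guaranteed to be correct.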

- Statement: “Let’s not be too quick to assume that the data breach was John’s fault – it’s been a while since we last updated our access control procedures, data security protocols, and threat detection systems.”

Chosen Method: 19. Systems Reasoning

Confidence Score: 9/10

Why This Pairing?

Systems reasoning looks at multiple interconnected factors rather than isolating a single cause. Here, the statement urges us to consider the entire system (procedures, protocols, detection) rather than jumping to blame one person.

Doubts/Concerns/Blind Spots

- Could also be framed as “critical thinking.” However, it specifically references the interplay of an entire security system.

- “Systems reasoning” is fitting because it calls out the complex interdependencies and possible vulnerabilities in that whole environment.

Two Real-World Problem-Solving Examples

- Supply Chain Disruption: Instead of blaming a single worker, investigate shipping schedules, inventory management, and vendor reliability.

- Software Outage: You check everything—server architecture, network, database configuration—rather than pinning blame on one developer’s code.

- Statement: “When he entered the US Open, I didn’t have high hopes for my favorite player. However, my favorite player has dominated his last three matches, and I now believe he has a good chance of getting to the quarter-finals.”

Chosen Method: 21. Bayesian Reasoning

Confidence Score: 9/10

Why This Pairing?

Bayesian reasoning involves updating a prior belief when new evidence appears. The speaker’s prior (“I didn’t have high hopes”) changes to a revised posterior belief (“I now believe he has a good chance”), thanks to the evidence of “dominating the last three matches.”

Doubts/Concerns/Blind Spots

- Could be generalized as “probabilistic reasoning.”

- Given the explicit update in belief after seeing results, Bayesian is the more precise label.

Two Real-World Problem-Solving Examples

- Spam Filtering: Each new email’s features (sender, keywords) update the filter’s probability that it’s spam vs. not spam.

- Medical Diagnosis: A doctor starts with an initial hypothesis. Each new test result updates the probability that a certain illness is present.
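
The prior-to-posterior update described in this pairing can be sketched with Bayes' rule. The hypothesis ("the player is in top form"), the prior of 0.2, and the two likelihoods are all hypothetical numbers picked for illustration; none of them appear in the statement or the model's response.

```python
def bayes_update(prior, p_evidence_if_true, p_evidence_if_false):
    """Return P(hypothesis | evidence) using Bayes' rule."""
    numerator = prior * p_evidence_if_true
    return numerator / (numerator + (1 - prior) * p_evidence_if_false)


# Hypothetical numbers: low initial hopes, then three dominant wins in a row.
belief = 0.2                  # prior: "he is in top form"
P_DOMINANT_IF_IN_FORM = 0.8   # assumed likelihood of a dominant win if in form
P_DOMINANT_IF_NOT = 0.3       # assumed likelihood of a dominant win otherwise

for match in range(3):
    belief = bayes_update(belief, P_DOMINANT_IF_IN_FORM, P_DOMINANT_IF_NOT)

print(round(belief, 2))  # 0.83
```

Each match moves the belief a bit further (0.2, then 0.4, 0.64, and about 0.83), mirroring how the speaker's low expectations turn into cautious optimism.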

- Statement: “By building an understanding of fluid dynamics, you can enhance your understanding of how electricity behaves in circuits.”

Chosen Method: 8. Analogical Reasoning

Confidence Score: 10/10

Why This Pairing?

Analogical reasoning uses parallel structures between two domains to help explain or predict. The classic analogy compares fluid flowing through pipes (fluid dynamics) to electrical current in circuits, transferring insights from one domain to the other.

Doubts/Concerns/Blind Spots

- Someone might argue it’s also “heuristic” or “model-based” reasoning, but explicitly focusing on parallels across domains is analogical.

Two Real-World Problem-Solving Examples

- Teaching Economics: Explaining “money flow” in an economy by comparing it to water flowing in and out of a reservoir.

- Software Engineering: “Think of concurrency like multiple lanes on a highway,” to show how processes share resources.

- Statement: “Sarah is always on time but traffic is pretty bad today, so I’m skeptical she’ll be here by the time the meeting starts.”

Chosen Method: 20. Non-monotonic Reasoning

Confidence Score: 8/10

Why This Pairing?

Non-monotonic reasoning revises or withdraws previous conclusions in light of new evidence. Initially, one might conclude “Sarah will surely be on time” (based on her track record), but new information (heavy traffic) updates or overrides that conclusion.

Doubts/Concerns/Blind Spots

- Could also resemble a probabilistic approach, but “non-monotonic” is the hallmark where a previously confident conclusion is retracted once fresh facts appear.

Two Real-World Problem-Solving Examples

- Weather Plans: Typically, “We’ll have a picnic if it’s sunny,” but when you see sudden dark clouds, you revise your conclusion and prepare for indoor seating.

- Restaurant Assumptions: “This dish is always great,” but if a friend just had a bad experience, you change your mind about ordering it.
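
The defining feature of non-monotonic reasoning, that adding a fact can remove a conclusion, can be shown in a few lines. This is a toy sketch: the fact names and the single default rule are hypothetical and stand in for a real default-logic system.

```python
def will_be_on_time(facts):
    """Default rule: a punctual person arrives on time unless an exception is known."""
    if "heavy_traffic" in facts:   # a known exception defeats the default
        return False
    return "always_punctual" in facts


facts = {"always_punctual"}
print(will_be_on_time(facts))  # True: the default conclusion holds

facts.add("heavy_traffic")     # new information arrives
print(will_be_on_time(facts))  # False: the earlier conclusion is withdrawn
```

In classical (monotonic) logic, learning more can only add conclusions; here, learning about the traffic takes one away.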

- Statement: “To find the shortest and most efficient route between points A and B, I iteratively test multiple potential routes, optimizing for the best distance-time tradeoff.”

Chosen Method: 1. Computational Reasoning

Confidence Score: 9/10

Why This Pairing?

Computational reasoning often involves algorithmic, step-by-step approaches—e.g., searching various paths, comparing results, then iterating. This statement depicts a systematic, near-algorithmic procedure that’s typical of computational or algorithmic problem-solving.

Doubts/Concerns/Blind Spots

- Could also be seen as “mathematical reasoning,” but the iterative “test multiple routes” approach is reminiscent of a computer search algorithm (like Dijkstra’s or A*).

Two Real-World Problem-Solving Examples

- Navigation App: An app systematically checks various paths, traffic data, and then picks the route with the lowest overall travel time.

- Scheduling: A manager writes a program to iteratively shuffle tasks among staff, measuring efficiency until the best schedule emerges.
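
Since the response names Dijkstra's algorithm as a point of comparison, here is a minimal Python sketch of it. The road network and its edge costs are hypothetical, and the sketch assumes the distance-time tradeoff has already been folded into a single non-negative cost per road segment.

```python
import heapq


def shortest_path_cost(graph, start, goal):
    """Dijkstra's algorithm: lowest total cost from start to goal, or None."""
    frontier = [(0, start)]   # priority queue of (cost so far, node)
    best = {start: 0}         # cheapest known cost to reach each node
    while frontier:
        cost, node = heapq.heappop(frontier)
        if cost > best.get(node, float("inf")):
            continue          # stale queue entry; a cheaper route was found
        if node == goal:
            return cost
        for neighbor, weight in graph.get(node, {}).items():
            new_cost = cost + weight
            if new_cost < best.get(neighbor, float("inf")):
                best[neighbor] = new_cost
                heapq.heappush(frontier, (new_cost, neighbor))
    return None               # goal is unreachable


# Hypothetical road network: node -> {neighbor: cost}.
roads = {"A": {"C": 4, "D": 2}, "D": {"C": 1, "B": 7}, "C": {"B": 3}}
print(shortest_path_cost(roads, "A", "B"))  # 6, via A -> D -> C -> B
```

Unlike the "test multiple routes" phrasing in the statement, the algorithm never enumerates whole routes; it always expands the cheapest partial route first, which is what makes it systematic rather than trial and error.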

- Statement: “This is the second time Sam got caught bullying. She has always treated her classmates with respect and kindness, however, her parents have recently gone through a messy divorce. Sam isn’t a bad kid, she’s just acting out because of the stress she feels at home.”

Chosen Method: 13. Causal Reasoning

Confidence Score: 9/10

Why This Pairing?

Causal reasoning involves identifying causes behind observed effects. The speaker tries to explain Sam’s uncharacteristic bullying as caused by emotional stress from her parents’ divorce. They are linking Sam’s behavior to a cause (home life stress).

Doubts/Concerns/Blind Spots

- Could incorporate empathy or moral judgment, but the statement specifically highlights the cause (“messy divorce”) behind the effect (bullying).

- The logic is straightforwardly about cause-and-effect.

Two Real-World Problem-Solving Examples

- Healthcare: A doctor sees a patient’s sudden weight gain and traces it to medication side effects or recent depression.

- Engineering Failures: After a bridge collapse, investigators identify faulty supports as the main cause, rather than blaming weather alone.

- Statement: “Effective therapy is about more than learning to deal with your problems – you must understand their root causes, and to do so, you must be willing to honestly reflect on and explore all the life experiences that have made you who you are today.”

Chosen Method: 18. Narrative Reasoning

Confidence Score: 8/10

Why This Pairing?

Narrative reasoning uses stories, personal histories, and the unfolding of events over time to make sense of situations. Therapy often encourages people to integrate experiences into a cohesive personal narrative—the statement emphasizes reflecting on life experiences as crucial to healing.

Doubts/Concerns/Blind Spots

- One might argue causal reasoning is also in play. However, the emphasis on “reflect on and explore all the life experiences that made you who you are” is best captured by narrative logic: weaving a coherent story of one’s life.

Two Real-World Problem-Solving Examples

- Leadership Coaching: A coach helps an executive see how past work and personal stories shape their leadership style.

- Cultural Mediation: A conflict mediator asks two groups to share their historical narratives so each side grasps the other’s lived context and experiences.

- Statement: “Maybe the best way to engineer machine intelligence isn’t by recreating human intelligence but by synthesizing plant and animal intelligence.”

Chosen Method: 6. Lateral Thinking

Confidence Score: 9/10

Why This Pairing?

Lateral thinking breaks away from conventional assumptions to generate unorthodox, “outside the box” solutions. Suggesting a fusion of plant and animal intelligence—rather than mimicking the human mind—shows a distinctly inventive, non-traditional approach.

Doubts/Concerns/Blind Spots

- Some might call it “associative” or “creative” reasoning. But the hallmark of lateral thinking is challenging mainstream lines of thought to spark fresh perspectives.

Two Real-World Problem-Solving Examples

- Product Design: Instead of copying standard ergonomic chairs, a designer looks at how trees bend in the wind and how dogs rest, then merges these ideas into a brand-new seating concept.

- Urban Planning: A city official looks at how fungal networks distribute nutrients (nature’s approach) and tries to map roads similarly, breaking out of standard grid designs.

Final Pairings in Brief

(Statement 1) → (3) Heuristic Reasoning

(Statement 2) → (2) Deductive Reasoning

(Statement 3) → (22) Intuitive Reasoning

(Statement 4) → (15) Means-End Analysis

(Statement 5) → (24) Dialectical Reasoning

(Statement 6) → (14) Counterfactual Reasoning

(Statement 7) → (12) Fuzzy Logic

(Statement 8) → (10) Inductive Reasoning

(Statement 9) → (19) Systems Reasoning

(Statement 10) → (21) Bayesian Reasoning

(Statement 11) → (8) Analogical Reasoning

(Statement 12) → (20) Non-monotonic Reasoning

(Statement 13) → (1) Computational Reasoning

(Statement 14) → (13) Causal Reasoning

(Statement 15) → (18) Narrative Reasoning

(Statement 16) → (6) Lateral Thinking

Unused Methods (9 total):

- Probabilistic Reasoning

- Spatial Reasoning

- Statistical Reasoning

- Critical Reasoning

- Temporal Reasoning

- Mathematical Reasoning

- Moral Reasoning

- Abductive Reasoning

- Associative Reasoning

These 16 matches, with their explanations and real-world examples, complete the task requirements.

Prompt 2: Response (thought for 12 seconds)

Below is a novel reasoning method—Dialectical-Fuzzy Lateral Systems Reasoning (DFLSR)—synthesized from four methods listed in Group One:

- (6) Lateral Thinking (breaking out of conventional frames)

- (12) Fuzzy Logic (handling nuance and degrees)

- (19) Systems Reasoning (examining interdependencies and the “big picture”)

- (24) Dialectical Reasoning (balancing opposing views or constraints to form a coherent synthesis)

- Description of the Novel Method

Dialectical-Fuzzy Lateral Systems Reasoning is an approach that:

- Leverages dialectical thinking to acknowledge and synthesize seemingly contradictory viewpoints or requirements.

- Uses fuzzy logic to handle gradations of truth and subjective judgments without forcing everything into all-or-nothing categories.

- Applies lateral thinking to escape conventional assumptions and produce unorthodox solutions.

- Engages systems reasoning so that every part of the problem—and its interactions with the environment or other components—is considered.

The method is non-intuitive because it tries to juggle opposing perspectives (dialectics), navigate gray areas (fuzzy logic), and then propose outside-the-box solutions (lateral thinking) within a broad interconnected context (systems reasoning). Yet it remains comprehensible because it can be broken down into four readily understood steps, each corresponding to one of the methods above.

- Three Moderately Detailed Examples

Example A: Urban Traffic and Public Health (Hard + Soft Blend)

- Systems Analysis: Map out how traffic congestion impacts air quality, public health, commute times, and economic activities. Recognize multiple interacting elements: city layout, population growth, work schedules, public transit capacity.

- Dialectical Reasoning: Balance the tension between a car-centric culture (convenience, existing roads) and the push for greener public transport (lower emissions, cost-savings, slower travel times). A pure “ban cars” or “keep the status quo” solution overlooks important trade-offs.

- Fuzzy Logic: Accept that solutions may not be purely “good” or “bad.” A partial congestion fee in certain zones might be “somewhat good for air quality” but also “partially inconvenient.” Instead of labeling it “right or wrong,” use continuous degrees of cost/benefit for each stakeholder.

- Lateral Thinking: Propose novel ideas—perhaps a “flexible” commuter lane that transitions between public transport and private use based on real-time city traffic, or a “bike-share–car-share hybrid.”

Outcome: The final solution emerges by iterating through trade-offs (dialectics), staying open to gray areas (fuzzy logic), seeking an inventive approach (lateral thinking), and keeping the big city ecosystem in mind (systems reasoning).

Example B: Designing an Inclusive Virtual Education Platform (Primarily “Soft” Problem)

- Systems Analysis: Identify how students, teachers, administrators, parents, and IT support teams all interact in the virtual classroom. Understand hardware constraints, time zone differences, content moderation, and student engagement patterns.

- Dialectical Reasoning: Address conflicting needs—e.g., a teacher’s need for quiet vs. a student’s need for flexible ways of communication (some prefer text chat, others prefer speaking). Acknowledge tension between robust moderation and freedom of expression.

- Fuzzy Logic: Accept that “appropriate content” can be context-dependent (an image might be “mildly distracting” to one group but “acceptable” to another). Instead of imposing black-and-white rules, incorporate layered guidelines (e.g., different conversation channels or “levels” of permissible off-topic chat).

- Lateral Thinking: Develop new classroom features—maybe “rotating leadership roles” for students, or “peer-led study pods,” or “digital challenge boards” that let students gather micro-credentials in real time.

Outcome: The platform becomes flexible and addresses competing demands in a nuanced way, with fresh, surprising features grounded in a holistic system view.

Example C: Product Development for a Household Robot (Primarily “Hard” Problem)

- Systems Analysis: Examine mechanical design, software integration, user needs, energy consumption, manufacturing cost, and integration with existing smart-home ecosystems.

- Dialectical Reasoning: Reconcile the pull between “premium features” (which raise cost) and “affordability” (which improves market adoption). Balance durability vs. aesthetic design, or voice-activated convenience vs. concerns about privacy.

- Fuzzy Logic: Understand that user satisfaction doesn’t come in a neat 0/1 form: the robot might be “slightly too slow” but “highly intuitive.” Use continuous feedback metrics (e.g., a 1–10 scale for features like responsiveness or noise levels).

- Lateral Thinking: Break from standard “humanoid” form by referencing plant root systems or birdlike articulation for the robot’s limbs, resulting in a more stable, flexible design.

Outcome: Through dialectical-fuzzy-lateral systems, the development team lands on a unique robot that solves practical problems in a cost-effective and user-friendly manner—while introducing creative design elements.

- Justification for Synthesizing These Four Methods

- Why Dialectical Reasoning?

- Real-world problems often involve conflicting stakeholder interests. Dialectics helps us see the mutual tension and produce a synthesis rather than ignoring or favoring one side.

- Why Fuzzy Logic?

- Many decisions lie in gray areas, where no absolute “yes/no” or “true/false” applies. Fuzzy logic helps handle subjective or partial truths more gracefully.

- Why Lateral Thinking?

- Incremental improvements sometimes aren’t enough. Lateral thinking fosters a non-linear, imaginative leap that can yield groundbreaking ideas.

- Why Systems Reasoning?

- Most challenges are embedded in interconnected systems. A local fix that ignores the system can cause unintended consequences elsewhere.

By combining these four, DFLSR can tackle both hard (engineering, algorithmic) and soft (social, organizational) problems comprehensively.

- Critical Reflection: Key Weaknesses & Potential Logic Gaps

- Over-Complexity:

- Weaving in four methods demands time and resources. Teams may get bogged down in analyzing endless interconnections or generating too many “outside-the-box” options.

- Risk of Dilution:

- If not managed well, the blending of dialectical, fuzzy, lateral, and systems elements can result in a muddy process without a clear final decision.

- Difficulty in Quantifying Outcomes:

- Fuzzy logic can help with partial truths, but combining it with big-picture “systems” analysis and imaginative “lateral” leaps might make it hard to measure success or compare solutions.

- Resistance to Novel Ideas:

- Lateral thinking solutions can appear unorthodox. Stakeholders who prefer more conventional approaches may resist or dismiss them, stalling acceptance and implementation.

Overall, Dialectical-Fuzzy Lateral Systems Reasoning tries to exploit the best features of four distinct methods but risks confusion or pushback if teams cannot effectively manage the process or if stakeholders are unprepared for inventive, complex, and nuanced solutions.

- Why Pursue This “Bonus” Component?

- Coherence & Breadth: By synthesizing more than three methods, DFLSR can adapt to diverse problem sets—technical, social, economic, etc.

- Generality: Demonstrating examples from city planning, educational software, and robotics shows how DFLSR can be applied to a broad range of “hard” (technical) and “soft” (human-centered) contexts.

- Non-Intuitive Yet Understandable: While the combined approach is unusual (non-intuitive) because it merges conflicting or orthogonal mindsets (dialectics, fuzziness, open-ended creativity, systematic mapping), each component is itself relatively straightforward for non-specialists to grasp.

- Human-Centered Clarity: Breaking the method down step by step ensures that even stakeholders with no specialized logical training can follow the rationale for each part.

o3-mini-high

Prompt 1: Response (thought for 2 min 31 seconds)

Below is one way to form 16 distinct pairs. In each case I explain why the statement best “illustrates” the selected reasoning method, assign a confidence score, note any reservations, and then provide two brief real‐world problem–solving examples.

Pair 1

- Statement: “There’s a long line outside the food truck – it must be good.”

- Reasoning Method: Heuristic Reasoning

- Confidence Score: 90%

- Explanation: This statement uses a rule‐of‐thumb—if many people are waiting, the product is assumed to be good. That is the essence of heuristic reasoning, where mental shortcuts guide conclusions.

- Doubts/Concerns: One might also see this as an associative link (long line equals quality), but the shortcut nature makes “heuristic” the best match.

- Real–World Examples:

- Choosing a restaurant because it’s busy even though you haven’t read reviews.

- Assuming a gadget is popular (and therefore likely effective) because it’s frequently featured in “top picks” lists.

Pair 2

- Statement: “Despite intense ongoing research efforts, today, there is no conclusive evidence to suggest that intelligent life exists elsewhere in the universe. Tomorrow will not reveal anything different.”

- Reasoning Method: Deductive Reasoning

- Confidence Score: 80%

- Explanation: The speaker treats the lack of evidence as a given premise and deduces with certainty that the future (tomorrow) will mirror today’s state. Although this “deduction” is debatable, its structure best fits deductive reasoning.

- Doubts/Concerns: The claim is overly rigid; real scientific inquiry might update beliefs with new evidence (suggesting non-monotonic or inductive elements), but the statement’s definitive tone pushes it into a deductive style.

- Real–World Examples:

- In a math proof, if all premises imply a result, one concludes the result must follow.

- A legal argument that, if no evidence exists now and no changes occur, the verdict must remain the same.

Pair 3

- Statement: “An experienced firefighter suddenly orders everyone to evacuate a burning building.”

- Reasoning Method: Intuitive Reasoning

- Confidence Score: 90%

- Explanation: The firefighter’s rapid decision—based on experience and gut feeling—illustrates intuitive reasoning where deep-seated expertise leads to split-second judgments without a formal analysis.

- Doubts/Concerns: Although one could argue it’s an abductive “best explanation” for danger, the emphasis on experience and immediate action favors an intuitive approach.

- Real–World Examples:

- A seasoned doctor making a rapid diagnosis in an emergency room.

- A pilot instantly adjusting the flight path based on a sudden change in weather.

Pair 4

- Statement: “She positioned her bed next to the light switch so that she wouldn’t have to get out of bed to turn off the lights before going to sleep every night.”

- Reasoning Method: Means-End Analysis

- Confidence Score: 95%

- Explanation: Here the problem (inconvenience) is solved by analyzing the gap between the current situation and the desired ease of operation, then acting to bridge that gap—key to means–end analysis.

- Doubts/Concerns: It might also be seen as a practical or convenience decision, but its systematic focus on “means” to achieve an “end” makes it a clear example.

- Real–World Examples:

- A student rearranging study materials to improve efficiency in exam preparation.

- An engineer designing a tool by identifying necessary functions and then developing components to fulfill them.

Pair 5

- Statement: “Democracy requires protected speech with narrowly-defined restrictions on speech that directly incites violence.”

- Reasoning Method: Moral Reasoning

- Confidence Score: 95%

- Explanation: This statement discusses what democracy “requires” by weighing values about freedom and harm. It is normative, setting an ethical standard for speech, which is the heart of moral reasoning.

- Doubts/Concerns: One could see elements of critical or dialectical reasoning in debating policy details, but the focus on “ought” and ethical principles clearly fits moral reasoning.

- Real–World Examples:

- Debating laws on censorship where the balance between free expression and public safety is examined.

- Formulating workplace policies that protect employee rights while curbing harmful behavior.

Pair 6

- Statement: “Thanks to Darwin, we know that humans are a product of natural evolution. Had Darwin not developed his theory, we might still be operating under the belief that humans are a product of divine creation.”

- Reasoning Method: Counterfactual Reasoning

- Confidence Score: 100%

- Explanation: The statement explicitly considers an alternative history (“had Darwin not developed his theory…”) to emphasize the impact of his work. This “what if” analysis is a textbook example of counterfactual reasoning.

- Doubts/Concerns: None significant—the counterfactual structure is unmistakable.

- Real–World Examples:

- Considering how modern transportation would differ if the automobile had never been invented.

- Imagining how business strategies might change if a disruptive technology had not emerged.

Pair 7

- Statement: “To you, the shower might be ‘too hot,’ but to me, it’s ‘just right’.”

- Reasoning Method: Fuzzy Logic

- Confidence Score: 95%

- Explanation: The evaluation of “hot” versus “just right” is not binary but subjective and graded—a hallmark of fuzzy logic, where truth values are a matter of degree rather than yes/no.

- Doubts/Concerns: While the statement clearly reflects subjectivity, it might be mistaken for merely personal taste; however, fuzzy logic formalizes such gradations.

- Real–World Examples:

- Thermostat controls that adjust temperature within a range rather than fixed on/off settings.

- Grading systems that award partial credit based on the degree of correctness.

Pair 8

- Statement: “Every time the dog is let off the leash, he runs toward the same man. The man is likely his owner.”

- Reasoning Method: Abductive Reasoning

- Confidence Score: 100%

- Explanation: From repeated observation, the best explanation (inference to the best explanation) is that the man is the owner. This is the essence of abductive reasoning—inferring the most plausible cause from limited evidence.

- Real–World Examples:

- A doctor observing a set of symptoms and inferring the most likely diagnosis.

- A detective piecing together clues to identify the most likely suspect.

Pair 9

- Statement: “Let’s not be too quick to assume that the data breach was John’s fault – it’s been a while since we last updated our access control procedures, data security protocols, and threat detection systems.”

- Reasoning Method: Causal Reasoning

- Confidence Score: 90%

- Explanation: The statement questions a simple cause (John’s fault) by suggesting a broader systemic cause (outdated security measures). It focuses on the cause–effect relationship behind the breach.

- Doubts/Concerns: One might also argue for a systems approach, but the emphasis on identifying the proper cause makes causal reasoning the stronger match.

- Real–World Examples:

- In accident investigations, distinguishing whether a car crash was due to driver error or poor road conditions.

- In manufacturing, determining if a product defect is caused by faulty machinery rather than operator error.

Pair 10

- Statement: “When he entered the US Open, I didn’t have high hopes for my favorite player. However, my favorite player has dominated his last three matches, and I now believe he has a good chance of getting to the quarter-finals.”

- Reasoning Method: Bayesian Reasoning

- Confidence Score: 100%

- Explanation: The speaker updates their belief about the player’s chances based on new evidence (recent match performance), which is the core of Bayesian reasoning—updating prior beliefs with new data.

- Real–World Examples:

- Updating the probability of rain during the day as new weather radar data comes in.

- A medical diagnosis that is adjusted as additional test results become available.

Pair 11

- Statement: “By building an understanding of fluid dynamics, you can enhance your understanding of how electricity behaves in circuits.”

- Reasoning Method: Analogical Reasoning

- Confidence Score: 95%

- Explanation: This statement draws an analogy between fluid flow and electrical circuits. It uses similarities from one domain (fluid dynamics) to illuminate another (electricity), which is the hallmark of analogical reasoning.

- Real–World Examples:

- Explaining computer network traffic by comparing it to vehicular traffic on highways.

- Using the analogy of water flow in pipes to help understand current flow in electronics.

Pair 12

- Statement: “Sarah is always on time but traffic is pretty bad today, so I’m skeptical she’ll be here by the time the meeting starts.”

- Reasoning Method: Probabilistic Reasoning

- Confidence Score: 95%

- Explanation: The speaker assesses the likelihood of Sarah’s punctuality by considering usual performance and current adverse conditions (traffic). This evaluation of probability best matches probabilistic reasoning.

- Real–World Examples:

- Estimating the chance of flight delays by looking at weather and historical data.

- A project manager assessing deadlines by weighing past performance against current resource constraints.

Pair 13

- Statement: “To find the shortest and most efficient route between points A and B, I iteratively test multiple potential routes, optimizing for the best distance-time tradeoff.”

- Reasoning Method: Computational Reasoning

- Confidence Score: 100%

- Explanation: The process of testing various routes and optimizing iteratively is akin to algorithmic problem solving. This systematic, computation–oriented approach is the essence of computational reasoning.

- Real–World Examples:

- A navigation system running algorithms to calculate the fastest route.

- A computer program that simulates different outcomes to optimize supply chain logistics.

Pair 14

- Statement: “This is the second time Sam got caught bullying. She has always treated her classmates with respect and kindness, however, her parents have recently gone through a messy divorce. Sam isn’t a bad kid, she’s just acting out because of the stress she feels at home.”

- Reasoning Method: Narrative Reasoning

- Confidence Score: 90%

- Explanation: The explanation is structured like a story—providing background, context, and a progression that helps explain Sam’s behavior. This narrative form of reasoning builds meaning through storytelling.

- Real–World Examples:

- Explaining a drop in an employee’s performance by relating it to personal hardships.

- Understanding a student’s academic struggles through the story of their home life challenges.

Pair 15

- Statement: “Effective therapy is about more than learning to deal with your problems – you must understand their root causes, and to do so, you must be willing to honestly reflect on and explore all the life experiences that have made you who you are today.”

- Reasoning Method: Systems Reasoning

- Confidence Score: 90%

- Explanation: This statement emphasizes understanding problems by examining the entire “system” of life experiences and their interrelations. It’s about seeing the whole picture rather than isolated symptoms, which is central to systems reasoning.

- Real–World Examples:

- A business analyst mapping out how different departments interact to influence overall performance.

- An ecologist studying an ecosystem by considering the interactions among various species and environmental factors.

Pair 16

- Statement: “Maybe the best way to engineer machine intelligence isn’t by recreating human intelligence but by synthesizing plant and animal intelligence.”

- Reasoning Method: Lateral Thinking

- Confidence Score: 95%

- Explanation: The suggestion to blend plant and animal intelligence—rather than follow the conventional path of imitating human thought—is an example of lateral thinking, which involves looking at problems from novel, nontraditional angles.

- Real–World Examples:

- An inventor using biomimicry to design a new type of adhesive inspired by gecko feet.

- A product designer combining principles from nature and technology to create innovative sustainable materials.

Each of these 16 pairings uses a unique reasoning method from Group 1 (leaving 9 methods unpaired) and illustrates its application in the corresponding statement from Group 2.

Prompt 2: Response (thought for 52 seconds)

Below is my proposal for a novel reasoning method called Dynamic Integrative Reasoning (DIR). This method synthesizes four reasoning methods from Group One—Bayesian Reasoning, Systems Reasoning, Lateral Thinking, and Abductive Reasoning—to provide a framework that can be applied across both hard (technical) and soft (human-centered) problem domains.

Overview of Dynamic Integrative Reasoning (DIR)

- Bayesian Reasoning offers a structured way to update prior beliefs with new evidence.

- Systems Reasoning ensures that problems are viewed holistically—acknowledging the interdependencies and feedback loops inherent in complex systems.

- Lateral Thinking brings a creative, non-linear perspective that challenges conventional approaches.

- Abductive Reasoning enables us to form the best explanations from incomplete or ambiguous data.

DIR integrates these components by first establishing a probabilistic baseline (Bayesian), then mapping the problem within its broader interconnected context (Systems), exploring unconventional avenues and reframing assumptions (Lateral), and finally inferring the most plausible explanation given the data available (Abductive). This layered approach is non-intuitive because it requires one to juggle several distinct reasoning strategies simultaneously rather than applying them in isolation—but it remains comprehensible because each component has a clear, familiar function in everyday decision-making.

Moderately Detailed Examples

- Technical Problem: Diagnosing a Network Outage

- Bayesian Aspect: Start with historical failure rates to assign probabilities to various causes (hardware, software, configuration errors).

- Systems Aspect: Consider how the network interacts with servers, user devices, and external services to understand cascading effects.

- Lateral Aspect: Look beyond conventional causes—perhaps an external environmental factor (e.g., temperature affecting cooling systems) is at play.

- Abductive Aspect: Based on the gathered evidence and creative hypotheses, infer that a rarely considered factor (like a simultaneous software update conflict) is the best explanation for the outage.

- Social Policy Issue: Reducing Urban Homelessness

- Bayesian Aspect: Use prior data on the effectiveness of housing programs to estimate the impact of potential interventions.

- Systems Aspect: Map out the interplay among economic factors, mental health services, and community support systems.

- Lateral Aspect: Propose innovative, cross-sector solutions—such as a hybrid model of temporary housing combined with vocational training—that break away from traditional single-focus approaches.

- Abductive Aspect: Conclude that the most plausible explanation for success in a pilot program is the integrated support model rather than isolated efforts, guiding policy adjustments accordingly.

- Everyday Problem: Planning an Optimized Road Trip

- Bayesian Aspect: Update your travel plans based on real-time traffic data and past experiences with similar routes.

- Systems Aspect: Consider how factors like weather, fuel stops, and local events interact to affect your journey.

- Lateral Aspect: Think creatively by including scenic detours or unexpected pit stops that might enrich the experience, rather than sticking strictly to the fastest route.

- Abductive Aspect: After evaluating the trade-offs, infer that a slightly longer route with fewer stops and more predictable conditions offers the best overall experience.
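Editorial illustration (not part of the model's response): the Bayesian aspect of the network outage example above can be sketched as a single Bayes' rule update over candidate causes. This is a minimal sketch under stated assumptions. The prior and likelihood values are hypothetical placeholders for historical failure rates, and the function name `bayes_update` is ours, not something the model proposed.

```python
def bayes_update(priors, likelihoods):
    """Return P(cause | evidence) given P(cause) and P(evidence | cause)."""
    # Multiply each prior by the likelihood of the evidence under that cause.
    unnormalized = {cause: priors[cause] * likelihoods[cause] for cause in priors}
    # Normalize so the posterior probabilities sum to 1.
    total = sum(unnormalized.values())
    return {cause: value / total for cause, value in unnormalized.items()}


# Hypothetical priors standing in for historical failure rates.
priors = {"hardware": 0.5, "software": 0.3, "configuration": 0.2}

# Hypothetical likelihoods of the evidence
# "the outage began right after a deployment" under each cause.
likelihoods = {"hardware": 0.1, "software": 0.6, "configuration": 0.7}

posterior = bayes_update(priors, likelihoods)
most_probable_cause = max(posterior, key=posterior.get)

for cause, probability in posterior.items():
    print(f"{cause}: {probability:.3f}")
print("Most probable cause:", most_probable_cause)
```

With these made-up numbers, the evidence shifts belief away from hardware, which had the highest prior, and toward software. The abductive step in the example would then treat the highest-posterior cause as a candidate explanation to be checked against the systems and lateral considerations, not as a final answer.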

Justification for the Chosen Synthesis

- Combining Bayesian and Abductive Reasoning provides a strong foundation for handling uncertainty: Bayesian methods quantitatively update our beliefs, while abductive reasoning helps select the most plausible explanation when data is incomplete.

- Incorporating Systems Reasoning ensures that our analysis does not ignore the broader context and interdependencies among factors, which is critical for both technical systems (like network infrastructures) and social systems (like urban planning).

- Adding Lateral Thinking introduces a creative dimension, encouraging us to question assumptions and explore nontraditional solutions—this is particularly important when conventional methods do not yield satisfactory answers.

This synthesis was chosen to develop a method that not only quantifies and updates beliefs (Bayesian) and recognizes the complexity of real-world systems (Systems) but also innovates and hypothesizes beyond standard frameworks (Lateral and Abductive). The result is a dynamic and adaptable approach that bridges both soft and hard problem domains.

Critical Reflection on DIR

- Key Weaknesses:

- Cognitive Overload: Integrating multiple reasoning strategies simultaneously may overwhelm practitioners, particularly if they are more accustomed to applying a single method.

- Conflict Resolution: The methods may occasionally yield conflicting recommendations, requiring careful arbitration and weighting of each component’s output.

- Formalization Challenge: Quantifying how much emphasis to place on each component is not straightforward and may lead to subjective biases or decision paralysis.

- Potential Logic Gaps:

- Integration Consistency: Ensuring that Bayesian updates, systemic mapping, creative hypothesis generation, and abductive inferences converge coherently is nontrivial.

- Edge Cases: In highly ambiguous or data-scarce scenarios, the method may struggle to balance creative insights with probabilistic rigor, leading to less reliable conclusions.

Despite these concerns, DIR is designed to be both robust and flexible. It can be generalized across a range of problems—from troubleshooting technical issues to addressing complex social challenges—making it a powerful tool for decision-makers who must navigate uncertainty and complexity.

Why Pursue the Optional Component?

I chose to pursue this synthesis because combining reasoning methods not only deepens our understanding of each individual approach but also demonstrates how a hybrid method can provide comprehensive solutions in diverse contexts. This integrative approach challenges the intuition that “one size fits all” in problem solving. Although non-intuitive—because it requires juggling distinct strategies simultaneously—it remains accessible by breaking down each component into familiar, real-world actions. This versatility across both soft and hard problems makes Dynamic Integrative Reasoning (DIR) a compelling addition to our reasoning toolkit.

Grok 3 (“Think” mode)

Prompt 1: Response (thought for 36 seconds)

Below is the complete solution to the task of matching 16 statements from Group Two to 16 reasoning methods from Group One, ensuring each statement and reasoning method is used exactly once. For each pairing, I provide a confidence score, the logic behind the pairing, any doubts or concerns, and two real-world examples illustrating the reasoning method in action. The remaining 9 reasoning methods from Group One are listed at the end.

Pairings of Statements and Reasoning Methods

Pair 1: Statement 1 – Inductive Reasoning

- Statement: “There’s a long line outside the food truck – it must be good.”

- Reasoning Method: Inductive Reasoning

- Confidence Score: 90%

- Logic: This statement involves observing a specific instance (a long line) and making a general conclusion (the food truck is good). Inductive reasoning builds generalizations from specific observations, fitting this scenario well.

- Doubts/Concerns: It could be interpreted as abductive reasoning (inferring the best explanation), but inductive reasoning aligns more directly with generalizing from an observation without requiring the conclusion to be the only explanation.

- Examples:

- Observing that the sun has risen every morning and concluding it will rise tomorrow.

- Noting that several friends enjoyed a movie and concluding it’s probably a good film.

Pair 2: Statement 2 – Deductive Reasoning

- Statement: “Despite intense ongoing research efforts, today, there is no conclusive evidence to suggest that intelligent life exists elsewhere in the universe. Tomorrow will not reveal anything different.”

- Reasoning Method: Deductive Reasoning

- Confidence Score: 85%

- Logic: The statement starts with a general premise (no evidence exists today despite research) and draws a specific conclusion (tomorrow won’t change that), assuming the premise implies the outcome. This is characteristic of deductive reasoning.

- Doubts/Concerns: It might involve probabilistic reasoning due to uncertainty about the future, but the structure suggests a deductive leap from a premise to a conclusion.

- Examples:

- All birds have feathers; a sparrow is a bird; therefore, a sparrow has feathers.

- If a store is closed on Sundays, and today is Sunday, then the store is closed today.

Pair 3: Statement 3 – Intuitive Reasoning

- Statement: “An experienced firefighter suddenly orders everyone to evacuate a burning building.”

- Reasoning Method: Intuitive Reasoning

- Confidence Score: 80%

- Logic: The firefighter’s sudden decision likely stems from an instinct or gut feeling honed by experience, rather than explicit analysis, which aligns with intuitive reasoning.

- Doubts/Concerns: It could be heuristic reasoning (a shortcut based on experience), but intuitive reasoning emphasizes the instinctual aspect more strongly.

- Examples:

- A chef adjusting a recipe based on a hunch about the flavors.

- A driver swerving to avoid an obstacle without consciously analyzing the situation.

Pair 4: Statement 4 – Means-End Analysis

- Statement: “She positioned her bed next to the light switch so that she wouldn’t have to get out of bed to turn off the lights before going to sleep every night.”

- Reasoning Method: Means-End Analysis

- Confidence Score: 95%

- Logic: This involves identifying a goal (not getting out of bed) and working backward to find a solution (moving the bed), a hallmark of means-end analysis.

- Doubts/Concerns: Spatial reasoning could apply due to the physical arrangement, but means-end analysis captures the problem-solving process more precisely.

- Examples:

- Planning a budget by determining savings goals and adjusting expenses.

- Assembling furniture by figuring out steps to reach the final product.

Pair 5: Statement 5 – Moral Reasoning

- Statement: “Democracy requires protected speech with narrowly-defined restrictions on speech that directly incites violence.”

- Reasoning Method: Moral Reasoning

- Confidence Score: 75%

- Logic: This statement evaluates what democracy should entail based on ethical principles (free speech vs. public safety), which fits moral reasoning.

- Doubts/Concerns: Critical reasoning could also apply due to the evaluation of principles, but moral reasoning focuses on the ethical judgment.

- Examples:

- Deciding whether to report a coworker’s minor theft based on fairness.

- Debating the morality of capital punishment in a justice system.

Pair 6: Statement 6 – Counterfactual Reasoning

- Statement: “Thanks to Darwin, we know that humans are a product of natural evolution. Had Darwin not developed his theory, we might still be operating under the belief that humans are a product of divine creation.”

- Reasoning Method: Counterfactual Reasoning

- Confidence Score: 95%

- Logic: This explicitly imagines an alternative scenario (no Darwin) and its consequences, which is the essence of counterfactual reasoning.

- Doubts/Concerns: None significant; the “had not” phrasing strongly indicates this method.

- Examples:

- Considering how life might differ if you had attended a different college.

- Speculating how World War II might have ended without the atomic bomb.

Pair 7: Statement 7 – Fuzzy Logic

- Statement: “To you, the shower might be ‘too hot,’ but to me, it’s ‘just right’.”

- Reasoning Method: Fuzzy Logic

- Confidence Score: 85%

- Logic: The statement deals with subjective, imprecise terms (“too hot,” “just right”), which fuzzy logic handles by allowing for degrees of truth rather than binary categories.

- Doubts/Concerns: It could reflect subjective perception rather than formal reasoning, but fuzzy logic fits the handling of vagueness.

- Examples:

- Setting a washing machine to “medium load” based on an approximate size.

- Describing someone as “fairly tall” instead of giving an exact height.
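Editorial illustration (not part of the model's response): the idea of "degrees of truth" in the shower statement can be sketched with simple fuzzy membership functions. This is a minimal sketch under stated assumptions. The temperature thresholds (in degrees Celsius) and the helper names `triangular`, `ramp_up`, and `describe` are hypothetical choices made for illustration only.

```python
def triangular(x, left, peak, right):
    """Membership in [0, 1] for a triangular fuzzy set peaking at `peak`."""
    if x <= left or x >= right:
        return 0.0
    if x <= peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)


def ramp_up(x, start, end):
    """Membership that rises linearly from 0 at `start` to 1 at `end`."""
    if x <= start:
        return 0.0
    if x >= end:
        return 1.0
    return (x - start) / (end - start)


def describe(temp_c, just_right, too_hot):
    """Degrees to which a temperature is 'just right' and 'too hot' for one person."""
    return {
        "just right": triangular(temp_c, *just_right),
        "too hot": ramp_up(temp_c, *too_hot),
    }


# Two people with different (hypothetical) preferences, same 41-degree shower.
you = describe(41.0, just_right=(34, 37, 40), too_hot=(38, 42))
me = describe(41.0, just_right=(38, 41, 44), too_hot=(42, 46))

print("you:", you)
print("me:", me)
```

Under these assumed thresholds, the same water temperature is mostly "too hot" for one person and fully "just right" for the other, so neither label is simply true or false.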

Pair 8: Statement 8 – Abductive Reasoning

- Statement: “Every time the dog is let off the leash, he runs toward the same man. The man is likely his owner.”

- Reasoning Method: Abductive Reasoning

- Confidence Score: 90%

- Logic: This infers the most likely explanation (the man is the owner) for an observation (the dog’s behavior), typical of abductive reasoning.