AI Accountability

AI accountability refers to the responsibility of organizations, developers, and stakeholders to ensure that AI systems operate fairly, ethically, and transparently. It involves defining clear roles and responsibilities, setting guidelines for AI decision-making, ensuring compliance with regulations, and implementing mechanisms to address unintended consequences.

Accountability means that individuals and organizations are held responsible for the outputs and impacts of AI systems, with proper oversight and a way to address negative consequences. It is a fundamental part of fairness, accountability, transparency, and ethics in AI (FATE AI), which promotes trustworthy AI deployment across industries.

Why Is AI Accountability Important?

AI is increasingly used in consequential domains such as hiring, lending, healthcare, and law enforcement. Without AI accountability, these systems could make biased, unethical, or even harmful decisions with little oversight. Key benefits of accountability in AI include:

- Ensuring Fairness: Accountability pushes organizations to verify that AI systems treat all individuals equally and without bias.

- Reducing Risks: While accountability in AI does not guarantee that unintended harm or biases will be prevented, it does ensure that when such issues arise, there are clear mechanisms to hold the responsible parties accountable and address negative impacts.

- Building Public Trust: Ethical AI systems increase confidence in AI-powered applications.

- Supporting Compliance: Accountability practices help organizations meet the requirements that regulations place on AI systems.

Principles of AI Accountability

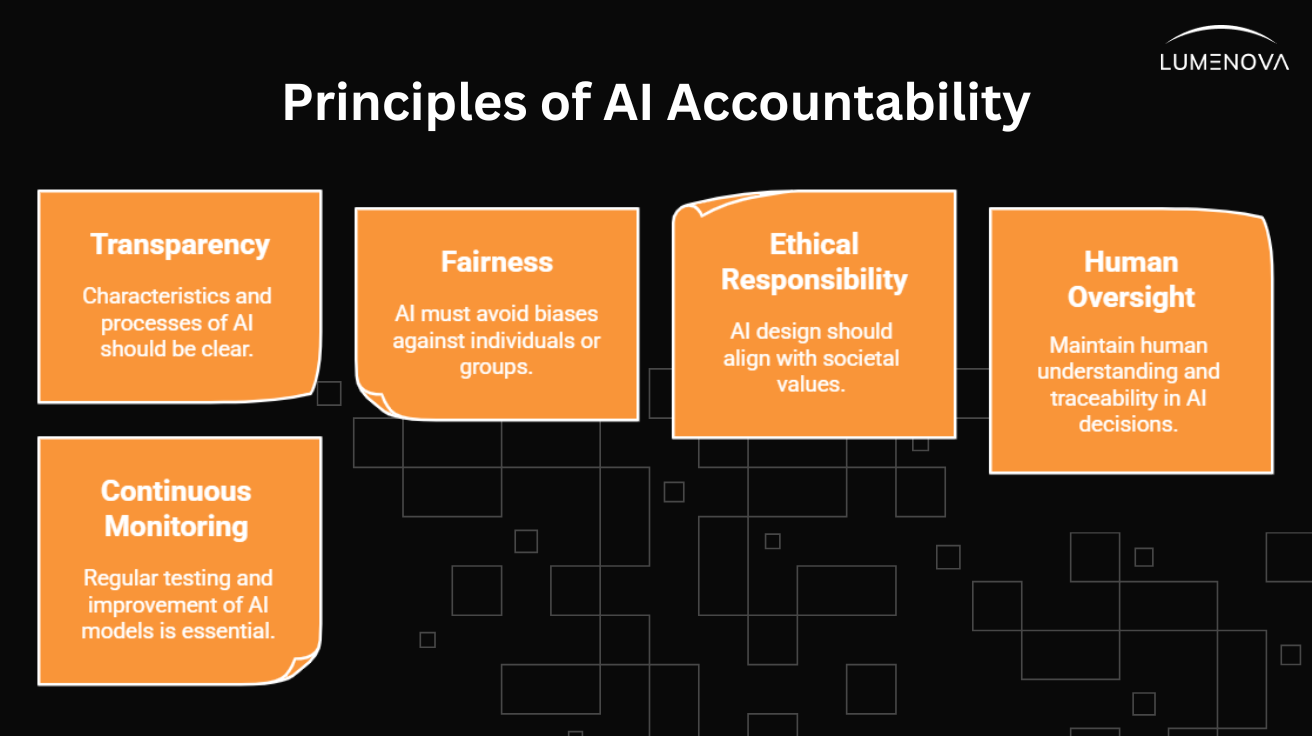

To ensure accountability in AI, organizations should follow these key principles:

- Transparency – The characteristics and processes of AI systems should be accessible and understandable.

- Fairness – AI models must avoid perpetuating biases that discriminate against individuals or groups.

- Ethical Responsibility – Developers must design AI that aligns with societal values and regulations.

- Human Oversight – While AI may replace human decision-making in certain contexts, it is essential to maintain oversight by ensuring decisions and their impacts are understandable, traceable, and aligned with a system’s intended purpose and use.

- Continuous Monitoring – AI models must be regularly tested and improved to mitigate unintended consequences.
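
One way to make decisions traceable, as the Human Oversight principle asks, is to record every automated decision with enough context to review it later. The following is a minimal sketch under stated assumptions, not a reference implementation: the `DecisionRecord` fields, the `log_decision` helper, and the example model name and owner are all hypothetical, and a real system would write to durable, access-controlled storage rather than an in-memory list.

```python
import hashlib
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass(frozen=True)
class DecisionRecord:
    """One automated decision, stored so a reviewer can trace it later."""
    model_version: str   # which model produced the decision
    input_hash: str      # fingerprint of the inputs, not the raw data
    decision: str        # the outcome returned by the system
    owner: str           # team or person accountable for this system
    timestamp: str       # when the decision was made (UTC, ISO 8601)


def log_decision(model_version, inputs, decision, owner, sink):
    """Append a traceable record of a decision to `sink` (a list here)."""
    # Hash a canonical JSON form so identical inputs give identical hashes.
    canonical = json.dumps(inputs, sort_keys=True).encode("utf-8")
    record = DecisionRecord(
        model_version=model_version,
        input_hash=hashlib.sha256(canonical).hexdigest(),
        decision=decision,
        owner=owner,
        timestamp=datetime.now(timezone.utc).isoformat(),
    )
    sink.append(asdict(record))
    return record


# Hypothetical example values, for illustration only.
audit_log = []
rec = log_decision("credit-model-1.2", {"income": 52000, "age": 41},
                   "declined", "risk-team", audit_log)
print(json.dumps(audit_log[0], indent=2))
```

Storing a hash of the inputs instead of the inputs themselves is one possible design choice: it lets a reviewer confirm which inputs led to a decision without keeping personal data in the log.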

How to Implement AI Accountability

Organizations can build AI accountability through several strategies:

- Explainability and Transparency: Design AI systems to provide insight into how decisions are made, so that those decisions can be understood and audited.

- Bias Audits: Regularly evaluate AI models for fairness to prevent discriminatory outcomes.

- Compliance Measures: Align AI systems with industry regulations and ethical standards.

- Ethical AI Governance: Establish governance frameworks that prioritize accountability at every stage of AI development, deployment, and integration.

- Public and Regulatory Reporting: Maintain documentation and make AI decision-making processes accessible to stakeholders.
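
As an illustration of the bias audit strategy above, the sketch below compares how often each group receives a favorable outcome and reports the ratio of the lowest rate to the highest. It is only one of many possible fairness checks, and everything in it is assumed for the example: the function names, the made-up sample data, and the 0.8 review threshold, which is loosely based on the "four-fifths" rule of thumb used in US employment guidance.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the share of favorable outcomes per group.

    `decisions` is an iterable of (group, favorable) pairs, where
    `favorable` is True when the model gave the positive outcome.
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, is_favorable in decisions:
        totals[group] += 1
        if is_favorable:
            favorable[group] += 1
    return {g: favorable[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest group selection rate to the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable outcome
    return min(rates.values()) / highest


# Made-up audit sample: (group, was the outcome favorable?)
sample = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)

rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
REVIEW_THRESHOLD = 0.8  # assumed cutoff for flagging a model for review
flagged = ratio < REVIEW_THRESHOLD
print(rates, round(ratio, 2), "needs review" if flagged else "ok")
```

A low ratio does not prove discrimination on its own. It is a signal that the accountable team should investigate the model and its training data, and document the findings.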

Frequently Asked Questions

AI accountability encourages businesses to use AI ethically, which helps them avoid legal risks, reputational damage, and biased decision-making.

By holding developers, companies, and users responsible for the design, deployment, and use of AI systems, accountability in AI helps reduce discrimination and bias, fostering fair treatment of users and others affected by the system’s outputs.

Laws such as the EU AI Act and GDPR, along with industry-specific guidelines, set requirements for AI systems to be explainable, fair, and transparent.

Companies can enforce fairness, accountability, transparency, and ethics in AI by using explainable AI models, bias detection tools, impact assessments, and governance frameworks.

Transparency makes AI models understandable and auditable, which helps prevent harmful or unethical AI decisions.