AI Bias

AI bias (also known as algorithmic bias, or bias in AI) occurs when AI systems produce unfair, inaccurate, or discriminatory outcomes due to biases in the data, algorithms, or model design. These biases can unintentionally favor certain groups or data characteristics, leading to ethical concerns and real-world consequences. Algorithmic bias in the narrower sense used below is one of the most common forms: the system internalizes logic that reflects hidden patterns or errors contained in its training data.

Examples of bias in AI range from age and gender discrimination in hiring to unfair loan denials rooted in biased interpretations of credit history. These examples highlight the importance of addressing bias in AI models to ensure equitable and ethical AI use.

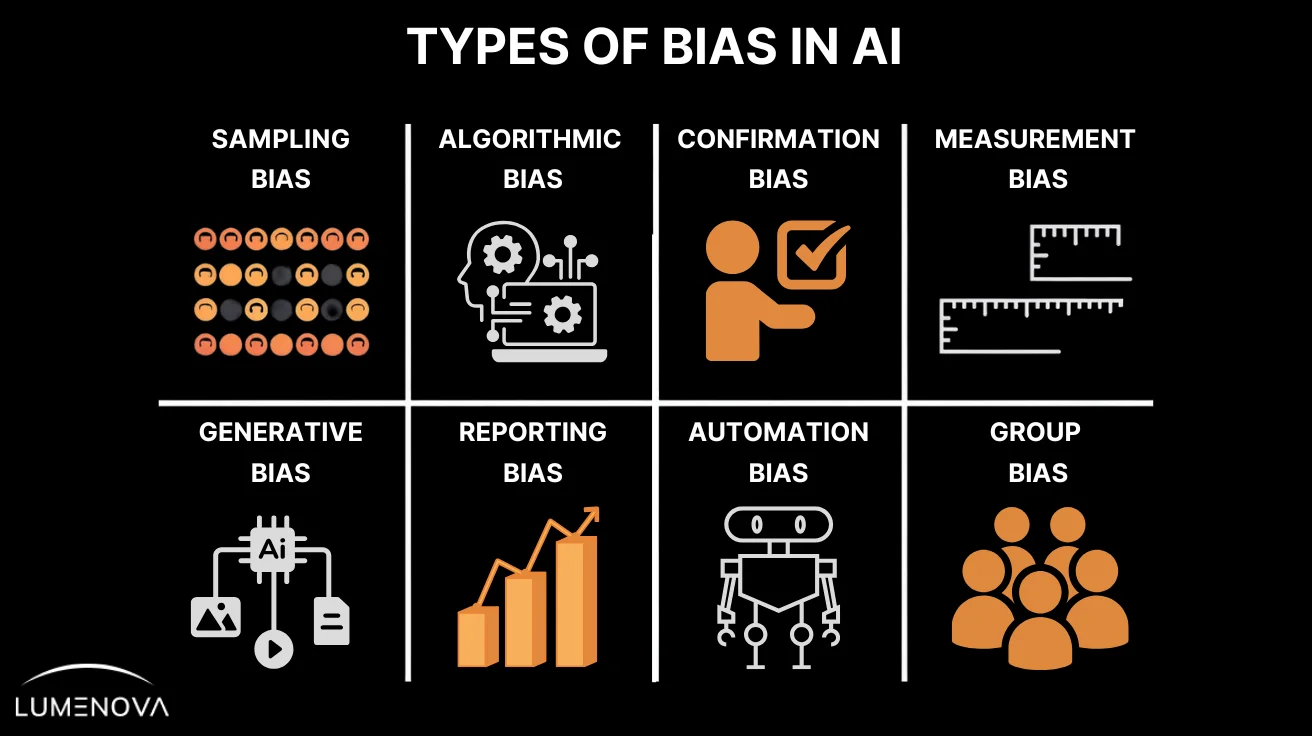

Types of Bias in AI

There are various types of bias in AI, including:

1. Sampling Bias

Sampling bias occurs when the dataset used to train an AI model is not representative of the full population it’s meant to serve, leading to skewed results.

If the data used to train a system predominantly reflects one group over others, the AI’s predictions or actions will favor that group, potentially excluding or misrepresenting others. For example, facial recognition systems trained mostly on light-skinned individuals may fail to recognize darker-skinned individuals with the same level of accuracy. To ensure fairness and accuracy, the data collection process must be inclusive and representative of all demographic groups.
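One way to catch sampling bias before training is to compare each group’s share of the training set with its share of the population the model will serve. The sketch below assumes you already have a group label for every example and trustworthy reference shares; the function name `representation_gaps` and all numbers are hypothetical.

```python
from collections import Counter


def representation_gaps(sample_groups, population_shares):
    """Return observed share minus expected share for each group.

    sample_groups: one group label per training example.
    population_shares: group -> expected share in the target population
    (assumed to come from a trustworthy reference such as census data).
    """
    counts = Counter(sample_groups)
    total = len(sample_groups)
    return {
        group: counts.get(group, 0) / total - expected
        for group, expected in population_shares.items()
    }


# Hypothetical face dataset: 80% lighter-skinned, 20% darker-skinned
training_groups = ["lighter"] * 80 + ["darker"] * 20
# Hypothetical reference shares for the population the system will serve
population = {"lighter": 0.5, "darker": 0.5}

for group, gap in representation_gaps(training_groups, population).items():
    print(f"{group}: {gap:+.2f}")  # negative = underrepresented
```

A large negative gap (here, darker-skinned faces sit 30 points below their population share) flags a group the model will see too rarely to learn well.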

2. Algorithmic Bias

Algorithmic bias arises when an AI system prioritizes certain attributes or patterns in its decision-making process, often due to limitations in the training data or algorithm design.

In many cases, AI models tend to repeat historical biases that have been encoded into the data. For instance, if a hiring algorithm is trained on resumes that predominantly feature male candidates, it will likely favor male applicants, reinforcing gender biases. Addressing this requires careful attention to data representativeness and algorithmic design.
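A simple way to see whether a screening model reproduces a historical skew is to compare selection rates across groups. The sketch below computes each group’s selection rate and the ratio of the lowest rate to the highest; a ratio below 0.8 corresponds to the four-fifths rule of thumb that US employment guidelines use as evidence of adverse impact. The data and function names are hypothetical, and the code assumes at least one group has a nonzero selection rate.

```python
def selection_rates(decisions, groups):
    """Share of positive decisions (1 = advanced to interview) per group."""
    totals, positives = {}, {}
    for decision, group in zip(decisions, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + decision
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest (assumes max > 0)."""
    return min(rates.values()) / max(rates.values())


# Hypothetical outputs of a resume-screening model
decisions = [1, 1, 1, 0, 1, 1, 0, 0, 1, 0, 0, 0]
groups = ["male"] * 6 + ["female"] * 6

rates = selection_rates(decisions, groups)
ratio = disparate_impact_ratio(rates)
print(rates)
print(f"disparate impact ratio: {ratio:.2f}")  # below 0.8 suggests adverse impact
```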

3. Confirmation Bias

Confirmation bias in AI occurs when a system amplifies pre-existing biases in the data or its creators, reinforcing patterns that align with its prior assumptions.

AI models may inadvertently exhibit the biases present in their training data or held by their designers. For instance, if an AI system is designed by an all-male team, the team might make implicit assumptions about its algorithmic structure and processes that ultimately disfavor female users. Because the system keeps reinforcing the patterns it already favors, such scenarios can also stifle innovation and leave it unable to adapt to emerging trends and regulations.

4. Measurement Bias

Measurement bias occurs when the data used to train an AI model is inaccurately captured, often overrepresenting or underrepresenting certain populations or scenarios.

If AI systems rely on datasets that fail to capture the full scope of a population (such as surveys that focus solely on urban areas), their results and predictions may not reflect real-world conditions. The distortion is most damaging once the system is deployed and its outputs drive real decisions. Ensuring comprehensive and accurate data collection is critical to avoid this problem.

5. Generative Bias

Generative bias occurs when generative AI models create content that is unbalanced or misrepresentative because of biased training data.

When an AI model generates content, such as text or images, based on its training data, it can inadvertently propagate biases. For example, a generative model trained primarily on Western literature may produce content that overlooks other cultural perspectives. This bias is a significant issue when the AI’s output is meant to represent diverse viewpoints. A more inclusive training dataset is necessary to ensure that AI produces balanced and fair content.

6. Reporting Bias

Reporting bias happens when the frequency or nature of events represented in a training dataset doesn’t align with the real-world occurrence of those events.

If an AI model is trained on data that over-represents certain types of outcomes or behaviors (such as overly positive product reviews), it will fail to produce a realistic understanding of sentiment or trends. For instance, in sentiment analysis, if training data includes disproportionately positive reviews, the AI may erroneously conclude that customers are overwhelmingly satisfied, leading to inaccurate insights. Properly balanced and representative data is key to avoiding this bias.
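Before training a sentiment model, it is worth checking the label distribution. One common, partial correction, sketched below, weights each class by n_samples / (n_classes * class_count), the same formula scikit-learn uses for `class_weight="balanced"`. This only rebalances the labels you already have; it cannot recover the unhappy customers who never wrote a review. The dataset and function name are hypothetical.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count).

    Rare classes get proportionally larger weights, so every class
    contributes the same total weight during training.
    """
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {label: n_samples / (n_classes * count) for label, count in counts.items()}


# Hypothetical review corpus where happy customers post far more often
labels = ["positive"] * 90 + ["negative"] * 10

print(Counter(labels))  # exposes the 90/10 skew
weights = balanced_class_weights(labels)
print(weights)
```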

7. Automation Bias

Automation bias refers to the tendency to favor decisions made by automated systems over human judgment, even when the system’s accuracy and/or reliability are questionable.

When AI systems make decisions in areas like medical diagnostics or product inspection, humans may blindly trust the AI’s judgment over their own, even when the system is wrong. For example, in defect detection, an automated inspection system may miss subtle issues that a human could easily spot. Over-reliance on automation can cause such critical errors to be overlooked. A careful balance between human oversight and automated decision-making is crucial to mitigate this risk.
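One common safeguard is to let the system decide automatically only when it is confident and to route uncertain cases to a person. The sketch below assumes the inspection model outputs a defect probability; the `Inspection` type, the `route` function, and the 0.2/0.8 thresholds are hypothetical and would need tuning for a real application.

```python
from dataclasses import dataclass


@dataclass
class Inspection:
    item_id: str
    defect_prob: float  # model's estimated probability that the item is defective


def route(inspections, low=0.2, high=0.8):
    """Auto-decide only confident cases; send the uncertain middle band to a human."""
    auto_pass, auto_reject, human_review = [], [], []
    for ins in inspections:
        if ins.defect_prob <= low:
            auto_pass.append(ins.item_id)
        elif ins.defect_prob >= high:
            auto_reject.append(ins.item_id)
        else:
            human_review.append(ins.item_id)
    return auto_pass, auto_reject, human_review


batch = [Inspection("A1", 0.05), Inspection("A2", 0.55), Inspection("A3", 0.93)]
print(route(batch))
```

Confidence thresholds do not catch cases where the model is confidently wrong, so a random sample of automatic decisions should still be spot-checked by humans.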

8. Group Attribution Bias

Group attribution bias occurs when an AI system assumes that individuals within a group share the same characteristics or behaviors, leading to generalized decision-making.

This bias can manifest when an AI assumes that members of a certain group (based on gender, race, or other demographic factors) share similar traits or behaviors. For instance, an AI might assume that all women in a specific professional role share the same qualities, ignoring individual differences. This can lead to unfair judgments and the perpetuation of stereotypes. To prevent this, AI systems must be designed to account for the individuality of each person rather than primarily relying on group-based assumptions.

Examples of Bias in AI Systems

Bias in AI and ML can lead to serious consequences:

- AI in Hiring: Gender bias in ML models may prioritize male applicants based on historical hiring trends.

- Healthcare AI: Data bias in ML models might cause diagnostic tools to underperform for underrepresented groups.

- Financial Services: AI bias in financial services can result in credit scoring models unfairly denying loans to minority applicants.

These examples of bias in AI illustrate the importance of addressing bias in AI systems to promote fairness and accountability.

Causes of Bias in AI

The root of bias in AI algorithms often lies in the data and processes used to train them:

Biased Datasets

Historical data may contain inherent biases, such as racial or gender bias, which are then learned and reproduced by AI systems.

Lack of Diversity in Training Data

Datasets that fail to represent diverse populations can introduce machine learning bias and lead to unfair outputs.

Human Bias in AI

Developers’ assumptions or societal biases can inadvertently influence the design of AI models.

Feedback Loops

AI systems that use feedback from real-world applications can reinforce existing biases, creating a vicious cycle of biased outcomes.

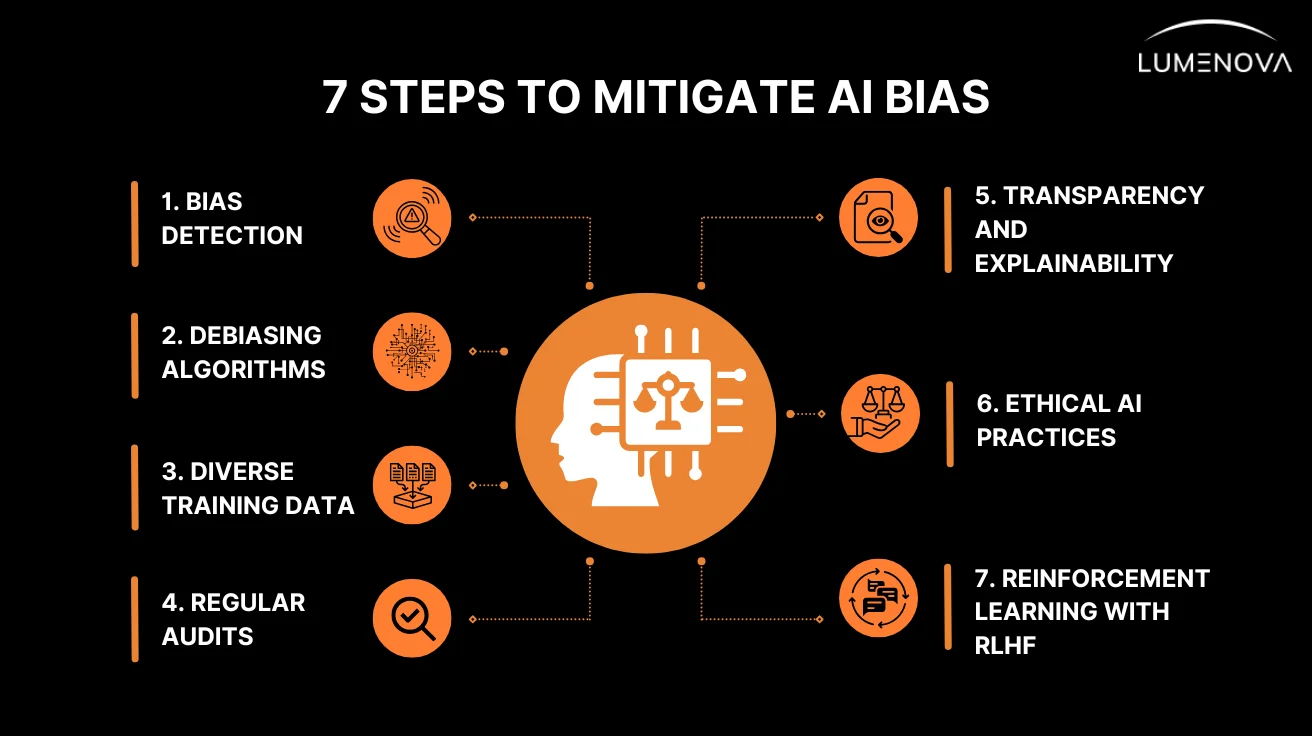

How to Mitigate AI Bias

Addressing bias in AI models is critical for creating equitable AI systems. Here are some effective bias mitigation techniques:

1. Bias Detection in Machine Learning

Start by thoroughly identifying biases in both the data and the algorithms powering your AI systems. Bias detection tools help here, as does breaking model performance down by demographic group (for example, comparing accuracy, selection rates, or error rates across groups). Regularly analyze and audit your models to assess where fairness is lacking and which areas need immediate improvement. Detecting bias early is crucial to keeping AI systems aligned with ethical standards from the outset.
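A basic bias check breaks a classifier’s error rates down by group. The sketch below computes the true positive rate and false positive rate per group; a large gap in true positive rates (sometimes called the equal opportunity difference) means the model misses real positives more often for one group, as in the healthcare example earlier. The labels, predictions, group names, and function name are hypothetical.

```python
def group_error_rates(y_true, y_pred, groups):
    """Per-group true positive rate (TPR) and false positive rate (FPR)."""
    counts = {}
    for truth, pred, group in zip(y_true, y_pred, groups):
        c = counts.setdefault(group, {"tp": 0, "fn": 0, "fp": 0, "tn": 0})
        if truth == 1:
            c["tp" if pred == 1 else "fn"] += 1
        else:
            c["fp" if pred == 1 else "tn"] += 1

    rates = {}
    for group, c in counts.items():
        positives, negatives = c["tp"] + c["fn"], c["fp"] + c["tn"]
        rates[group] = {
            # NaN when a group has no actual positives/negatives to measure
            "tpr": c["tp"] / positives if positives else float("nan"),
            "fpr": c["fp"] / negatives if negatives else float("nan"),
        }
    return rates


# Hypothetical diagnostic-model results for two patient groups
y_true = [1, 1, 1, 1, 0, 0, 1, 1, 1, 1, 0, 0]
y_pred = [1, 1, 1, 1, 0, 0, 1, 0, 0, 1, 1, 0]
groups = ["A"] * 6 + ["B"] * 6

rates = group_error_rates(y_true, y_pred, groups)
tpr_gap = rates["A"]["tpr"] - rates["B"]["tpr"]
print(rates)
print(f"TPR gap (equal opportunity difference): {tpr_gap:.2f}")
```

Fairness libraries such as Fairlearn offer the same kind of group-wise breakdown for arbitrary metrics.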

2. Debiasing Algorithms

Once biases are detected, implement debiasing algorithms to adjust and recalibrate AI models. These algorithms help correct unfair patterns and reduce the impact of biased data on AI performance. By applying debiasing techniques, you can help ensure that your AI model generates more balanced and equitable outcomes, especially in sensitive applications like recruitment or law enforcement.
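As one concrete example of a debiasing technique, the sketch below implements reweighing, a preprocessing method described by Kamiran and Calders: each training example gets weight P(group) * P(label) / P(group, label), so that in the weighted data the label no longer depends on group membership. The hiring data and function names are hypothetical. The resulting weights could be passed to any learner that accepts per-sample weights (for example, the `sample_weight` argument of `fit` in many scikit-learn estimators). Reweighing does not remove bias carried by other features that act as proxies for group.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Per-example weight P(group) * P(label) / P(group, label).

    Cells that history made rare (e.g. hired women) get weights above 1,
    over-represented cells get weights below 1.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] / n) * (label_counts[y] / n) / (joint_counts[(g, y)] / n)
        for g, y in zip(groups, labels)
    ]


# Hypothetical historical hiring data (1 = hired): 75% of men hired, 25% of women
groups = ["male"] * 4 + ["female"] * 4
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweighing_weights(groups, labels)


def weighted_hire_rate(group):
    """Hire rate for one group after applying the reweighing weights."""
    hired = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == 1)
    total = sum(w for g, w in zip(groups, weights) if g == group)
    return hired / total


print(weights)
print(weighted_hire_rate("male"), weighted_hire_rate("female"))  # both about 0.5
```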

3. Diverse Training Data

One of the most effective ways to mitigate AI bias is to use diverse and representative training data. When gathering data for AI systems, it is essential that datasets encompass various demographics, cultural backgrounds, and social groups. The more inclusive your data is, the better equipped your AI system will be to make fair decisions across a wide range of users.

4. Regular Audits

AI models require ongoing monitoring to track and maintain fairness throughout their lifecycle. Implement a process for regular audits of your AI systems to check for any emerging biases. This continuous monitoring helps identify issues early on, before they can cause significant harm or spread unfair practices. Always be proactive about assessing your AI’s performance, as bias can often slip through unnoticed without regular checks.
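Part of a regular audit can be automated by recomputing a fairness metric for each monitoring window and flagging windows that cross a threshold. The sketch below assumes per-group selection rates are already logged per month; the window labels, rates, function name, and the 0.8 threshold are hypothetical choices.

```python
def audit_windows(windows, threshold=0.8):
    """Flag monitoring windows whose disparate impact ratio falls below threshold.

    windows: window label -> {group: selection rate observed in that window}.
    Returns (label, ratio) pairs for the flagged windows, in input order.
    """
    flagged = []
    for label, rates in windows.items():
        ratio = min(rates.values()) / max(rates.values())
        if ratio < threshold:
            flagged.append((label, round(ratio, 2)))
    return flagged


# Hypothetical production logs: the gap between groups widens over time
monthly = {
    "2024-01": {"group_a": 0.40, "group_b": 0.38},
    "2024-02": {"group_a": 0.42, "group_b": 0.35},
    "2024-03": {"group_a": 0.45, "group_b": 0.30},
}
print(audit_windows(monthly))
```

Here the gap widens gradually but only the third month crosses the 0.8 line, which is why tracking the trend, not just the threshold, helps catch drift earlier.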

5. Transparency and Explainability

Implement explainable AI techniques that make it easy to trace how decisions are made and identify where biases might have influenced outputs. Provide users with the ability to understand why certain decisions are being made, which can help identify relevant biases and foster greater trust in AI systems.
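For a linear scoring model, an explanation can be as simple as listing each feature’s contribution (weight times value) to the final score, which makes it easy to spot when a feature that may proxy for a protected attribute dominates a decision. The model, its weights, the function name, and the feature `zip_code_risk` are hypothetical.

```python
def explain_linear_score(weights, bias, features):
    """Split a linear model's score into per-feature contributions.

    Returns the total score and the contributions sorted by absolute size.
    """
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked


# Hypothetical credit model; zip_code_risk may act as a proxy for race or ethnicity
weights = {"income": 0.5, "debt_ratio": -1.2, "zip_code_risk": -2.0}
applicant = {"income": 1.1, "debt_ratio": 0.3, "zip_code_risk": 0.9}

score, ranked = explain_linear_score(weights, bias=0.2, features=applicant)
print(f"score: {score:.2f}")
for name, contribution in ranked:
    print(f"  {name}: {contribution:+.2f}")
```

For non-linear models, techniques such as permutation importance (`sklearn.inspection.permutation_importance`) or SHAP values serve a similar purpose.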

6. Ethical AI Practices

To ensure long-term fairness, build and adopt ethical AI frameworks and policies that guide the responsible development and deployment of AI. These frameworks should address how to handle biases, ensure accountability, and align AI with human values and ethical standards.

7. Reinforcement Learning from Human Feedback (RLHF)

Incorporate reinforcement learning from human feedback (RLHF) as a continuous improvement process. RLHF enables AI models to refine their decision-making by learning from human input, such as people’s rankings of alternative model outputs. Rather than relying solely on a fixed training dataset, this method allows AI to adapt to changing human preferences, ethical standards, and moral expectations over time.
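In RLHF, human preferences are usually captured by a reward model trained on pairs of responses where a person marked one as better. The sketch below shows only that pairwise loss, -log(sigmoid(r_chosen - r_rejected)), written in a numerically stable form; the reward values and function names are hypothetical, and the subsequent reinforcement learning step (often PPO) is not shown.

```python
import math


def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def preference_loss(reward_chosen, reward_rejected):
    """Pairwise reward-model loss: -log(sigmoid(r_chosen - r_rejected)).

    Small when the reward model already scores the human-preferred
    response higher; large when it prefers the rejected one.
    """
    return softplus(-(reward_chosen - reward_rejected))


# Hypothetical reward-model scores for two responses a human compared
print(preference_loss(2.0, 0.0))  # model agrees with the human: low loss
print(preference_loss(0.0, 2.0))  # model disagrees: high loss
```

Minimizing this loss over many human comparisons pushes the reward model to score responses the way people do, which is how shifting human judgments can feed back into the system.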

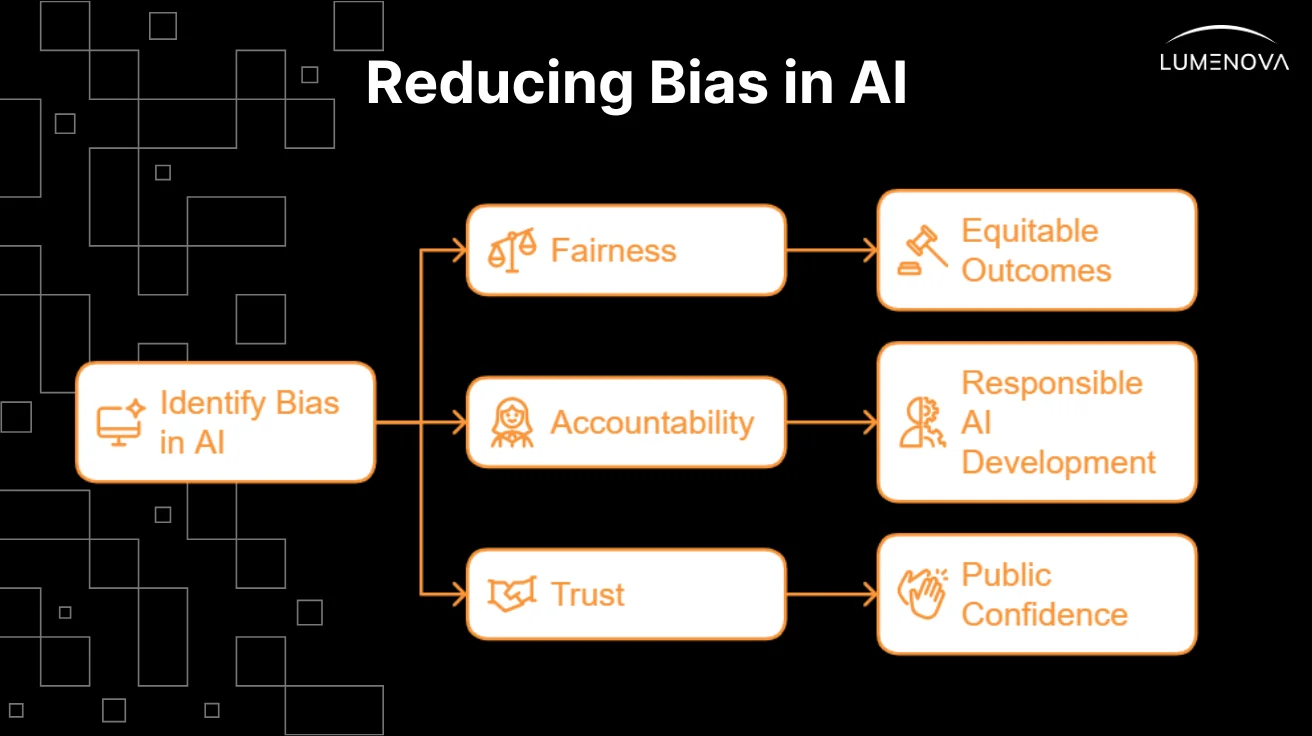

Why Is Tackling Bias Important?

Bias in AI can have real-world impacts, from denying opportunities to certain groups to reinforcing harmful stereotypes.

Reducing bias in AI algorithms is critical for:

- Fairness: Ensuring equitable outcomes for all individuals.

- Accountability: Holding AI developers and organizations responsible for the impacts of their models.

- Trust: Building public confidence in AI systems by demonstrating their fairness and accuracy.

Frequently Asked Questions

What is AI bias?

AI bias refers to unfair or discriminatory outcomes produced by AI systems due to biased data, algorithms, or design choices.

What causes bias in machine learning?

Bias in machine learning arises from unbalanced training data, flawed algorithms, or human assumptions influencing model development.

What are some examples of AI bias?

Examples of AI bias include gender discrimination in hiring models, biased facial recognition systems, and unfair loan denials in credit scoring.

How can bias in AI be mitigated?

Mitigating bias in AI involves using debiasing techniques, diverse datasets, and regular audits to identify and correct biases in AI systems.

Why is tackling bias in AI important?

Tackling bias in AI systems ensures fairness, reduces discrimination, and builds public trust in AI technologies.