AI Risk Assessment

AI risk assessment is the process of identifying, analyzing, and mitigating potential risks associated with AI systems. As AI becomes more integrated into industries like finance, healthcare, and cybersecurity, ensuring that AI models operate safely, reliably, fairly, and transparently is essential. Risk assessment in AI helps organizations prevent errors, biases, security threats, and regulatory violations before they cause widespread or unmanageable harm.

Why Is AI Risk Assessment Important?

AI models can influence critical decisions, from approving loans to diagnosing medical conditions. Without proper oversight, these systems may introduce risks such as bias, data privacy breaches, and unpredictable behavior. Key benefits of AI risk assessment include:

- Identifying and correcting biases in AI models to ensure fairness.

- Preventing AI-driven fraud and cyber threats.

- Monitoring systems for anomalies and emergent behaviors or objectives.

- Meeting legal and industry regulations for AI safety and transparency.

- Using ML-based risk analysis techniques to improve accuracy, reliability, and accountability.
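The first benefit, identifying bias, can start with something as simple as comparing outcomes across groups. The Python sketch below computes per-group approval rates and a disparate impact ratio (the lowest group's rate divided by the highest). The function names and sample decisions are hypothetical. The 0.8 threshold is the "four-fifths" rule of thumb from US employment-selection guidelines, used here only as an assumed trigger for review. A real fairness audit would combine several metrics with domain-specific thresholds.

```python
from collections import defaultdict


def approval_rates(decisions, groups):
    """Return the share of positive decisions (1 = approved) for each group."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        approvals[group] += decision
    return {g: approvals[g] / totals[g] for g in totals}


def disparate_impact_ratio(decisions, groups):
    """Lowest group approval rate divided by the highest (1.0 means parity)."""
    rates = approval_rates(decisions, groups)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved in any group, so the rates are equal
    return min(rates.values()) / highest


# Hypothetical model decisions for applicants in groups "A" and "B".
decisions = [1, 1, 1, 0, 1, 0, 0, 1, 0, 0]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

FOUR_FIFTHS = 0.8  # assumed review threshold (four-fifths rule of thumb)

ratio = disparate_impact_ratio(decisions, groups)
print(approval_rates(decisions, groups))  # {'A': 0.8, 'B': 0.2}
if ratio < FOUR_FIFTHS:
    print(f"Potential bias: disparate impact ratio = {ratio:.2f}")
```

A low ratio does not prove discrimination on its own. It flags the model for closer review during the risk identification step.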

How ML Enhances Risk Assessment

ML-based risk assessment uses algorithms that learn from historical data to detect patterns, predict risks, and automate assessments. This approach is widely used in:

- Financial Services: ML models help banks assess the credit risk of loan applicants and detect fraud.

- Healthcare: AI identifies medical risks and assists in early disease detection.

- Cybersecurity: ML models detect threats automatically and help block cyberattacks.

- Insurance: AI predicts policy risks and automates claims processing.
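Many of these applications rest on the same pattern-detection idea: learn what normal activity looks like, then flag large deviations. The minimal Python sketch below does this for transaction amounts using a z-score against a customer's history. It is a deliberately simple statistical stand-in rather than a trained ML model. The data, the function names, and the 3-standard-deviation threshold are hypothetical.

```python
import statistics


def fit_baseline(history):
    """Summarize normal activity as a mean and sample standard deviation."""
    return statistics.mean(history), statistics.stdev(history)


def flag_anomalies(values, mean, std, threshold=3.0):
    """Return (value, z_score) pairs whose absolute z-score exceeds threshold."""
    flagged = []
    for value in values:
        z = (value - mean) / std
        if abs(z) > threshold:
            flagged.append((value, round(z, 1)))
    return flagged


# Hypothetical past transaction amounts for a single customer.
history = [42.0, 38.5, 45.0, 40.0, 39.5, 44.0, 41.0, 43.5]
mean, std = fit_baseline(history)

# 40.0 and 47.0 fall within the normal range; only 950.0 is flagged.
new_transactions = [40.0, 47.0, 950.0]
print(flag_anomalies(new_transactions, mean, std))
```

Production fraud and intrusion-detection systems typically learn from many features at once, but the principle is the same.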

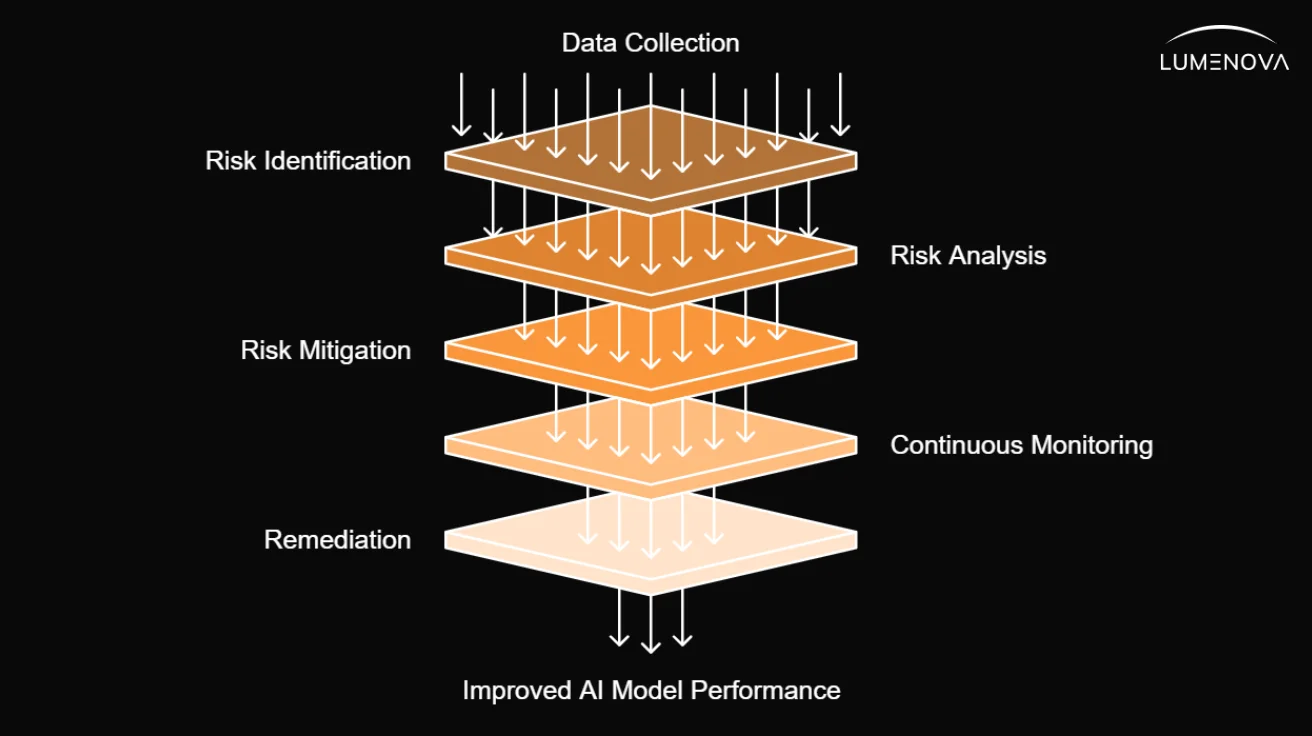

6 Key Steps

To implement effective ML-based risk assessment, organizations should follow these steps:

- Data Collection – Gathering diverse and unbiased data for training AI models.

- Risk Identification – Detecting potential biases, security vulnerabilities, and ethical concerns.

- Risk Analysis – Applying ML-based risk analysis techniques to estimate the likelihood and impact of unintended consequences.

- Risk Mitigation – Adjusting models to minimize risks and improve reliability.

- Continuous Monitoring – Regularly assessing AI models to ensure ongoing compliance and accuracy.

- Remediation – Fixing or modifying models based on the insights from the previous steps, so that identified vulnerabilities and biases are addressed and the models keep improving over time.
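The Risk Analysis step is often summarized as a likelihood × impact score, which then decides which risks move on to mitigation. The Python sketch below ranks a small, hypothetical risk register that way. The 1-to-5 scales, the example risks, and the mitigation threshold of 12 are assumptions. Organizations usually calibrate these to their own risk appetite.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (negligible) to 5 (severe)

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def prioritize(risks, mitigate_at=12):
    """Rank risks by score; flag those at or above the (assumed) threshold."""
    ranked = sorted(risks, key=lambda r: r.score, reverse=True)
    return [(r.name, r.score, r.score >= mitigate_at) for r in ranked]


# Hypothetical entries in a risk register for a credit-scoring model.
register = [
    Risk("Training data under-represents some applicant groups", 4, 4),
    Risk("Model drift after a lending-policy change", 3, 3),
    Risk("Adversarial inputs evade fraud checks", 2, 5),
]

for name, score, needs_action in prioritize(register):
    action = "MITIGATE" if needs_action else "monitor"
    print(f"{score:>2}  {action:<8}  {name}")
```

Risks below the threshold are not dropped. They stay on the monitoring list and are re-scored as conditions change.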

Frequently Asked Questions

What is AI risk assessment?

AI risk assessment identifies and mitigates potential risks of AI systems in areas like finance, healthcare, and cybersecurity. It looks beyond the quality of individual decisions to address concerns such as safety, fairness, privacy, and compliance.

How does ML improve risk assessment?

ML-based risk assessment detects patterns in large datasets, predicts potential risks, and automates decisions, which can improve the accuracy and efficiency of risk assessment.

How is ML used in credit risk assessment?

ML lets financial institutions analyze creditworthiness more accurately and streamline loan approvals. It can also reduce bias, provided the models are trained on representative data and regularly audited for fairness.
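The sketch below illustrates the basic idea with logistic regression, a common baseline for probability-of-default models, fitted by batch gradient descent in plain Python. The two features, the six training rows, and the learning rate and epoch count are hypothetical and chosen only to keep the example small.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic(X, y, lr=0.5, epochs=5000):
    """Fit logistic-regression weights and bias with batch gradient descent."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            # Gradient of the log-loss is (predicted probability - label) * x.
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def default_probability(w, b, x):
    """Estimated probability that an applicant with features x defaults."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


# Hypothetical, pre-scaled features: [debt_to_income, missed_payments / 10]
X = [[0.1, 0.0], [0.2, 0.0], [0.3, 0.1], [0.6, 0.3], [0.8, 0.5], [0.9, 0.4]]
y = [0, 0, 0, 1, 1, 1]  # 1 = the applicant later defaulted

w, b = train_logistic(X, y)
low_risk = default_probability(w, b, [0.15, 0.0])
high_risk = default_probability(w, b, [0.85, 0.4])
print(f"low-risk applicant:  {low_risk:.2f}")
print(f"high-risk applicant: {high_risk:.2f}")
```

In practice, teams usually rely on a library implementation such as scikit-learn's `LogisticRegression` and use many more features. They also check the resulting scores for calibration and group fairness before deployment.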

What are the challenges of AI risk assessment?

Challenges include bias in AI models, data security concerns, regulatory compliance, algorithmic opacity and complexity, and the difficulty of interpreting AI-driven risk analyses.

How can organizations ensure effective AI risk assessment?

Organizations can ensure effective AI risk assessment by using diverse datasets, applying ethical AI principles, continuously monitoring AI systems, and complying with industry regulations.
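Continuous monitoring often includes checking whether the data a model sees in production has drifted away from its training data. One widely used measure is the Population Stability Index (PSI), sketched below in Python. The bin edges and sample scores are assumptions. The 0.1 and 0.25 alert thresholds are a common industry rule of thumb, not a formal standard.

```python
import bisect
import math


def bin_proportions(values, edges, eps=1e-6):
    """Share of values in each bin; out-of-range values go to the end bins."""
    n_bins = len(edges) - 1
    counts = [0] * n_bins
    for v in values:
        i = bisect.bisect_right(edges, v) - 1
        counts[min(max(i, 0), n_bins - 1)] += 1
    # eps keeps empty bins from producing log(0) or division by zero
    return [max(c / len(values), eps) for c in counts]


def psi(expected, actual, edges):
    """Population Stability Index of `actual` relative to `expected`."""
    e = bin_proportions(expected, edges)
    a = bin_proportions(actual, edges)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def drift_status(value):
    # Assumed thresholds: a common rule of thumb, not a formal standard.
    if value < 0.1:
        return "stable"
    if value < 0.25:
        return "moderate shift: investigate"
    return "significant shift: review or retrain"


# Hypothetical model scores at training time and in production.
edges = [0, 25, 50, 75, 100]
baseline = [12, 25, 33, 41, 47, 52, 58, 63, 71, 88]
current = [55, 61, 66, 72, 78, 81, 85, 90, 93, 97]

value = psi(baseline, current, edges)
print(f"PSI = {value:.2f} -> {drift_status(value)}")
```

Running a check like this on a schedule, per feature and on the model's output scores, turns continuous monitoring into a concrete alert that can trigger remediation.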