
Adversarial Machine Learning (AML) is a rapidly evolving field that poses both significant threats and opportunities for the security and reliability of Machine Learning (ML) systems. Attackers use gradient-based optimization and related techniques to craft adversarial examples: carefully manipulated inputs designed to exploit vulnerabilities in ML models and induce erroneous or harmful predictions. Defenders respond with techniques such as adversarial training and gradient masking.

Although AML techniques are often associated with security threats, adversarial ideas are also instrumental in applications such as Generative Adversarial Networks (GANs). One significant use of GANs is generating synthetic data for training models, which is particularly valuable where real-world data is scarce or sensitive and has contributed greatly to the progress of Generative AI systems.

Moreover, as ML permeates critical sectors like healthcare and finance, the potential consequences of high-impact adversarial attacks are far-reaching, ranging from misdiagnosis and financial fraud to catastrophic incidents. For instance, in the finance sector, adversarial attacks might manipulate stock trading algorithms, leading to significant financial losses and market instability.

Consequently, this article examines adversarial attacks: their types, the mechanisms that enable them, and their impact on real-world applications. We then explore seven defensive strategies for mitigating AML-driven threats and keeping ML systems robust and resilient in the face of increasingly advanced adversarial attacks.

Understanding Adversarial Attacks

In essence, adversarial attacks exploit the vulnerabilities in ML models by making small, often imperceptible changes to the input data, leading to significant errors in the model’s output.

Types of Adversarial Attacks

Evasion Attacks: Evasion attacks occur during the deployment (inference) phase of an ML model. Attackers make minor alterations to the input data that are often imperceptible to humans but lead the model to produce incorrect outputs.

- Example: Subtly modifying an image of a panda such that a neural network confidently misclassifies it as a gibbon, despite the changes being nearly invisible.

- Impact: Such attacks can cause significant issues in applications where accuracy is critical, such as image recognition systems used in security applications.
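
To make the evasion mechanism concrete, here is a minimal sketch using the fast gradient sign method (FGSM) against a toy logistic-regression classifier. The weights, input, and step size `eps` are hypothetical values chosen for illustration, and the step is far larger than the near-invisible changes used against real image models.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(w, b, x):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def sign(g):
    """Return -1, 0, or 1 depending on the sign of g."""
    return (g > 0) - (g < 0)

def fgsm(w, b, x, y, eps):
    """Shift each feature by eps in the direction that increases the
    cross-entropy loss for the true label y."""
    p = predict_proba(w, b, x)
    # For a sigmoid output, d(loss)/dx_i = (p - y) * w_i
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]

# Hypothetical toy model and input (illustrative values only)
w, b = [2.0, -1.5, 0.5], 0.1
x, y = [0.4, 0.1, 0.2], 1

x_adv = fgsm(w, b, x, y, eps=0.3)
clean_p = predict_proba(w, b, x)      # model's confidence on the clean input
adv_p = predict_proba(w, b, x_adv)    # confidence after the perturbation
print(f"clean: {clean_p:.3f}  adversarial: {adv_p:.3f}")
```

In this toy setup the bounded perturbation is enough to move the prediction across the 0.5 decision threshold, which is the essence of an evasion attack.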

Poisoning Attacks: Poisoning attacks take place during the training phase of an ML model. Attackers introduce malicious data into the training set, corrupting the learning process and embedding errors into the model.

- Example: Attackers inject falsified medical records into training data, causing a diagnostic model to consistently misdiagnose certain conditions.

- Impact: This type of attack can have far-reaching consequences, particularly in fields where models learn from large-scale data collections (e.g., finance & healthcare).

Model Extraction Attacks: Attackers systematically query an ML model and analyze its responses to reverse-engineer its internal parameters or architecture. This process allows them to replicate the model’s behavior or extract sensitive information.

- Example: An attacker could query a proprietary ML model used in financial trading to uncover its strategy, potentially recreating it for competitive advantage.

- Impact: This can lead to the loss of intellectual property and sensitive data, which is especially concerning for proprietary or confidential models.

Model Inversion Attacks: These attacks allow adversaries to reconstruct sensitive input data from the model’s outputs.

- Example: An attacker could leverage access to a facial recognition system to recreate images of the individuals the system was trained on by analyzing the system’s outputs when given specific inputs.

- Impact: This could result in severe privacy breaches, as attackers could reconstruct sensitive data such as medical records or personal images from the system’s responses, violating user privacy.

Prompt Injection Attacks: Prompt injection attacks involve manipulating the input prompts to AI models, particularly in natural language processing, to alter their behavior or outputs.

- Example: An attacker might craft highly specific and complex prompts to ‘jailbreak’ a chatbot, bypassing its safety mechanisms. Modern AI models are designed to resist simple malicious prompts, so casual users are unlikely to circumvent these safeguards. Skilled attackers, however, can use sophisticated techniques to trick the AI into generating inappropriate or restricted content.

- Impact: This could lead to the dissemination of harmful content, misinformation, or even malicious actions if users trust the AI’s responses. It could also result in reputational damage for organizations deploying potentially corrupted AI systems.

Real-World Implications of Adversarial Attacks

Adversarial attacks can have wide-ranging real-world consequences, disrupting critical sectors and compromising public safety:

- Healthcare: Inaccurate diagnosis or treatment recommendations due to manipulated medical images could lead to delayed or improper care, endangering patient lives.

- Finance: Successful attacks on fraud detection systems could facilitate large-scale financial crimes, causing significant losses for individuals and institutions.

- Transportation: Adversarial attacks on autonomous vehicles could manipulate their perception of the environment, leading to accidents with potentially fatal consequences.

- Cybersecurity: By evading detection, adversarial attacks could allow malware to infiltrate systems, compromising sensitive data and disrupting essential services.

Identifying Adversarial Attacks

Unusual Model Behavior:

- Indicator: Significant deviations in model performance, such as sudden drops in accuracy or unexpected outputs for particular inputs, can signal an adversarial attack.

- Example: A model that typically classifies road signs with high accuracy suddenly misclassifies common signs.

Input Anomalies:

- Indicator: Inputs that are statistically or visually unusual compared to typical data can be flagged for closer inspection.

- Example: Slightly altered images or data that appear to have been modified in ways that do not align with normal variations.

Output Confidence Scores:

- Indicator: Unusually low confidence on inputs that are normally classified with high certainty, or unusually high confidence on ambiguous inputs, can indicate potential adversarial inputs.

- Example: A neural network outputting low confidence levels for well-defined images, or overly confident predictions on ambiguous inputs.
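
A confidence check of this kind could be sketched as follows. The function name `flag_by_confidence` and both thresholds are assumptions for illustration; in practice the cut-offs would be calibrated on the model’s own validation data.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)                       # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def flag_by_confidence(logits, low=0.6, high=0.999):
    """Return 'low' or 'high' when the top-class probability falls outside
    the expected band, otherwise None. Thresholds are hypothetical."""
    top = max(softmax(logits))
    if top < low:
        return "low"
    if top > high:
        return "high"
    return None

print(flag_by_confidence([1.0, 0.9, 0.8]))    # nearly uniform scores
print(flag_by_confidence([20.0, 0.0, 0.0]))   # extremely peaked scores
print(flag_by_confidence([3.0, 0.0, 0.0]))    # ordinary confident prediction
```

A flag is only a prompt for closer inspection: legitimate but unusual inputs can trigger it too.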

Statistical Methods:

- Technique: Using statistical techniques to analyze the distribution of inputs and outputs for anomalies.

- Example: Applying techniques like clustering or principal component analysis (PCA) to detect deviations from the norm.
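
As a simpler stand-in for clustering or PCA, the sketch below scores an input by how far it sits from trusted reference data, measured in per-feature standard deviations. The reference samples and the idea of a cut-off around 3 are illustrative assumptions, not values from a real system.

```python
import math
import statistics

def fit_reference(samples):
    """Per-feature mean and standard deviation of trusted reference inputs."""
    columns = list(zip(*samples))
    means = [statistics.fmean(c) for c in columns]
    # Fall back to 1.0 when a feature never varies, to avoid dividing by zero
    stds = [statistics.pstdev(c) or 1.0 for c in columns]
    return means, stds

def anomaly_score(x, means, stds):
    """Root-mean-square z-score of x relative to the reference data."""
    z2 = [((xi - m) / s) ** 2 for xi, m, s in zip(x, means, stds)]
    return math.sqrt(sum(z2) / len(z2))

# Hypothetical reference data with two features
reference = [[0.0, 1.0], [0.2, 1.1], [-0.1, 0.9], [0.1, 1.0]]
means, stds = fit_reference(reference)

typical_score = anomaly_score([0.1, 1.0], means, stds)
outlier_score = anomaly_score([1.0, 2.0], means, stds)
print(f"typical: {typical_score:.2f}  outlier: {outlier_score:.2f}")
```

Inputs whose score exceeds a chosen cut-off can be routed to closer inspection. A PCA-based variant would follow the same pattern, scoring inputs by reconstruction error instead of z-scores.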

Gradient Analysis:

- Technique: Examining the gradients of the model’s output (or loss) with respect to its input to identify unusual patterns that may indicate adversarial perturbations, that is, small and often imperceptible changes made to input data.

- Example: Unusually large gradient magnitudes that suggest the model is overly sensitive to trivial changes in input data.

Consistency Checks:

- Technique: Running inputs through multiple models or using ensemble methods to check for consistency in predictions. (Ensemble methods enhance robustness by combining multiple models. For a detailed explanation, see the ‘Ensemble Methods’ section below.)

- Example: An input produces widely varying outputs across different models.

Real-Time Monitoring:

- Technique: Continuously monitoring model inputs and outputs to detect and respond to potential attacks immediately.

- Example: Using anomaly detection systems to flag unexpected patterns in real time.

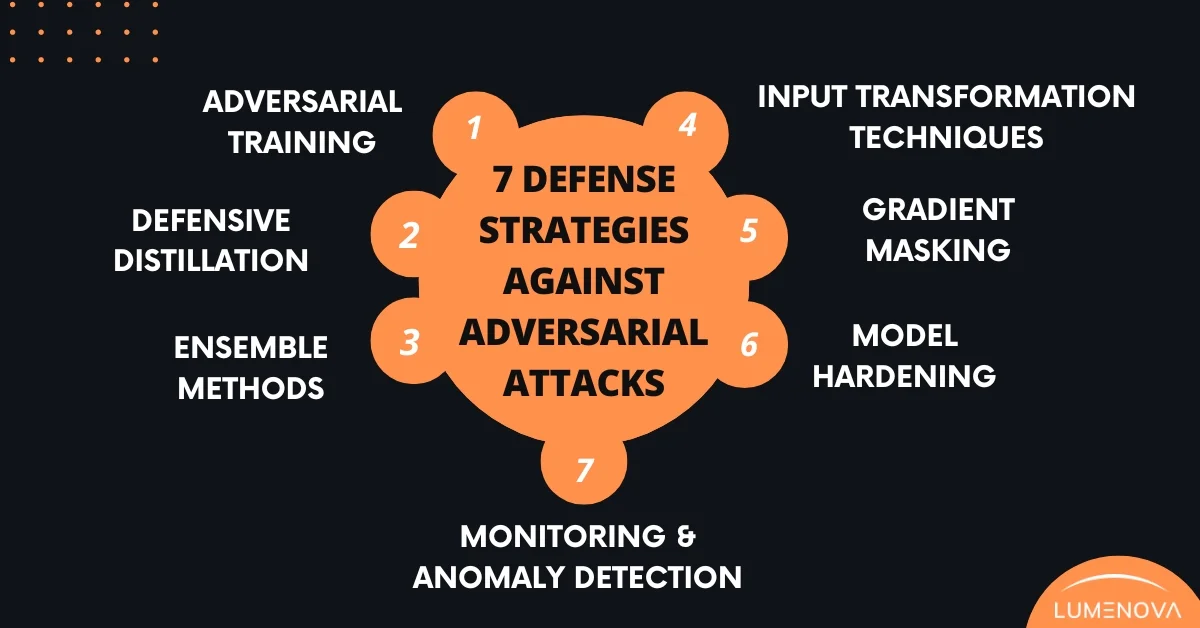

7 Defense Strategies Against Adversarial Attacks

1. Adversarial Training

Incorporating adversarial examples into training data is a proactive defense mechanism known as adversarial training. By exposing models to diverse attack scenarios during training, adversarial training equips them with the ability to recognize and counteract these manipulations, bolstering their overall robustness. This approach essentially vaccinates models against known attacks, making them more reliable in real-world applications.

However, adversarial training is not without its challenges. It requires considerable computational resources and time because generating adversarial examples and incorporating them into the training process is resource-intensive.
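
The idea can be sketched on a toy logistic-regression model: at each step, the current model is attacked with FGSM and then updated on both the clean input and its perturbed counterpart. The dataset, learning rate, epoch count, and `eps` are hypothetical, and real adversarial training typically uses stronger attacks and deep networks.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def fgsm(w, b, x, y, eps):
    """Perturb x by eps per feature in the loss-increasing direction."""
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]            # d(cross-entropy)/dx
    return [xi + eps * ((g > 0) - (g < 0)) for xi, g in zip(x, grad)]

def sgd_step(w, b, x, y, lr):
    """One stochastic gradient descent update on a single example."""
    err = predict_proba(w, b, x) - y             # d(cross-entropy)/dz
    w = [wi - lr * err * xi for wi, xi in zip(w, x)]
    return w, b - lr * err

def adversarial_train(data, eps=0.3, lr=0.5, epochs=20):
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            x_adv = fgsm(w, b, x, y, eps)        # attack the current model
            w, b = sgd_step(w, b, x, y, lr)      # learn from the clean input
            w, b = sgd_step(w, b, x_adv, y, lr)  # and from its perturbed twin
    return w, b

def accuracy(w, b, data):
    hits = sum((predict_proba(w, b, x) > 0.5) == bool(y) for x, y in data)
    return hits / len(data)

# Hypothetical, well-separated two-class dataset
data = [([1.0, 1.2], 1), ([1.2, 0.8], 1), ([0.8, 1.0], 1),
        ([-1.0, -1.1], 0), ([-1.2, -0.9], 0), ([-0.9, -1.0], 0)]

w, b = adversarial_train(data)
attacked = [(fgsm(w, b, x, y, 0.3), y) for x, y in data]
clean_acc = accuracy(w, b, data)
robust_acc = accuracy(w, b, attacked)
print(f"clean accuracy: {clean_acc:.2f}  accuracy under FGSM: {robust_acc:.2f}")
```

Each training example costs an extra attack and an extra update here, which hints at why the overhead grows quickly with stronger, multi-step attacks.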

2. Gradient Masking

Gradient masking involves obscuring the gradient information that attackers exploit to craft adversarial examples. Gradients tell an attacker how to change an input in order to change the model’s output, so they are essential for crafting effective adversarial perturbations. Defenders can conceal or distort them in two main ways. One is adding noise: introducing random noise into the gradient calculations disrupts the attacker’s ability to determine the precise changes needed. The other is using non-differentiable operations: pixel thresholding, for example, sets each pixel value to 0 or 255, which breaks smooth gradient information and makes it difficult for attackers to calculate accurate modifications. Both approaches significantly hinder the creation of effective adversarial inputs.

Gradient masking can be an effective defense mechanism, although some advanced attacks can still bypass it by using alternative strategies, such as black-box attacks that do not rely directly on gradient information. Furthermore, gradient masking can sometimes lead to a false sense of security, obscuring attack symptoms rather than addressing the underlying vulnerabilities of the model.
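
The effect of a non-differentiable step can be illustrated with a small sketch. The `score` function is a hypothetical stand-in for a model, pixel values are scaled to the range 0 to 1 rather than 0 to 255, and the attacker is assumed to estimate gradients by finite differences.

```python
def binarize(x, threshold=0.5):
    """Non-differentiable preprocessing: snap each value to 0.0 or 1.0."""
    return [1.0 if xi >= threshold else 0.0 for xi in x]

def score(x):
    """Hypothetical stand-in for a model's output on an input."""
    weights = [0.8, -0.4, 0.3]
    return sum(w * xi for w, xi in zip(weights, x))

def finite_difference_gradient(f, x, h=1e-4):
    """Estimate d f / d x_i by nudging each feature up and down by h."""
    grad = []
    for i in range(len(x)):
        up, down = list(x), list(x)
        up[i] += h
        down[i] -= h
        grad.append((f(up) - f(down)) / (2 * h))
    return grad

x = [0.9, 0.2, 0.7]
open_grad = finite_difference_gradient(score, x)
masked_grad = finite_difference_gradient(lambda v: score(binarize(v)), x)
print("without thresholding:", open_grad)
print("with thresholding:   ", masked_grad)
```

With thresholding in front of the model, small nudges no longer change the output, so the estimated gradient carries no direction. A larger change that crosses the threshold still alters the output, which is why masking hides the symptom rather than removing the vulnerability.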

3. Defensive Distillation

Defensive distillation aims to reduce model sensitivity to minor input perturbations by producing smoother, less sharply peaked predictions. A second model is trained to mimic the softened outputs of an original model that was trained at a higher softmax temperature. The softmax function, widely used in classification tasks, converts a set of values into probabilities that sum to 1, which makes outputs easier to interpret. Raising its temperature yields smoother, less confident probability distributions, making the model less sensitive to precise perturbations.

Because the smoothed outputs give attackers smaller, less informative gradients to work with, defensive distillation hinders the creation of potent adversarial inputs. This method is most effective against minor perturbations, making it more difficult for attackers to find the exact input modifications needed to deceive the model.

Despite its effectiveness, defensive distillation is not a foolproof solution. For instance, it can be bypassed by sophisticated attacks that exploit the remaining vulnerabilities in the model. Additionally, the process of distillation can sometimes lead to a loss of accuracy on clean, unperturbed data. Therefore, this trade-off between robustness and accuracy must be carefully managed to ensure that the model remains effective in real-world scenarios.
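
The softening step at the heart of distillation is a softmax with a temperature parameter. The sketch below shows only that step, not the full two-model training procedure, and the logits and the temperature of 20 are arbitrary illustrative values.

```python
import math

def softmax(logits, temperature=1.0):
    """Softmax with temperature: higher values flatten the distribution."""
    scaled = [v / temperature for v in logits]
    m = max(scaled)                        # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [4.0, 1.0, 0.5]                   # hypothetical raw scores for three classes
hard = softmax(logits, temperature=1.0)    # ordinary softmax
soft = softmax(logits, temperature=20.0)   # softened targets for the second model

print("T=1: ", [round(p, 3) for p in hard])
print("T=20:", [round(p, 3) for p in soft])
```

The ranking of classes is unchanged, but at the higher temperature the probabilities sit much closer together; these softened distributions are what the second model is trained to reproduce.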

4. Ensemble Methods

Ensemble methods leverage the collective intelligence of multiple models to compensate for individual model weaknesses, enhancing overall system robustness. By combining the predictions of different models, ensemble methods create a more resilient defense against adversarial examples. An attack that deceives one model may not deceive the others, so the remaining models provide redundancy and can outvote the compromised prediction.

Nevertheless, ensemble methods can be computationally intensive and complex to implement. Maintaining and synchronizing multiple models is challenging, especially in resource-constrained environments. Furthermore, while ensemble methods improve robustness, they do not completely eliminate the risk of adversarial attacks. In fact, attackers can develop strategies that target the ensemble as a whole, exploiting any shared vulnerabilities among the constituent models.
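
A majority vote is one simple way to combine models. In the sketch below the three classifiers are hypothetical threshold rules standing in for trained models, and the agreement fraction doubles as a rough consistency signal.

```python
from collections import Counter

def ensemble_predict(models, x):
    """Return (majority label, fraction of models that agree with it)."""
    votes = Counter(model(x) for model in models)
    label, count = votes.most_common(1)[0]
    return label, count / len(models)

# Hypothetical stand-in classifiers, each looking at the input differently
models = [
    lambda x: int(x[0] > 0.5),
    lambda x: int(x[0] + x[1] > 1.0),
    lambda x: int(x[1] > 0.4),
]

label, agreement = ensemble_predict(models, [0.6, 0.3])
print(f"label: {label}  agreement: {agreement:.2f}")
```

Here one model is outvoted by the other two. Low agreement can also be logged as a warning sign, since inputs that split the ensemble deserve a closer look.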

5. Input Transformation Techniques

Input transformation techniques involve modifying input data before processing to disrupt adversarial manipulations and mitigate their impact. Essentially, these techniques “clean” the input data, removing or reducing adversarial perturbations before they can affect the model’s predictions. For example, methods such as feature squeezing, which reduces the precision of input data, and image preprocessing, which includes resizing, cropping, or applying filters, can effectively deter adversarial attacks.

However, input transformation techniques can also fall short. While they can effectively counter some known attacks, they tend to degrade the quality of the input data, negatively affecting the model’s performance on clean, unperturbed data. Moreover, sophisticated adversarial attacks can still find ways to bypass these transformations, especially if the attacker is aware of the specific transformations being used. This highlights the need for continuous adaptation and development of new transformation techniques to stay ahead of evolving adversarial tactics.
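
Feature squeezing by bit-depth reduction can be sketched in a few lines. Pixel values are assumed to be scaled to the range 0 to 1, and the choice of 3 bits and the sample values are illustrative.

```python
def squeeze_bit_depth(pixels, bits=3):
    """Quantize values in [0, 1] to 2**bits evenly spaced levels."""
    levels = 2 ** bits - 1
    return [round(p * levels) / levels for p in pixels]

clean = [0.2, 0.6, 0.9]
perturbed = [0.21, 0.59, 0.91]     # hypothetical small adversarial nudges

squeezed_clean = squeeze_bit_depth(clean)
squeezed_perturbed = squeeze_bit_depth(perturbed)
print(squeezed_clean == squeezed_perturbed)
```

Both inputs collapse to the same quantized values, so the model sees no difference between them. The same rounding also discards legitimate detail, and a perturbation that pushes a value across a quantization boundary survives the transformation.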

6. Model Hardening

Model hardening fortifies the internal structure and training processes of ML models, enhancing their resilience against adversarial inputs. Techniques such as robust optimization and regularization desensitize models to minor input changes, promoting stability under adversarial conditions. Robust optimization involves modifying the training process to explicitly account for adversarial perturbations whereas regularization adds constraints to the model’s parameters to prevent overfitting and improve generalization.

These methods work by making the model’s decision boundaries more stable and less prone to being disrupted by adversarial noise. However, model hardening techniques often require careful tuning and significant computational resources. Additionally, they need to be continuously updated to address new types of adversarial strategies, making them an ongoing defense strategy rather than a one-time solution.

7. Monitoring and Anomaly Detection

Real-time monitoring and anomaly detection systems proactively identify and respond to adversarial attacks by analyzing patterns and deviations from expected behavior. These systems continuously monitor the model’s inputs and outputs, flagging unusual patterns that may indicate a potential threat. For example, sudden changes in the distribution of input data or unexpected shifts in model predictions can serve as red flags, triggering an alert or initiating a defense mechanism.

Integrating anomaly detection with ML models can enhance their ability to resist evasion attacks, which involve manipulating input data in subtle ways to trick a trained model into making incorrect predictions. In effect, real-time monitoring allows for the immediate identification and mitigation of adversarial threats, reducing the potential damage caused. Nevertheless, implementing effective anomaly detection systems requires a deep understanding of normal model behavior and the ability to distinguish between benign anomalies and actual adversarial attacks. Moreover, these systems need to be robust against false positives and negatives, which can lead to unnecessary interruptions and reduced trust in the model.
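
One way to monitor a model in real time is to compare a sliding window of a tracked value, such as prediction confidence, against a trusted baseline. The `DriftMonitor` class, its window size, and its threshold are hypothetical choices for this sketch.

```python
from collections import deque
import statistics

class DriftMonitor:
    """Flag when the recent mean of a monitored value drifts from a baseline."""

    def __init__(self, baseline, window=5, z_threshold=3.0):
        self.mean = statistics.fmean(baseline)
        self.std = statistics.pstdev(baseline) or 1.0
        self.recent = deque(maxlen=window)
        self.z_threshold = z_threshold

    def observe(self, value):
        """Record a value; return True once the window mean looks anomalous."""
        self.recent.append(value)
        if len(self.recent) < self.recent.maxlen:
            return False                   # not enough data to judge yet
        window_mean = statistics.fmean(self.recent)
        # Standard error of a window mean under the baseline distribution
        stderr = self.std / len(self.recent) ** 0.5
        return abs(window_mean - self.mean) / stderr > self.z_threshold

# Hypothetical confidence scores collected during normal operation
baseline = [0.90, 0.92, 0.88, 0.91, 0.89, 0.90, 0.93, 0.87]
monitor = DriftMonitor(baseline)

normal_alerts = [monitor.observe(v) for v in [0.91, 0.89, 0.90, 0.92, 0.88]]
attack_alerts = [monitor.observe(v) for v in [0.60, 0.55, 0.62, 0.58, 0.61]]
print("normal traffic:", normal_alerts)
print("shifted traffic:", attack_alerts)
```

The threshold controls the trade-off described above: a lower value catches subtler shifts but raises more false alarms, while a higher value does the opposite.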

Evaluating Defense Strategies

- Attack and Domain-Agnosticism: Effective defenses should be robust against a wide range of attacks and applicable across different domains. This versatility is crucial for scalable deployment in various real-world scenarios.

- AML Evaluation Metrics: Standardized metrics are necessary for measuring the performance of defense strategies. Common metrics include accuracy under attack, robustness scores, and the rate of successful attack prevention. They help in comparing different approaches and understanding their relative effectiveness.

- Source Code and Dataset Availability: Sharing the source code and evaluation datasets of defense methods promotes transparency and reproducibility. This enables other researchers to validate results and build upon existing work, fostering collaboration and innovation in AML defense.
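
Two of the evaluation metrics mentioned above can be computed as in the sketch below. The definitions used here are one common convention rather than a fixed standard, and the labels and predictions are made-up values.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that match the true labels."""
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)

def attack_success_rate(clean_preds, adv_preds, labels):
    """Share of originally correct predictions that the attack turned wrong."""
    correct = [i for i, (p, y) in enumerate(zip(clean_preds, labels)) if p == y]
    if not correct:
        return 0.0
    flipped = sum(adv_preds[i] != labels[i] for i in correct)
    return flipped / len(correct)

# Hypothetical evaluation results on five inputs
labels      = [1, 0, 1, 1, 0]
clean_preds = [1, 0, 1, 0, 0]   # predictions on unmodified inputs
adv_preds   = [0, 0, 1, 0, 1]   # predictions on adversarially perturbed inputs

clean_accuracy = accuracy(clean_preds, labels)
accuracy_under_attack = accuracy(adv_preds, labels)
success_rate = attack_success_rate(clean_preds, adv_preds, labels)
print(clean_accuracy, accuracy_under_attack, success_rate)
```

Reporting clean accuracy alongside accuracy under attack makes the robustness and accuracy trade-off of a defense visible in a single comparison.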

Challenges and Future Directions

Despite significant progress in developing AML defenses, several challenges remain:

- Performance Trade-offs: Enhancing the robustness of ML models often comes at the cost of increased computational complexity or reduced performance. Balancing security with efficiency remains a significant challenge.

- Domain-Specific Solutions: Different applications may require tailored defense strategies. What works well for image recognition might not be suitable for NLP or audio processing, necessitating diverse approaches to defense.

Future Research Directions

As AML evolves, several promising avenues for future research and development emerge:

- Cross-Domain Defense Strategies: Developing defenses that are effective across multiple domains can provide broader protection against adversarial attacks. This involves creating versatile techniques that can be adapted to different types of models and data.

- Automated Defense Mechanisms: Leveraging automation and AI to dynamically adapt and enhance defense mechanisms can provide real-time protection against evolving threats. This includes using reinforcement learning and other AI techniques to continuously improve defenses based on evolving attack patterns.

- Explainability and Transparency: Improving the transparency and explainability of ML models can foster an understanding of how adversarial attacks succeed and the technical measures required to design more effective defenses. This involves making models more interpretable and providing insights into their decision-making processes.

- Collaborative Defense Efforts: Encouraging collaboration between researchers, industry, academia, and government agencies can lead to more comprehensive and effective defense strategies. Sharing knowledge, datasets, and tools can accelerate progress in combating adversarial attacks.

Reflective Questions on Adversarial Attacks in Machine Learning

As we conclude our discussion on adversarial attacks in machine learning, it’s important to step back and reflect on the broader implications and ethical considerations surrounding this critical issue. In this respect, we pose a few thought-provoking questions for readers to ponder:

- In the context of adversarial attacks, which values do you believe are most crucial to uphold, and why? Conversely, are there any traditional values in cybersecurity or machine learning that you think need re-evaluation? Think about the principles of transparency, fairness, and security. How do these values interact and sometimes conflict in the face of adversarial threats?

- Have you encountered any instances where adversarial attacks or defenses have influenced your daily life or professional activities? Reflect on personal or observed experiences where machine learning systems were manipulated or defended against such attacks. How did these events shape your view on the reliability and security of AI systems?

- When considering AI governance in relation to adversarial attacks, what components of governance excite and/or concern you the most? Contemplate the balance between innovative defensive strategies and the ever-evolving nature of adversarial threats. How do you perceive the role of governance in maintaining the safety and trustworthiness of AI systems?

Conclusion

Adversarial attacks present a significant threat to the integrity and reliability of machine learning systems. As these attacks become more sophisticated, the need for robust and resilient defense strategies becomes increasingly critical. By thoroughly understanding the nature of these threats and developing comprehensive defense mechanisms, we can safeguard the technologies that drive our modern world.

To address these challenges effectively, it’s crucial to combine a variety of defensive techniques, proactive monitoring, and collaborative efforts. With these strategies in place, we can build more resilient ML systems that are capable of withstanding the sophisticated adversarial threats they may face.

Moreover, continuous innovation and vigilance are essential in this ongoing battle against adversarial attacks. As we implement and refine defenses, the role of governance and adherence to principles such as transparency, fairness, and security remains critical.

If you wish to further examine the intricacies of adversarial attacks and machine learning security, we encourage you to visit Lumenova’s blog for further insights and detailed explorations.

For those looking to enhance their defense strategies against adversarial attacks, we invite you to book a demo on our platform today, to discover how our solutions can fortify your systems and help you stay ahead of evolving adversarial threats.

Ultimately, the fight against adversarial attacks requires a balanced approach that integrates innovative defensive strategies with proactive governance. By fostering collaboration and committing to continual improvement and remediation, we can ensure the safety, trustworthiness, and reliability of our AI systems for the future.