April 24, 2025

AI Governance Platform vs AI Risk Management Tool: You Need Both


Technology and risk leaders in heavily regulated industries face a dual challenge: maximizing AI’s benefits for efficiency and competitive advantage while ensuring compliance, stability, and risk mitigation. In this high-stakes environment, two types of solutions frequently surface: AI governance platforms and AI risk management tools.

Although the two are often used interchangeably or seen as overlapping, understanding their distinct roles, and why you fundamentally need both, is critical for successful and sustainable AI adoption. Getting this wrong isn’t just a technical misstep; it’s a significant business and compliance liability.

Let’s dissect the difference between the two and explain why integrating both is non-negotiable for robust AI operations in heavily regulated industries.

AI Governance Platform

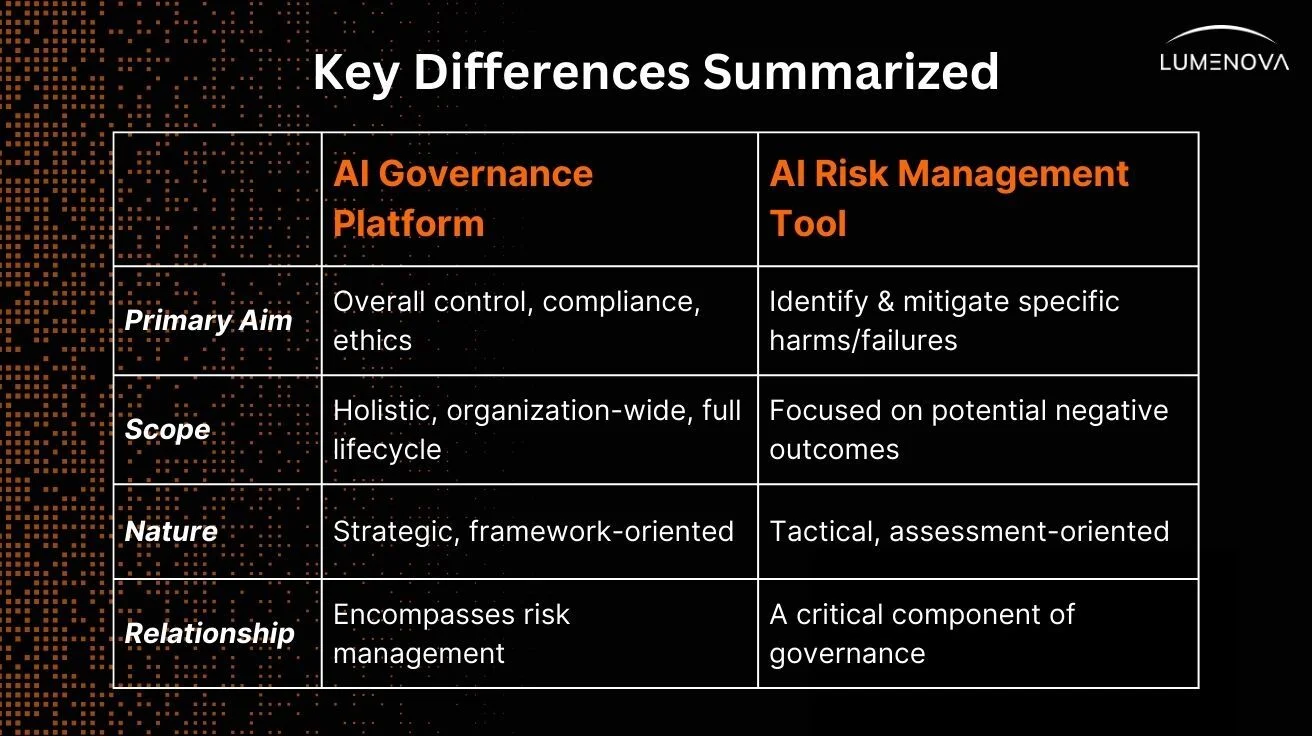

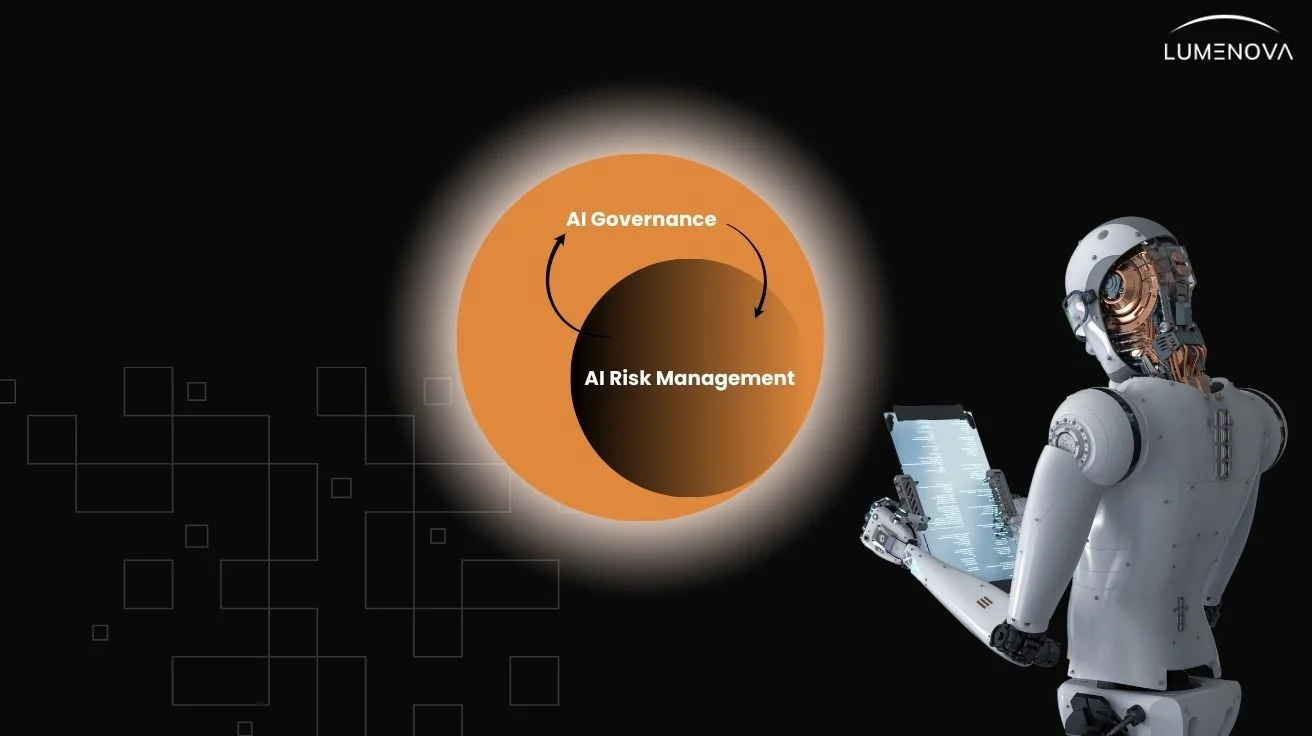

While the two aforementioned terms are closely related and often overlap, they have distinct primary focuses and scopes. Think of it this way: AI governance is the overarching strategy and framework, while AI risk management is a crucial component or specialty within that framework.

Let’s quickly clarify what we mean, cutting through the hype.

AI governance is the strategic rulebook and oversight framework designed to ensure the responsible, ethical, transparent, accountable, and compliant use of AI across an organization. Its scope is broad and holistic. Its primary goal is to establish and enforce the policies, accountability structures, and standards that dictate how AI should be developed, deployed, and managed responsibly, keeping them aligned with overall business strategy and values and mapped to regulations such as the EU AI Act, frameworks such as the NIST AI RMF, and industry-specific mandates.

Role and Functions of an AI Governance Platform

An AI governance platform often serves as the central system for managing policies, inventories, documentation, and audit trails. Think beyond static documents. We’re talking about:

- Centralized model registries: Rich with metadata detailing data lineage, code versions (GitOps integration), ownership, intended use, training parameters, and validation results.

- Policy-as-code capabilities: Expressing governance rules as machine-readable, version-controlled code that can be evaluated automatically (e.g., fairness metric thresholds, required security scans, data usage constraints).

- Automated compliance workflows: Triggering reviews, approvals, and documentation generation based on predefined governance rules.

- Immutable audit trails: Logging every action, decision, and model transition for unimpeachable traceability required by auditors and regulators.

- Fine-grained access control: Ensuring only authorized personnel and systems can interact with specific models, data, or lifecycle stages.
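To make the policy-as-code idea concrete, here is a minimal Python sketch of a deployment gate that checks a model-registry entry against governance rules. Everything in it is hypothetical: the `ModelRecord` fields, the `GOV-00x` rule IDs, and the 0.8 disparate impact threshold (borrowed from the common four-fifths rule of thumb) are illustrative assumptions, not the schema or rules of any particular governance platform.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ModelRecord:
    # Hypothetical registry entry; field names are illustrative, not a real product schema.
    name: str
    version: str
    owner: str
    intended_use: str
    data_lineage: list[str]
    validation: dict[str, float] = field(default_factory=dict)
    security_scan_passed: bool = False


@dataclass(frozen=True)
class Policy:
    # A governance rule stored as data: an ID, a human-readable description,
    # and a predicate that returns True when the model complies.
    rule_id: str
    description: str
    check: Callable[[ModelRecord], bool]


# Example policy set; the rules and thresholds are illustrative assumptions.
POLICIES = [
    Policy("GOV-001", "Model has a named owner", lambda m: bool(m.owner)),
    Policy("GOV-002", "Training data lineage is documented",
           lambda m: len(m.data_lineage) > 0),
    Policy("GOV-003", "Required security scan has passed",
           lambda m: m.security_scan_passed),
    Policy("GOV-004", "Disparate impact ratio is at least 0.8",
           lambda m: m.validation.get("disparate_impact_ratio", 0.0) >= 0.8),
]


def evaluate(record: ModelRecord, policies: list[Policy]) -> list[str]:
    """Return the IDs of every policy the record violates."""
    return [p.rule_id for p in policies if not p.check(record)]


def deployment_gate(record: ModelRecord, policies: list[Policy]) -> bool:
    """Approve only if no policy is violated; otherwise report the failures."""
    failures = evaluate(record, policies)
    if failures:
        print(f"{record.name}:{record.version} blocked by {', '.join(failures)}")
        return False
    print(f"{record.name}:{record.version} approved")
    return True


# Hypothetical candidate model that passes every rule except the fairness threshold.
candidate = ModelRecord(
    name="credit-scoring",
    version="1.4.0",
    owner="risk-analytics",
    intended_use="consumer credit pre-screening",
    data_lineage=["applications_2024_snapshot"],
    validation={"disparate_impact_ratio": 0.72},
    security_scan_passed=True,
)
deployment_gate(candidate, POLICIES)  # prints "credit-scoring:1.4.0 blocked by GOV-004"
```

Keeping each rule as data with an ID makes failures easy to report and to record in an audit trail; a real platform would more likely load such rules from version-controlled configuration than hard-code them.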

Core functions of an AI governance platform include:

- Ensuring compliance with evolving regulations and maintaining operational readiness.

- Promoting transparency and explainability in AI systems.

- Providing governance metrics to measure performance, risks, and ROI.

- Facilitating enterprise-wide oversight of AI initiatives and AI lifecycle management.
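The immutable audit trails described above are often approximated with a hash chain, in which each log entry stores the hash of its predecessor, so editing any past entry breaks verification. The minimal sketch below assumes an in-memory list and SHA-256 over canonical JSON; it is tamper-evident rather than truly immutable, and detecting deletion of the newest entries would additionally require anchoring the latest hash outside the log, which this example does not do. The `AuditTrail` class and its fields are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditTrail:
    """Append-only log where each entry commits to the hash of the previous one."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self.entries = []

    def append(self, actor, action, subject, timestamp=None):
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        entry = {
            "actor": actor,
            "action": action,
            "subject": subject,
            "timestamp": timestamp or datetime.now(timezone.utc).isoformat(),
            "prev_hash": prev_hash,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    @staticmethod
    def _digest(entry):
        # Hash a canonical JSON encoding of every field except the hash itself.
        payload = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(payload, sort_keys=True).encode("utf-8")).hexdigest()

    def verify(self):
        """Return True only if every entry matches its hash and links to its predecessor."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(entry):
                return False
            prev_hash = entry["hash"]
        return True


trail = AuditTrail()
trail.append("alice", "approve", "credit-scoring:1.4.0", timestamp="2025-04-24T00:00:00+00:00")
trail.append("bob", "deploy", "credit-scoring:1.4.0", timestamp="2025-04-24T01:00:00+00:00")
print(trail.verify())  # True
trail.entries[0]["actor"] = "mallory"  # simulate tampering with a past entry
print(trail.verify())  # False
```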


AI Risk Management Tool

If AI governance can be pictured as the control plane across an organization’s entire AI portfolio, AI risk management is the tactical defense and inspection mechanism. Its scope is more focused, drilling down into the specific potential negative outcomes of AI.

The primary goal is to specifically identify, assess, prioritize, mitigate, and continuously monitor potential risks and harms associated with individual AI systems or processes, such as:

- Data bias leading to unfair outcomes

- Model performance degradation

- Security vulnerabilities that are exploitable by adversaries

- Privacy breaches

- Lack of transparency (the “black box” problem)

… and ultimately, non-compliance stemming from these failures.

Role and Functions of an AI Risk Management Tool

An AI risk management tool provides the operational safety net, performing the necessary checks and balances through specialized diagnostics and technical safeguards applied to individual models and systems throughout their lifecycle. This includes technical probes and shields such as:

- Quantifiable bias & fairness analysis

- Explainability algorithm integration (XAI)

- Adversarial robustness testing

- Drift detection mechanisms

- ML-specific security scanning

- Runtime performance monitoring
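Two of the probes listed above, bias analysis and drift detection, can be illustrated with widely used metrics: the disparate impact ratio (the positive-outcome rate of one group divided by that of a reference group) and the population stability index (PSI), which compares a live score distribution against a baseline over shared bins. This is a minimal pure-Python sketch, not any vendor's implementation; the function names are hypothetical, and the 0.1 and 0.25 PSI bands are a common industry rule of thumb rather than a regulatory standard.

```python
import bisect
import math


def selection_rate(preds, groups, group):
    """Share of positive predictions (1s) among members of one group."""
    members = [p for p, g in zip(preds, groups) if g == group]
    return sum(members) / len(members)


def disparate_impact_ratio(preds, groups, unprivileged, privileged):
    """Selection rate of the unprivileged group divided by that of the privileged group."""
    return selection_rate(preds, groups, unprivileged) / selection_rate(preds, groups, privileged)


def population_stability_index(baseline, live, edges, eps=1e-6):
    """PSI = sum((live_i - base_i) * ln(live_i / base_i)) over shared bins."""
    def proportions(values):
        counts = [0] * (len(edges) - 1)
        for v in values:
            # Locate the bin for v; out-of-range values are clamped to the end bins.
            i = min(max(bisect.bisect_right(edges, v) - 1, 0), len(edges) - 2)
            counts[i] += 1
        # Floor empty bins at eps so the logarithm stays defined.
        return [max(c / len(values), eps) for c in counts]

    base, cur = proportions(baseline), proportions(live)
    return sum((c - b) * math.log(c / b) for b, c in zip(base, cur))


def drift_status(psi):
    # Rule-of-thumb bands commonly used in model monitoring; not a formal standard.
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "moderate"
    return "significant"


preds = [1, 1, 0, 1, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(round(disparate_impact_ratio(preds, groups, unprivileged="b", privileged="a"), 3))  # 0.333

edges = [0.0, 0.25, 0.5, 0.75, 1.0]
baseline = [0.1, 0.3, 0.4, 0.6, 0.7, 0.9]
live = [0.6, 0.7, 0.8, 0.85, 0.9, 0.95]
psi = population_stability_index(baseline, live, edges)
print(drift_status(psi))  # significant
```

A production risk tool would typically add confidence intervals, several complementary fairness metrics, and per-feature drift checks; the point here is only that each probe reduces to a number that can be compared against a threshold set by governance policy.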

Core functions of an AI risk management tool include:

- Conducting risk assessments to identify vulnerabilities and estimate their likelihood and impact.

- Monitoring algorithmic performance for robustness and fairness against predefined risk thresholds.

- Mitigating risks like data breaches or biased decision-making through implemented controls and strategies.

- Performing security testing of AI models for vulnerabilities to adversarial attacks.
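Risk assessments of this kind commonly score each risk as likelihood multiplied by impact on ordinal scales and then rank the results so mitigation effort goes where it matters most. The minimal sketch below assumes 1-to-5 scales and illustrative tier cut-offs (15 and 8); the `Risk` class and the example register entries are hypothetical, and an organization's governance policy would define its own scales and thresholds.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    # Hypothetical risk-register entry; the 1-5 ordinal scales are an assumed convention.
    risk_id: str
    description: str
    likelihood: int  # 1 (rare) to 5 (almost certain)
    impact: int      # 1 (negligible) to 5 (severe)

    def __post_init__(self):
        # Reject values outside the assumed scale.
        for name in ("likelihood", "impact"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        return self.likelihood * self.impact

    @property
    def tier(self) -> str:
        # Illustrative cut-offs; governance policy would set the real ones.
        if self.score >= 15:
            return "high"
        if self.score >= 8:
            return "medium"
        return "low"


def prioritize(risks):
    """Order risks from highest to lowest score."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


register = [
    Risk("R1", "Bias against a protected group in loan approvals", likelihood=3, impact=5),
    Risk("R2", "Accuracy degradation from data drift", likelihood=4, impact=3),
    Risk("R3", "Evasion via adversarial inputs", likelihood=2, impact=2),
]
for r in prioritize(register):
    print(r.risk_id, r.score, r.tier)
# R1 15 high
# R2 12 medium
# R3 4 low
```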


Why You Need Both

When talking about an AI governance platform vs an AI risk management tool, the “versus” is merely rhetorical; in practice, the pitfalls of having one without the other can significantly impair your business’s AI viability. Let’s delve into the “why” before we take a look at the “how” of integrating the two approaches.

If you grant us the luxury of metaphors, AI governance without AI risk management is like a meticulously planned voyage with no lookout.

Imagine a shipping company with extensive regulations (governance). They have detailed manuals for ship operations, strict policies on cargo loading, clear navigation routes planned years in advance, elaborate reporting structures, and ethical guidelines for crew conduct. Everything looks perfectly organized and compliant on paper. However, the company has cut costs on actual ship maintenance inspections, doesn’t employ skilled lookouts to watch for icebergs or other ships, hasn’t tested the lifeboats recently, and doesn’t actively monitor weather patterns (no risk management). The captain follows the procedures precisely, but no one is actively looking for, assessing, or mitigating the specific, real-time dangers the ship might encounter.

You might have comprehensive policies on data privacy, ethical AI principles posted on the company website, and detailed workflows for model approval. But without active risk management, no one is actually testing models for bias before deployment, scanning for security vulnerabilities, monitoring model performance for drift or unexpected harmful outcomes, or assessing the real-world impact on users. The rules exist, but the practical steps to find and fix specific dangers are missing, leaving the organization exposed despite its well-intentioned policies. Your governance is merely a “paper tiger.”

Back within the realm of metaphors, let’s imagine the reverse scenario: AI risk management without AI governance is like an expert firefighting squad in a lawless city.

Picture a city full of incredibly skilled and well-equipped firefighters. They can detect a fire starting anywhere (risk identification), assess its potential spread (risk assessment), and deploy the best techniques and equipment to put it out quickly (risk mitigation). However, this city has no building codes, no zoning laws, no fire safety regulations, no arson investigation unit, and no overall city planning (no governance). So, while individual fires (AI model failures/biases) are expertly handled after they start, new buildings are constructed with flammable materials right next to fireworks factories, electrical grids are dangerously overloaded, and there’s no strategy to prevent fires from starting in the first place or to ensure structures are inherently safe.

You might have teams great at detecting bias in a deployed model or fixing a security flaw after it’s found. But without governance, there are no rules about using inherently biased datasets, no required ethical reviews before deployment, no standardized security protocols during development, and no overall strategy ensuring AI is built and used responsibly from the start. You’re constantly putting out fires without addressing why they keep starting.

In short, focusing solely on one area creates critical gaps, leaving an organization dangerously exposed. For professionals in highly regulated sectors, the need for both is non-negotiable.

Regulators demand:

- Demonstrable oversight: Clear policies, accountability, documentation, and ethical considerations (Governance).

- Proof of control: Evidence of specific risk assessments, bias testing, security validations, ongoing monitoring, and mitigation actions (Risk Management).

Failing on either front can lead to severe consequences: hefty fines, license revocation, loss of customer trust, operational disruptions, and significant reputational damage.

Overlap and Integration

While an AI governance platform provides the overarching framework for responsible AI use, an AI risk management tool dives deeper into the specific operational risks that could jeopardize compliance or ethical standards. Together, they form a holistic approach to managing AI systems effectively. This view is not ours alone: industry analyses such as Gartner’s AI TRiSM (AI trust, risk, and security management) framework stress the business importance of addressing these issues holistically and point to the emergence of platforms designed to manage both governance policies and technical risks.

How do the two work together?

The goal is a tightly integrated ecosystem where governance and risk management continuously inform each other:

- An effective AI governance platform must incorporate robust risk management capabilities or integrate seamlessly with dedicated AI risk management tools.

- Data generated by risk management tools (e.g., risk assessments, bias reports) feeds into the governance platform for oversight, reporting, and policy enforcement.

- Governance policies dictate which risks need to be managed and how they should be assessed and mitigated using risk management processes and tools, while leaving room for innovation.
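The two-way flow described above can be sketched as follows: a risk tool reports findings into a governance record (evidence flowing up), and a governance policy decides which assessments a model's risk tier requires (requirements flowing down). The tier names loosely echo EU AI Act categories, but the `REQUIRED_ASSESSMENTS` mapping, the `Finding` and `GovernanceRecord` classes, and the assessment names are all hypothetical illustrations, not any product's API.

```python
from dataclasses import dataclass, field

# Hypothetical governance policy: which assessments each risk tier requires.
REQUIRED_ASSESSMENTS = {
    "high": {"bias", "explainability", "adversarial_robustness", "drift_monitoring"},
    "limited": {"bias", "drift_monitoring"},
    "minimal": {"drift_monitoring"},
}


@dataclass
class Finding:
    # A result reported by a risk management tool for one assessment.
    assessment: str
    passed: bool
    detail: str = ""


@dataclass
class GovernanceRecord:
    # What the governance platform keeps per model: its tier and submitted evidence.
    model: str
    risk_tier: str
    findings: list[Finding] = field(default_factory=list)
    audit_log: list[str] = field(default_factory=list)

    def ingest(self, finding: Finding) -> None:
        """Risk tool -> governance platform: store the evidence and log the event."""
        self.findings.append(finding)
        outcome = "pass" if finding.passed else "fail"
        self.audit_log.append(f"ingested {finding.assessment}: {outcome}")

    def compliance_gaps(self) -> dict[str, list[str]]:
        """Governance -> risk tool: required assessments that are missing or failing."""
        required = REQUIRED_ASSESSMENTS[self.risk_tier]
        # Later findings for the same assessment overwrite earlier ones.
        latest = {f.assessment: f.passed for f in self.findings}
        missing = sorted(required - set(latest))
        failing = sorted(a for a in required if latest.get(a) is False)
        return {"missing": missing, "failing": failing}


record = GovernanceRecord(model="credit-scoring:1.4.0", risk_tier="high")
record.ingest(Finding("bias", passed=False, detail="disparate impact ratio 0.72"))
record.ingest(Finding("drift_monitoring", passed=True))
print(record.compliance_gaps())
# {'missing': ['adversarial_robustness', 'explainability'], 'failing': ['bias']}
```

Because the governance side only states requirements and the risk side only reports results, either component can be swapped for a dedicated tool as long as both agree on the assessment names.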

Using both an AI governance platform and an AI risk management tool benefits the organization in several ways:

- Comprehensive oversight: Governance platforms ensure alignment with organizational goals and societal values, while risk tools handle vulnerabilities that could undermine these objectives.

- Regulatory compliance: Governance frameworks establish policies for compliance; risk tools monitor adherence to these policies in real time. The EU AI Act requires risk management systems and technical documentation for high-risk AI systems, and voluntary frameworks such as the NIST AI RMF and ISO/IEC 42001 call for the same combination, bridging policy (governance) with technical implementation (risk management).

- Ethical assurance: Governance platforms promote fairness and accountability; risk tools identify biases or anomalies that could compromise ethical standards.

- Operational efficiency: Risk tools streamline risk mitigation processes, while governance platforms ensure these processes align with broader organizational strategies.

- Stakeholder trust: Governance platforms build trust through transparency and accountability; risk tools reinforce this trust by ensuring systems remain secure and unbiased.

AI governance platforms provide the strategic foundation for responsible AI deployment, while AI risk management tools address specific vulnerabilities at a tactical level. Organizations need to balance innovation with safety, ensuring that their AI initiatives deliver value responsibly and securely in a rapidly evolving technological landscape.

The Path Forward: Towards Integrated Assurance

As the scenarios above show, relying on either function alone is problematic. You need the overarching rules, strategy, and ethical framework (governance) as well as the practical, ongoing processes to identify and mitigate specific dangers (risk management) for AI to be truly safe, effective, and trustworthy.

The ideal state integrates these two functions seamlessly. When choosing an integrated AI governance and risk management solution, CIOs, CTOs, and risk leaders should critically evaluate questions such as:

- Does our governance platform provide a comprehensive view, manage policies effectively, and integrate compliance workflows?

- Does it effectively incorporate or integrate with tools and processes for specific risk management tasks like bias detection, explainability, security testing, and ongoing monitoring?

- When evaluating new tools or platforms, do they integrate seamlessly into our existing ecosystem and support automated workflows between governance and risk functions?

- Are our technology, risk, legal, and compliance teams collaborating effectively within this framework?

Conclusion

AI offers immense potential, but responsible adoption, especially under regulatory scrutiny, demands a dual focus. Don’t treat governance and risk management as an either/or proposition. By embracing both AI governance and AI risk management, we can move beyond simply managing AI initiatives to truly leading them, ensuring innovation, compliance, and resilience for the future of our enterprises.

By architecting an integrated approach that combines a strong governance framework with rigorous technical risk controls, we can build AI systems that are not only innovative but also demonstrably safe, fair, secure, and compliant – earning the trust of regulators, customers, and the business landscape itself.

Ready to see how an integrated platform can address your specific industry’s AI governance and risk management challenges? Schedule a demo to explore how Lumenova’s solutions are architected for the technical demands and regulatory realities of future-forward, AI-driven organizations.
