Ever wondered how your company can embrace the transformative power of AI without risking your valuable data or inadvertently perpetuating biases? It’s a question keeping many leaders up at night, and for good reason.

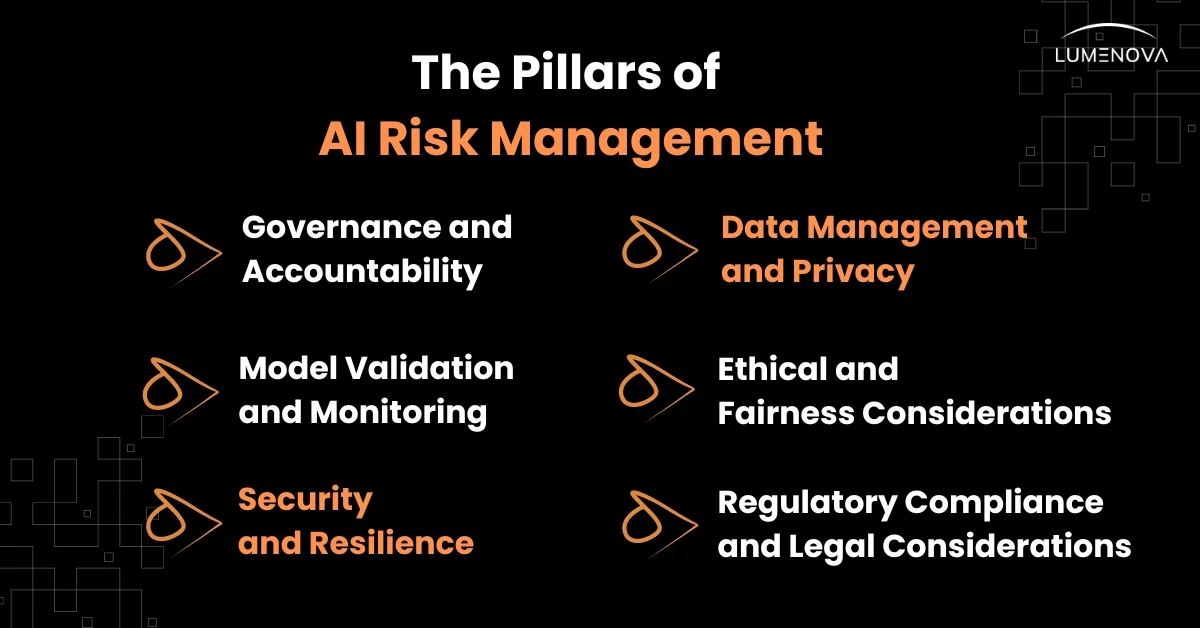

As businesses and organizations continue to integrate AI into their operations, it is increasingly important to ensure that data privacy & security are maintained. AI risk management involves identifying, assessing, and mitigating the potential risks associated with AI technologies. These include risks related to models, data, cybersecurity, and legal compliance. Data-related risks center on three areas: data privacy, bias and fairness in algorithmic decision-making, and the reliability of AI outputs.

A proactive approach to risk mitigation is essential to minimize the potential impact of AI-related risks. By implementing a comprehensive AI risk management framework, addressing data privacy and security concerns, and ensuring compliance with relevant regulations, organizations can prepare for potential risks and ensure the responsible use of their AI systems.

Understanding AI Risks

AI technology brings with it a range of risks, and without adequate risk management, these risks can result in serious consequences for individuals and organizations alike. Understanding these risks is the first step in ensuring data privacy and security when implementing AI technology.

One of the biggest concerns with AI is data privacy, as AI systems often handle sensitive personal data that can be exposed in privacy breaches. In October 2023, the Biden administration issued Executive Order 14110 on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence, which included provisions for protecting personal privacy and ensuring that AI systems are transparent and accountable.

Data breaches are a significant concern, as AI systems often process large volumes of sensitive information. A breach can lead to unauthorized access to personal data, resulting in privacy violations and financial losses. Misuse of data carries similar costs: the Cambridge Analytica scandal, in which personal data from millions of Facebook users was harvested without their consent and used for political profiling, showed how data misuse can undermine public trust in data-driven technologies such as AI.

Establishing an AI Use Policy

One of the most important steps in ensuring data privacy and security is to establish an AI use policy. This policy should outline the intended uses of your AI systems and the steps to ensure these systems are secure and reliable.

An effective AI use policy involves setting up governance structures to oversee AI implementation and use. This includes appointing a dedicated team responsible for monitoring AI activities and ensuring compliance with ethical standards. Regular audits and reviews of AI systems can help identify potential issues and maintain accountability. By establishing such a policy, organizations can build a robust framework for managing AI risks and protecting user data. In addition to establishing an AI use policy, it is important to take proactive steps to mitigate risk.

Proactive Risk Mitigation

A proactive approach to risk management lets us address identified risks before they cause harm, through measures such as:

- data minimization and user consent

- conducting thorough privacy impact assessments (PIAs)

- establishing robust security measures

- employing Explainable AI (XAI) to ensure transparency

Data Minimization and User Consent

Collecting only the data that is needed, and ensuring that users are fully informed and have given their consent, limits how much personal information an AI system can expose or misuse. The European Union’s General Data Protection Regulation (GDPR) sets stringent requirements for data minimization and user consent and serves as a model for data privacy practices worldwide.

- Data minimization involves collecting only the data that is necessary for a specific purpose. This reduces the risk of data breaches and unauthorized access. Organizations should implement policies that limit data collection and retention, ensuring that personal information is not stored longer than required. Techniques such as anonymization and pseudonymization can further enhance data privacy.

- User consent is equally important in maintaining trust. Organizations should provide clear and concise information about how data will be used, giving users the option to opt-in or opt-out. Consent should be obtained through transparent and straightforward processes, avoiding any deceptive practices. By respecting user choices, organizations can build stronger relationships with their customers and stakeholders.
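The two practices above can be combined in a short sketch. It assumes a hypothetical allowlist (`ALLOWED_FIELDS`) that maps each processing purpose to the fields it genuinely needs, a hypothetical `ConsentRegistry` that records opt-ins per purpose, and pseudonymization via a keyed hash (HMAC-SHA256) from Python's standard library. All names and fields are illustrative; this is a sketch under those assumptions, not a compliance-ready implementation.

```python
import hashlib
import hmac
from dataclasses import dataclass, field

# Hypothetical allowlist: each processing purpose may use only these fields.
ALLOWED_FIELDS = {
    "churn_model": {"customer_id", "tenure_months", "monthly_spend"},
}

# Assumption: a real key would come from a secrets manager and be stored
# separately from the pseudonymized data.
PSEUDONYM_KEY = b"replace-with-a-secret-key"


def pseudonymize(value: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256).

    The same input always yields the same token, so records can still be
    joined, but tokens cannot be linked back to a person without the key.
    """
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


@dataclass
class ConsentRegistry:
    """Hypothetical record of which purposes each user has opted in to."""
    grants: dict = field(default_factory=dict)  # user ID -> set of purposes

    def opt_in(self, user_id: str, purpose: str) -> None:
        self.grants.setdefault(user_id, set()).add(purpose)

    def opt_out(self, user_id: str, purpose: str) -> None:
        self.grants.get(user_id, set()).discard(purpose)

    def allows(self, user_id: str, purpose: str) -> bool:
        # Deny by default: no record means no consent.
        return purpose in self.grants.get(user_id, set())


def prepare_record(record: dict, purpose: str, consent: ConsentRegistry):
    """Return a minimized, pseudonymized copy of `record`, or None without consent."""
    if not consent.allows(record["customer_id"], purpose):
        return None
    allowed = ALLOWED_FIELDS[purpose]
    # Keep only the fields this purpose needs (data minimization).
    minimized = {k: v for k, v in record.items() if k in allowed}
    minimized["customer_id"] = pseudonymize(minimized["customer_id"])
    return minimized


consent = ConsentRegistry()
consent.opt_in("c-001", "churn_model")
raw = {"customer_id": "c-001", "email": "a@example.com",
       "tenure_months": 14, "monthly_spend": 42.0}
print(prepare_record(raw, "churn_model", consent))  # email dropped, ID hashed
```

Keying the hash with a secret, rather than using a plain hash, makes it harder to re-identify people by hashing guessed identifiers. Keep in mind that under the GDPR pseudonymized data still counts as personal data, because whoever holds the key can re-link it; only data that can no longer reasonably be re-identified is treated as anonymous.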

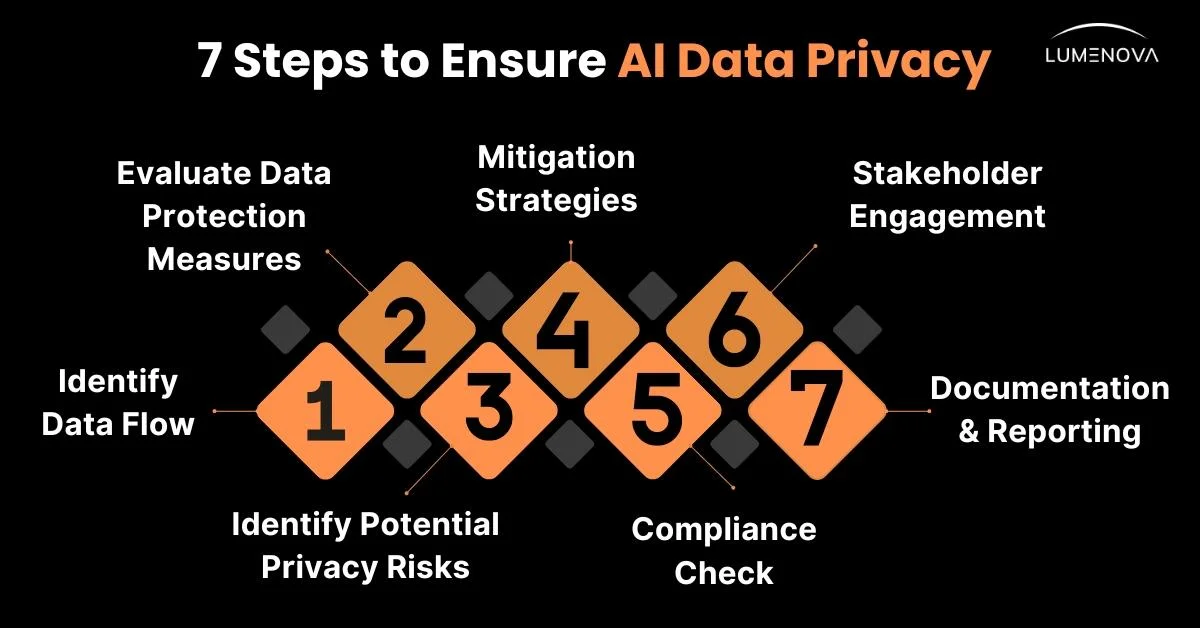

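Privacy Impact Assessments

A privacy impact assessment (PIA) evaluates how an AI system collects, uses, and protects personal data. Under the GDPR, a data protection impact assessment (DPIA) is mandatory for processing that is likely to result in a high risk to individuals. A typical assessment includes the following steps: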

- Identify Data Flows: Understand how data moves through your AI systems, from collection to storage and processing. This helps in pinpointing where sensitive data is handled.

- Evaluate Data Protection Measures: Assess existing security controls and data protection measures. Determine if they are adequate to safeguard personal data.

- Identify Potential Privacy Risks: Highlight areas where data privacy could be compromised, such as through unauthorized access or data breaches.

- Mitigation Strategies: Develop strategies to mitigate identified risks. This may involve enhancing encryption methods, improving access controls, or anonymizing data.

- Compliance Check: Ensure that your AI systems comply with relevant data protection regulations, such as GDPR and CCPA. This involves regular audits and updates to privacy policies.

- Stakeholder Engagement: Involve stakeholders, including data subjects and regulatory bodies, in the assessment process to ensure transparency and accountability.

- Documentation and Reporting: Document the PIA process and findings thoroughly. Report any significant risks and the steps taken to mitigate them to relevant stakeholders and authorities.
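Several of the PIA steps above (mapping data flows, checking protection measures, flagging risks, and documenting findings) can be sketched as a simple inventory check. The `DataFlow` fields, the `PERSONAL_FIELDS` set, the 365-day retention limit, and the specific rules are all hypothetical choices for illustration; a real assessment would rely on the organization's own data classification and legal advice.

```python
from dataclasses import dataclass

# Hypothetical set of fields this sketch treats as personal data.
PERSONAL_FIELDS = {"name", "email", "phone", "address", "date_of_birth"}
MAX_RETENTION_DAYS = 365  # assumption: an organization-specific policy limit


@dataclass(frozen=True)
class DataFlow:
    name: str
    data_fields: frozenset     # fields carried by this flow
    destination: str           # "internal" or the name of a third party
    encrypted_in_transit: bool
    encrypted_at_rest: bool
    retention_days: int


def assess(flow: DataFlow) -> list:
    """Return privacy risk findings for one data flow."""
    personal = sorted(flow.data_fields & PERSONAL_FIELDS)
    if not personal:
        return []  # no personal data, nothing to flag in this sketch
    findings = []
    if not flow.encrypted_in_transit:
        findings.append(f"{personal} sent without encryption in transit")
    if not flow.encrypted_at_rest:
        findings.append(f"{personal} stored without encryption at rest")
    if flow.retention_days > MAX_RETENTION_DAYS:
        findings.append(f"retained {flow.retention_days} days (limit {MAX_RETENTION_DAYS})")
    if flow.destination != "internal":
        findings.append(f"shared with {flow.destination}: confirm legal basis and contract")
    return findings


def pia_report(flows) -> dict:
    """Document findings per flow so they can be reported to stakeholders."""
    return {flow.name: assess(flow) for flow in flows}


flows = [
    DataFlow("crm_export", frozenset({"email", "tenure_months"}), "vendor_x",
             encrypted_in_transit=False, encrypted_at_rest=True, retention_days=730),
    DataFlow("feature_store", frozenset({"tenure_months", "monthly_spend"}), "internal",
             encrypted_in_transit=True, encrypted_at_rest=True, retention_days=90),
]
for name, findings in pia_report(flows).items():
    print(name, findings or "no findings")
```

Keeping the inventory as structured data means the same report can be rerun after every change to the system, which supports the regular audits mentioned in the compliance step.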

Robust Security Measures

Effective access controls involve restricting access to sensitive data and AI systems to authorized personnel only. This can be achieved through multi-factor authentication, role-based access controls, and encryption. Additionally, monitoring systems should be in place to detect and respond to unauthorized access attempts, ensuring that any security breaches are promptly addressed.
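Role-based access control with a deny-by-default rule can be sketched in a few lines. The roles and permission names below are hypothetical, and the sketch assumes multi-factor authentication has already been verified elsewhere and is passed in as a flag. Refused attempts are logged so that the monitoring systems mentioned above have something to act on.

```python
from dataclasses import dataclass, field

# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS = {
    "data_scientist": {"read:features", "run:training"},
    "ml_engineer": {"read:features", "deploy:model"},
    "privacy_officer": {"read:raw_pii", "read:audit_log"},
}


@dataclass
class AccessController:
    user_roles: dict                             # user -> set of roles
    denied: list = field(default_factory=list)  # log of refused attempts

    def is_allowed(self, user: str, permission: str, mfa_verified: bool) -> bool:
        # Deny by default: access requires verified MFA AND a role granting it.
        allowed = mfa_verified and any(
            permission in ROLE_PERMISSIONS.get(role, set())
            for role in self.user_roles.get(user, set())
        )
        if not allowed:
            # Recorded so that monitoring can spot repeated unauthorized attempts.
            self.denied.append((user, permission))
        return allowed


ac = AccessController({"alice": {"data_scientist"}})
print(ac.is_allowed("alice", "read:features", mfa_verified=True))  # True
print(ac.is_allowed("alice", "read:raw_pii", mfa_verified=True))   # False
print(ac.denied)  # [('alice', 'read:raw_pii')]
```

Granting permissions to roles rather than to individual users keeps the policy small and auditable: changing what a role can do updates every user who holds it.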

Regular security audits are essential for maintaining the integrity of AI systems. These audits can identify weaknesses and areas for improvement, helping organizations stay ahead of potential threats. Explainable AI (XAI), discussed below, can make these audits more thorough: by showing which data a model relies on and how that data is processed, it helps auditors see where security measures need strengthening. Collaborating with external security experts can also provide valuable insights and improve the effectiveness of security measures.

Organizations should also invest in training and awareness programs for employees, particularly in data-intensive sectors such as banking and investment, telecommunications, healthcare, and technology. By educating staff about AI risks and best practices for data security, companies can create a culture of vigilance and responsibility. Training programs can cover topics such as:

- recognizing phishing attempts

- securing personal devices

- understanding the importance of data encryption

Modernizing cybersecurity strategies with automation and AI can strengthen these measures further by making threats easier to detect and respond to. According to a 2023 study by IBM, this approach is key to protecting against costly data breaches, especially for retailers and consumer goods businesses. The report found that the retail and wholesale industry was the fifth-most targeted sector in 2022, with breaches costing millions of dollars on average.

Proactive risk mitigation also involves continuous monitoring of AI systems for potential threats. Organizations should employ advanced monitoring tools to detect anomalies and respond to security incidents promptly. Regular updates and patches to AI software can help address vulnerabilities and enhance system resilience.

Together, staff training, automated threat detection, and continuous monitoring reduce potential risks and ensure that all personnel have the knowledge needed to maintain strong security protocols.
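One simple form of the anomaly detection mentioned above compares each user's activity today with that user's own history. The sketch below flags users whose daily count (for example, records accessed) sits more than three standard deviations above their personal mean. The threshold, the `min_std` floor, and the function name are assumptions for illustration; production monitoring tools typically combine many more signals.

```python
from statistics import mean, stdev


def flag_anomalies(history, today, threshold=3.0, min_std=1.0):
    """Flag users whose activity today is far above their own baseline.

    history: user -> list of past daily counts (e.g., records accessed)
    today:   user -> today's count
    A user is flagged when (today - mean) / std exceeds `threshold`.
    `min_std` keeps users with very steady history from being flagged
    for tiny changes.
    """
    flagged = []
    for user, count in today.items():
        past = history.get(user, [])
        if len(past) < 2:
            # Too little history to estimate a baseline; skipped here
            # (a real system might route these users to manual review).
            continue
        z = (count - mean(past)) / max(stdev(past), min_std)
        if z > threshold:
            flagged.append((user, round(z, 1)))
    # Most anomalous users first.
    return sorted(flagged, key=lambda item: item[1], reverse=True)


history = {"alice": [20, 22, 19, 21, 18], "bob": [5, 6, 5, 7, 6]}
today = {"alice": 23, "bob": 60}
print(flag_anomalies(history, today))
```

Comparing each user against their own baseline, rather than a single global limit, avoids flagging people whose normal workload is simply heavier than average.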

Explainable AI for Transparency

Implementing XAI is also an important safeguard for data privacy and security. XAI systems are designed to be transparent, allowing users to understand how AI decisions are made. This transparency helps identify and mitigate risks related to data misuse or breaches, and it can help expose algorithmic bias so that AI systems remain fair and reliable.
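Explanations help reviewers see why a model reached a decision, but measuring bias usually needs a direct check as well. A common starting point is to compare selection rates (the share of favorable outcomes) across groups. The sketch below computes the demographic parity difference and the ratio of the lowest to the highest selection rate; the group labels and decisions are made up, and which fairness metric is appropriate depends on the use case.

```python
def selection_rates(decisions, groups):
    """Share of favorable decisions (1/True) within each group."""
    totals, favorable = {}, {}
    for decision, group in zip(decisions, groups):
        totals[group] = totals.get(group, 0) + 1
        favorable[group] = favorable.get(group, 0) + int(bool(decision))
    return {g: favorable[g] / totals[g] for g in totals}


def parity_report(decisions, groups):
    rates = selection_rates(decisions, groups)
    highest, lowest = max(rates.values()), min(rates.values())
    return {
        "rates": rates,
        # Demographic parity difference: 0 means equal selection rates.
        "parity_difference": highest - lowest,
        # Ratio of lowest to highest rate; the "four-fifths rule" used in
        # US employment contexts treats ratios below 0.8 as a warning sign.
        "impact_ratio": lowest / highest if highest else 1.0,
    }


decisions = [1, 1, 0, 1, 0, 0, 0, 1]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(parity_report(decisions, groups))
```

A large gap does not prove the model is unfair on its own, but it tells reviewers where to look, and per-decision explanations can then show which features drive the difference.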

Transparency in AI is essential for accountability. When AI systems make decisions that impact individuals or society, it is important to provide clear explanations for these decisions. XAI helps achieve this by making the inner workings of AI algorithms more understandable to users and stakeholders. Clear explanations of AI decision-making processes can reveal potential vulnerabilities and areas where data privacy might be compromised. This can lead to better-informed decision-making and increased trust in AI technologies.
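For a linear model, an explanation can be computed exactly: each feature's contribution to the log-odds is its weight times the feature's distance from a reference point, and the contributions add up to the difference between this prediction and the reference. The credit model, weights, and feature names below are hypothetical. For non-linear models, tools such as SHAP or LIME approximate this kind of per-feature attribution.

```python
import math

# Hypothetical credit model on standardized features; weights are illustrative.
WEIGHTS = {"income": 0.8, "debt_ratio": -1.5, "late_payments": -0.9}
INTERCEPT = 0.2
# Reference point for explanations, e.g. the training-set average
# (0 for every standardized feature in this sketch).
BASELINE = {"income": 0.0, "debt_ratio": 0.0, "late_payments": 0.0}


def predict_proba(x):
    """Probability of approval under the logistic model."""
    z = INTERCEPT + sum(w * x[f] for f, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def explain(x):
    """Per-feature contributions to the log-odds, relative to BASELINE.

    For a linear model these contributions add up exactly to the
    difference between this applicant's log-odds and the baseline's.
    """
    contributions = {f: w * (x[f] - BASELINE[f]) for f, w in WEIGHTS.items()}
    return sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)


applicant = {"income": 1.0, "debt_ratio": 0.5, "late_payments": 2.0}
print(f"approval probability: {predict_proba(applicant):.2f}")
for feature, value in explain(applicant):
    direction = "raised" if value > 0 else "lowered"
    print(f"{feature} {direction} the score by {abs(value):.2f} log-odds")
```

Output in this form ("late_payments lowered the score...") is the kind of plain-language explanation that can be shown to an affected individual or an auditor.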

Moreover, XAI can facilitate regulatory compliance. Some data protection laws, most notably the GDPR, give individuals the right to meaningful information about the logic involved in automated decisions that significantly affect them. By implementing XAI, organizations can meet these obligations and demonstrate their commitment to ethical AI practices. Explainable systems make data handling and decision-making transparent, which reduces legal risk and helps organizations avoid fines or sanctions for non-compliance.

Compliance and Collaboration

Compliance and collaboration are critical components of AI risk management. By collaborating with cybersecurity experts and regulatory bodies, organizations can ensure that their AI systems comply with relevant regulations and take all necessary steps to mitigate risk.

Regulatory compliance involves adhering to the laws and standards that govern AI use and data privacy. Again, these include regulations such as the EU’s GDPR and California’s CCPA, as well as industry-specific guidelines. Organizations should stay informed about regulatory changes and ensure that their AI practices align with up-to-date legal requirements.

Cybersecurity professionals play a crucial role in identifying vulnerabilities and crafting robust security strategies tailored to the unique challenges posed by AI technologies. Such partnerships not only fortify an organization’s defenses but also foster the development of industry-wide best practices and innovative solutions.

Lumenova AI exemplifies leadership in AI governance and risk management. We offer comprehensive, end-to-end solutions that navigate the complexities of AI implementation. Our commitment to transparency, accountability, and ethical AI practices ensures that your systems are secure, reliable, and trustworthy.

Reflective Questions

- How does your organization currently handle data privacy and security in AI systems, and where can improvements be made?

- What steps have you taken to ensure that your AI systems are transparent & explainable to both users and stakeholders?

- How do you involve stakeholders in the risk assessment and mitigation process for AI technologies?

Now we invite you to reflect on these questions and consider how Lumenova AI can help you strengthen your AI risk management practices. Book a demo to learn more about our comprehensive solutions and how we can support your organization’s journey towards responsible and secure AI implementation.

Conclusion

Organizations must ensure that their AI systems prioritize data privacy & security to protect sensitive information and maintain user trust.

To protect that data and maintain that trust, it is imperative to implement comprehensive AI risk management frameworks that encompass risk identification, assessment, mitigation, and ongoing monitoring.

Additionally, AI systems should undergo rigorous testing and validation before deployment to ensure they operate as intended and do not produce adverse outcomes.