
The fusion of artificial intelligence (AI) and content creation is quickly transforming cyberspace. AI-powered tools are now capable of generating everything from marketing copy and social media posts to news articles and even creative works. While this technological leap offers unprecedented efficiency and potential, it simultaneously raises a myriad of legal and ethical concerns that demand careful consideration. Questions surrounding intellectual property (IP) rights, ownership, and authorship inevitably come to the forefront. Traditional legal frameworks may struggle to keep pace with this evolving technology, leaving creators, businesses, and policymakers grappling with how to navigate copyright, plagiarism, and licensing issues.

Furthermore, the potential for AI-generated content to perpetuate biases, spread misinformation, and manipulate public opinion raises significant ethical concerns. Therefore, ensuring transparency, accountability, and responsible AI use in content creation is paramount to maintaining trust and upholding ethical standards in the technological age. All things considered, the rise of AI-generated content presents a complex landscape where innovation and regulation must find a delicate balance. To fully harness the potential of AI while mitigating its risks, it is imperative to address the legal and ethical challenges head-on, fostering a responsible and transparent approach to algorithmic content generation.

The Fundamentals of AI-Generated Content

At its core, AI refers to computer systems that can perform tasks that typically require human intelligence, such as learning, problem-solving, and decision-making. In the context of content creation, AI algorithms are trained on vast datasets of existing content, learning patterns and structures to generate new material that mimics human language and style.

Intellectual Property Rights

The question of ownership emerges as a central concern in the realm of AI-generated content, casting a shadow over IP rights. Who rightfully claims ownership of content born from the depths of an AI algorithm? Is it the creator of the algorithm itself, the individual or entity responsible for training the AI model, or the user who prompts the AI to generate the content? These questions, steeped in legal intricacies, demand careful consideration and nuanced solutions to protect the interests of all parties involved.

Copyright Ownership

In the U.S., the Copyright Office has stated that works containing AI-generated content are not copyrightable without evidence of human authorship. This suggests that AI-generated works lacking sufficient human input may not be eligible for copyright protection at all. However, the matter is far from straightforward, raising a profound question: “How do we demonstrate human authorship in the age of AI?”

Determining the extent of human involvement in AI-generated content is inherently complex. Traditional measures of authorship do not neatly apply when human and machine contributions are intertwined. If an AI-generated work demonstrates originality and uniqueness as a result of human direction or curation, some argue it may qualify for copyright, with ownership attributed to the human author. The key factor is the level of human creativity involved in guiding and shaping the AI’s output.

Yet quantifying this human input remains challenging. There is currently no precise way to measure how much human influence went into creating a specific piece of content. As AI technologies advance, the boundary between human and machine contributions blurs further, making claims of human authorship increasingly difficult to assess and validate.

Interestingly, copyright approaches to AI works vary across jurisdictions. While the U.S. requires human authorship, the UK and New Zealand protect computer-generated works, treating the person who made the arrangements necessary for the work’s creation as its author.

Patent Eligibility

Patent eligibility adds another layer of complexity, particularly concerning the novelty and non-obviousness of AI-generated content. While patents reward inventors of new and useful inventions, determining whether AI-generated content is patentable is challenging. To assess eligibility for patent protection and navigate the complexities of patent law, consulting a patent attorney is highly recommended.

In the U.S., the USPTO has clarified that while an AI system cannot be listed as an inventor on a patent application, a human’s use of AI in the invention process does not prevent patentability. The key is demonstrating that a human made a significant contribution to the invention and that the invention meets the standard criteria of novelty, non-obviousness, and utility.

Determining novelty and non-obviousness can be especially challenging when AI is involved. If AI-generated content publicly discloses details of an invention before a patent application is filed, that disclosure may count as prior art, undermining the invention’s novelty or rendering it obvious. For example, an AI system trained on existing pharmaceutical patents might generate a marketing description that inadvertently discloses key details of a new drug formulation before a patent application is filed, threatening its patentability.

Additionally, AI-generated artistic works, such as paintings or music, fall under copyright rather than patent law, and they may face scrutiny regarding their originality. If an AI system relies heavily on existing copyrighted works or styles, the resulting creation might not be considered sufficiently original to warrant protection.

Trademark Considerations

While AI itself cannot own trademarks because it lacks legal personhood, issues can arise when AI-generated content used for commercial purposes incorporates existing trademarks. Unauthorized use of trademarks in AI-generated content may constitute infringement.

However, it’s important to note that the mere presence of another entity’s trademarks in AI-generated output does not always imply infringement. The context and manner in which the trademarks are used play a significant role in determining whether infringement has occurred.

Examples:

- Apple vs. Prepear: Apple claimed a pear logo was too similar.

- Louis Vuitton vs. My Other Bag: Parody canvas bags weren’t deemed infringing.

- Starbucks vs. DUMB STARBUCKS: Starbucks objected to the parody shop’s use of its trademarked name and branding.

Factors such as likelihood of confusion, dilution, and fair use must be considered. Likelihood of confusion asks whether consumers might mistake one brand for another or wrongly assume the two are connected. Dilution protects famous marks from losing their distinctiveness, or being tarnished, when others use them, even where no confusion arises. Fair use permits legitimate uses, such as parody, commentary, or reviews, without infringing trademark rights. Together, these factors help determine whether a particular use of a trademark is permissible or crosses the line into infringement.

Interestingly, while AI-generated images may not qualify for copyright protection due to a lack of human authorship, this doesn’t mean they can’t be trademarked. As long as an AI-generated logo meets the standard criteria for trademark registration, such as being distinctive, it can be registered and protected. AI can also be genuinely useful in the trademark realm: AI tools can search for and identify potential trademark conflicts, helping businesses clear and register their marks more effectively.

Liability Issues

Defamation and Misinformation

One of the biggest concerns with AI-generated content is the potential for defamation and misinformation. AI can generate content that is false or misleading, with serious consequences for individuals and businesses alike.

Establishing clear guidelines and regulations to curb the dissemination of misinformation and shield against defamation lawsuits is critical. For example, policies could require platforms to implement more stringent verification processes for content, enhance transparency about how AI-generated content is produced, and develop mechanisms to quickly remove or correct false information.

By doing so, we can mitigate the risks posed by AI-generated defamation and misinformation and ensure that content shared online is reliable and trustworthy.

Product Liability

Another potential liability issue with AI-generated content is product liability. If AI generates harmful or inaccurate content, the company that developed or deployed the AI system could be held liable for resulting damages or injuries. This could include anything from faulty medical diagnoses to financial advice that causes significant economic losses, or AI-generated legal documents containing errors that lead to legal disputes. Consequently, companies need clear protocols for testing and validating AI-generated content to ensure that it is safe and reliable.

Privacy Concerns

Data Protection

AI systems’ reliance on data poses substantial privacy risks. To comply with stringent data protection laws like the EU’s General Data Protection Regulation (GDPR), companies must handle data with the utmost care, collecting, storing, and using it ethically and transparently. Processing or sharing personal data without a valid legal basis, such as proper consent, is a recipe for legal trouble.

Biometric Information

Biometric data, such as facial and voice data, ventures into sensitive territory. Mishandling such information could result in severe privacy breaches. Unlike other types of data, biometric information is intrinsically linked to an individual’s unique physical and behavioral characteristics, making it irreplaceable and permanent. For instance, while passwords can be changed if stolen, compromised biometric data exposes individuals to persistent risks of identity theft and unauthorized surveillance.

In terms of compliance, regulations like the GDPR impose strict obligations on the collection, use, and protection of biometric data. From a data governance perspective, the unique nature of biometric data necessitates comprehensive strategies to safeguard its integrity and confidentiality. Therefore, companies must fortify their privacy measures, ensuring comprehensive compliance with regulations and business requirements while handling biometric data.

Anonymity and Pseudonymity

Another privacy concern related to AI-generated content is anonymity and pseudonymity. AI systems can be used to identify individuals based on their online behavior, even if they are not explicitly identified by name. This raises concerns about the right to privacy and the ability to remain anonymous or use pseudonyms online. Companies must ensure that they are not violating privacy rights by collecting and using data in ways that could lead to the identification of individuals who wish to remain anonymous or use pseudonyms.

Ethical Considerations

The ethical implications of AI-generated content can be broadly grouped into three domains:

1. Bias and Discrimination

Bias and discrimination are major risk factors for AI-generated content. Rooted in the very data that fuels these algorithms, bias can manifest in content that perpetuates harmful stereotypes or excludes marginalized groups. Mitigating this risk necessitates a commitment to diverse and representative datasets, fostering inclusivity and equity in content creation.

2. Transparency and Accountability

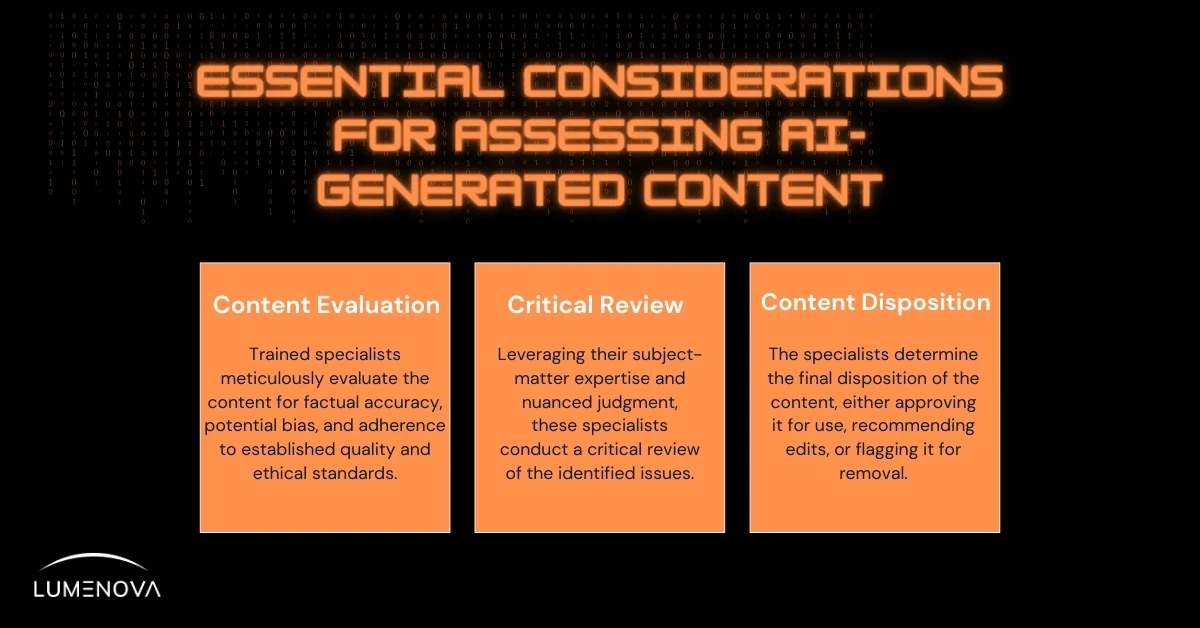

It is important to be transparent about the use of AI in content creation and to ensure that those who leverage AI to generate content are held accountable.

Providing clear information about the use of AI and ensuring that there are clear processes in place for reviewing, approving, and authenticating AI-generated content is a great place to start.
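To make the “reviewing, approving, and authenticating” step more concrete, one option is to keep a disclosure record for each AI-generated item. The sketch below is a minimal illustration under stated assumptions: the record format and its field names (`ai_generated`, `model_name`, `approved_by`) are hypothetical, not an industry standard, and the SHA-256 fingerprint simply lets a later check confirm that the published text is the same text a human approved.

```python
import hashlib
from dataclasses import dataclass
from typing import Optional


@dataclass
class DisclosureRecord:
    """Hypothetical provenance record kept for one piece of content."""
    content_sha256: str                # fingerprint of the exact text that was reviewed
    ai_generated: bool                 # whether an AI tool produced the draft
    model_name: Optional[str] = None   # which AI tool, if any (illustrative field)
    approved_by: Optional[str] = None  # human reviewer accountable for publication


def fingerprint(text: str) -> str:
    """SHA-256 hex digest of the UTF-8 encoded text."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def create_record(text: str, ai_generated: bool, model_name: Optional[str] = None) -> DisclosureRecord:
    return DisclosureRecord(fingerprint(text), ai_generated, model_name)


def approve(record: DisclosureRecord, reviewer: str) -> DisclosureRecord:
    """Mark the record as reviewed and approved by a named human."""
    record.approved_by = reviewer
    return record


def is_authentic(record: DisclosureRecord, published_text: str) -> bool:
    """True only if a human approved it and the text is unchanged since review."""
    return record.approved_by is not None and record.content_sha256 == fingerprint(published_text)


def disclosure_label(record: DisclosureRecord) -> str:
    """Reader-facing note that states plainly whether AI was involved."""
    if not record.ai_generated:
        return "Written without AI assistance."
    tool = record.model_name or "an AI tool"
    return f"Drafted with {tool}; reviewed by {record.approved_by or 'nobody yet'}."


# Usage (the model name and reviewer are placeholders)
draft = "Our quarterly product update."
record = approve(create_record(draft, ai_generated=True, model_name="ExampleModel"), "j.doe")
print(disclosure_label(record))                 # Drafted with ExampleModel; reviewed by j.doe.
print(is_authentic(record, draft))              # True
print(is_authentic(record, draft + " Edited"))  # False
```

Tying approval to a content hash means any edit made after review invalidates the record, so accountability stays attached to the exact text a person signed off on.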

3. Human Dignity and Autonomy

AI’s persuasive potential raises profound ethical concerns for human dignity and autonomy. Because content wields significant influence over thoughts and behaviors, preserving individual autonomy is paramount. Integrating human oversight into the content creation process helps keep output aligned with ethical principles, guards against undue manipulation, and upholds respect for human rights.

Regulatory Frameworks

National Legislation

Although few laws specifically target AI-generated content, jurisdictions like the European Union have implemented broader regulations that affect AI use. For example, the EU’s GDPR governs the processing of personal data, including data processed by AI systems. In contrast, the United States has yet to establish comprehensive, enforceable federal regulations targeting AI.

However, the Federal Trade Commission (FTC) has issued guidance on the ethical use of AI and the content it generates. These existing rules and guidance, while not focused exclusively on AI-generated content, set important precedents for managing its use and implications.

International Guidelines

International guidelines can also be used to manage AI-generated content. UNESCO has developed guidelines for the ethical use of AI, which include recommendations for AI-generated content.

- Transparency: It advocates for transparent AI systems, clearly disclosing how AI-generated content is created and the data/algorithms used.

- Inclusivity: It emphasizes the need for AI systems to promote diversity and inclusivity, ensuring content reflects a wide range of perspectives and avoids bias.

Similarly, the OECD has issued guidelines for the development and use of AI.

- Safety and Reliability: The OECD advises that AI systems, including those generating content, should be designed and rigorously tested to be safe and reliable, preventing harmful or misleading content.

- Human-Centric AI: The OECD guidelines stress that AI should be developed to enhance human capabilities and well-being, with AI-generated content supporting human interests and aligning with ethical standards.

Industry Self-Regulation

Industry self-regulation is voluntary and intended to ensure responsible and ethical use of AI within a given industry. For example, the Partnership on AI is a nonprofit coalition of companies, academic institutions, and civil society organizations that develops guidelines for the ethical use of AI.

3 Content Moderation Strategies

1. Automated Filtering

Automated filtering is a popular content moderation strategy that involves using algorithms to scan and filter content. This strategy can be effective in identifying and removing content that violates community guidelines or legal regulations. Automated filtering is especially useful when dealing with large volumes of content, as it can quickly identify and flag problematic content. However, it is not foolproof and can sometimes result in false positives or false negatives. Therefore, it’s important to have human oversight to ensure that the filtering is accurate.

2. Human Oversight

This approach relies on human moderators to review content flagged by automated filters. Human moderators can identify and remove content that violates community guidelines or legal regulations but was missed by automated systems, reinstate content that was flagged in error, and supply context and nuance when content is ambiguous or confusing.
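The division of labor between automated filtering and human oversight can be sketched as a simple routing rule. This is a minimal sketch under stated assumptions: the scoring function is a hypothetical keyword-based stand-in for a real trained classifier, and the two thresholds are illustrative values, not recommendations. High-confidence violations are removed automatically, ambiguous items go to a human review queue, and everything else is published.

```python
import re
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class Decision(Enum):
    PUBLISH = "publish"
    HUMAN_REVIEW = "human_review"
    REMOVE = "remove"


# Illustrative thresholds: assumptions for this sketch, not recommended values.
REMOVE_THRESHOLD = 0.9
REVIEW_THRESHOLD = 0.5

# Hypothetical stand-in for a trained classifier: a tiny blocklist.
BLOCKLIST = {"scam", "counterfeit"}


def violation_score(text: str) -> float:
    """Toy score in [0, 1]: 0.5 per distinct blocklisted word, capped at 1.0."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    return min(1.0, 0.5 * len(words & BLOCKLIST))


def route(text: str) -> Decision:
    """Automated filter: acts on its own only in high-confidence cases."""
    score = violation_score(text)
    if score >= REMOVE_THRESHOLD:
        return Decision.REMOVE
    if score >= REVIEW_THRESHOLD:
        return Decision.HUMAN_REVIEW  # ambiguous: a moderator makes the call
    return Decision.PUBLISH


@dataclass
class ModerationQueue:
    pending_review: List[str] = field(default_factory=list)

    def submit(self, text: str) -> Decision:
        decision = route(text)
        if decision is Decision.HUMAN_REVIEW:
            self.pending_review.append(text)
        return decision


# Usage
queue = ModerationQueue()
print(queue.submit("Great recipe, thanks!").value)          # publish
print(queue.submit("Is this a scam?").value)                # human_review
print(queue.submit("Buy counterfeit bags, no scam").value)  # remove
print(queue.pending_review)                                 # ['Is this a scam?']
```

The second example shows the kind of false positive automated filters produce: a user asking whether something is a scam is not posting one. Sending the ambiguous middle band to a person, rather than removing it automatically, is what lets human oversight catch such errors.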

3. Community Governance

Community governance, similar to the system employed by Reddit, is a content moderation strategy that involves empowering the community to moderate content. This strategy is effective in promoting a sense of ownership and responsibility among community members. Community moderators can identify and remove content that violates community guidelines or legal regulations. However, community governance can be challenging to implement, especially in large communities. It’s important to have clear guidelines and policies in place to ensure that the community moderates content fairly and consistently.

Future Challenges and Opportunities

As AI continues to advance, managing AI-generated content will become increasingly challenging. There are several areas where future challenges and opportunities are likely to arise.

Advancements in AI Technology

As AI becomes more sophisticated, it will be able to generate increasingly complex and realistic content, creating new challenges for managing and regulating it. For example, deepfake videos, a form of AI-generated content that can be used to manipulate public opinion, are already a growing concern. In 2019, a fake AI-generated video of Facebook CEO Mark Zuckerberg surfaced, showing him supposedly discussing the power of controlling data. While the video was quickly debunked, it highlighted the potential for AI-generated content to spread disinformation and cause real-world harm.

Moreover, it will become increasingly difficult to distinguish authentic content from AI-generated fakes. This is where Lumenova comes in. Our platform uses advanced algorithms to detect and flag potentially misleading or harmful AI-generated content, helping businesses and organizations maintain the integrity of their content and protect their reputations.

Emerging Legal Theories

Some legal scholars argue that AI-generated content should be considered a form of speech and therefore protected by the First Amendment. The theory surfaced in a recent dispute involving OpenAI’s ChatGPT. In 2023, a debate emerged after a college student used ChatGPT to write an essay. When the student’s professor discovered the AI-generated content, the student faced academic consequences, sparking discussion about whether AI-generated work could be protected as free speech under the First Amendment. The university argued that the use of AI violated its academic integrity policies, highlighting the need for clear guidelines on how AI affects academic standards and originality in educational settings.

Alternative legal perspectives advocate stricter regulation of AI-generated content to curb its use in spreading disinformation or infringing on individuals’ privacy rights. Reflecting this concern, the European Union reached a provisional agreement on the AI Act in December 2023, and the regulation was formally adopted in 2024. The AI Act establishes a comprehensive framework for governing AI technologies, with a strong emphasis on transparency, accountability, and the protection of individual rights.

At the U.S. state level, California’s GenAI guidelines similarly recommend that state entities:

- Conduct Thorough Risk Assessments: Before integrating GenAI solutions, entities should evaluate potential risks, including the impact on privacy, security, and the possibility of generating harmful content.

- Promote Transparency & Accountability: These guidelines emphasize the importance of transparency in the deployment and use of GenAI systems. This includes clear documentation and disclosure of how AI systems function and their intended use.

As these legal theories continue to develop, they will create new challenges and opportunities for businesses and organizations that use AI-generated content. On one hand, stricter regulation could create new barriers and liabilities for companies that rely on AI-generated content. On the other hand, clearer legal guidelines could help businesses navigate this complex landscape with greater confidence and certainty.

Ultimately, the key to managing the legal risks of AI-generated content will be staying informed about emerging legal theories and working closely with legal experts and policymakers to ensure that generative AI practices are compliant and ethical. This may involve developing clear policies and guidelines for AI-generated content, investing in tools and technologies to detect and prevent misuse, and engaging in ongoing dialogue with stakeholders to build trust and transparency around your AI practices.

For more information on how regulations like the EU AI Act address AI-generated content, see Lumenova’s EU AI Act series on our blog.

Public Perception and Trust

As AI becomes more prevalent in our daily lives, building public trust will be essential. Transparency about how AI-generated content is created and used, along with clear guidelines for regulating its use, is critical.

Public opinion on AI varies widely: people trust it more for some tasks than others. For example, 79% of Americans said they would be uncomfortable with AI-generated news articles, while only 31% said they would be uncomfortable with AI-generated music. This suggests that public trust in AI-generated content depends heavily on the domain and that different industries may face different challenges in building trust with their audiences.

By openly acknowledging the use of AI and providing tools to help identify it, we empower users to make informed decisions. While current AI detection tools aren’t perfect and may sometimes misidentify human-written content, they represent a significant step towards greater transparency and accountability in this rapidly evolving field.

Another important factor in building public trust is the development of clear guidelines and standards for the use of AI-generated content. In 2020, the Partnership on AI released a set of guidelines for the responsible publication of AI-generated content. The guidelines emphasize the importance of transparency, accountability, and respect for IP rights.

Failure to build public trust in AI could lead to backlash and resistance in various industries. For example, the use of generative AI in search engines might lead to a decline in user trust. If search engines start to generate, index, and distribute content without human oversight, it could lead to a proliferation of misinformation and a loss of public trust in the accuracy and objectivity of search results.

To avoid these negative outcomes, businesses and organizations that use AI-generated content will need to prioritize building public trust through transparency, accountability, and adherence to ethical guidelines. This should involve investing in public education and outreach efforts to help people understand the benefits and limitations of various AI tools, as well as working closely with policymakers and other stakeholders to develop clear regulatory frameworks that balance innovation with the need to protect public interests.

Conclusion

While the future of AI in content management presents significant challenges, it also offers exciting opportunities. By staying ahead of technological advancements, adapting legal frameworks, and prioritizing ethical considerations and public trust, we can harness the power of AI to revolutionize content creation and management in a responsible and beneficial way. As Diogo Cortiz argues in AI Ethics, “The key to unlocking the potential of AI in content management lies in developing a human-centered approach that prioritizes transparency, accountability, and the promotion of human values.”

Take the first step towards shaping the future of AI-driven content management by exploring how Lumenova AI can help your business navigate this transformative landscape. Our team is here to support you every step of the way, ensuring that you have the knowledge, tools, and confidence you need to succeed in this new era of content creation and management.

If you’re ready to learn more, we invite you to explore our blog for in-depth insights, case studies, and expert advice on leveraging AI to transform your content strategy.