
Building on our previous discussion of AI governance, we continue exploring how businesses can align AI compliance with strategic objectives, with a particular focus on governance at enterprise scale.

A striking example of what happens when AI governance fails is the UK Department for Work and Pensions (DWP) fraud detection scandal. The DWP used an AI system to help detect welfare fraud, but an internal assessment disclosed in 2024 revealed that the system disproportionately flagged individuals based on age, disability, marital status, and nationality. The biased model exposed people to unfair investigations, caused distress, and eroded public trust. Public backlash and legal scrutiny forced the government to review its AI practices, highlighting the risks of deploying AI without proper human oversight and evaluation.

Enterprise organizations face similar risks on an even larger scale. With global operations, interconnected data ecosystems, and increasing regulatory scrutiny, even a single AI failure can lead to reputational damage, compliance violations, and financial penalties. Despite these high stakes, many enterprises still treat AI governance as an afterthought rather than a critical business function. AI oversight remains fragmented, compliance efforts are reactive, and governance frameworks often lack structure and consistency.

To address these challenges, businesses must treat AI governance not as a regulatory burden but as a strategic advantage. A well-structured AI governance model strengthens resilience, improves regulatory readiness, and supports sustainable growth in an AI-powered economy. This article explores how enterprises can turn AI governance into a competitive differentiator and build trustworthy, transparent, and compliant AI systems.

The Cost of Weak AI Governance in Enterprise Organizations

In 2016, the Australian government introduced an automated debt recovery system, widely known as “Robodebt,” to identify and reclaim overpaid welfare benefits. The system averaged annual income data from the Australian Taxation Office across fortnightly periods and compared it with the income recipients had reported, a method that often generated incorrect debt notices for people who had not been overpaid. The scheme caused significant public distress, prompted legal challenges, and ended in an A$1.2 billion class-action settlement by the government.

Although Robodebt was a public-sector system built on automated data matching rather than advanced AI, it underscores why robust governance matters for any automated decision-making. A lack of AI governance doesn’t just expose businesses to compliance risks; it fundamentally undermines trust both internally and externally. Enterprise organizations must grapple with three major consequences of poor AI governance:

1. Operational Risk: When AI Systems Disrupt Instead of Enable

Enterprises deploy AI to optimize decision-making, improve efficiency, and drive automation at scale. However, without proper governance, AI can introduce operational vulnerabilities that create more problems than they solve.

A clear example is the Apple Card’s credit assessment system. Launched in 2019, the Apple Card leveraged AI to combine traditional credit-scoring factors with additional data points to determine credit limits. Soon after its release, however, reports surfaced that women were receiving significantly lower credit limits than men, even when they had similar or identical financial profiles. This sparked allegations of algorithmic bias and raised concerns over the fairness and transparency of AI-powered lending. Although a 2021 investigation by the New York State Department of Financial Services found no violation of fair lending laws, the controversy damaged Apple’s reputation and showed how opaque, weakly governed AI systems can invite accusations of discrimination. Without proactive governance, compliance measures, and fairness audits, AI models can produce biased outcomes that expose organizations to legal risks, regulatory scrutiny, and customer distrust.

Beyond issues of fairness, poor AI governance can lead to fragmented AI deployment across business units. Different teams may implement AI solutions independently, without standardized oversight, leading to inconsistencies in compliance, security vulnerabilities, and conflicting outputs that disrupt enterprise-wide operations. When AI operates in silos, businesses struggle to maintain uniform risk management strategies, further increasing exposure to errors, inefficiencies, and regulatory failures.

Governance Challenge: Enterprises must ensure that AI operates within structured, standardized governance models that actively prevent foreseeable operational failures.
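One way to counter siloed deployments is a single, enterprise-wide inventory of AI systems that governance teams can scan for gaps. The Python sketch below assumes a hypothetical `AISystem` record and a hypothetical policy that every system needs an accountable owner and a governance review within the last year; the field names, the 365-day threshold, and the sample systems are illustrative, not part of any specific product.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

# Hypothetical record for one AI system in a central, enterprise-wide inventory.
@dataclass
class AISystem:
    name: str
    business_unit: str
    owner: Optional[str]         # accountable person; None means nobody is assigned
    last_review: Optional[date]  # date of the last governance review; None if never reviewed

def governance_gaps(systems: list[AISystem], today: date,
                    max_age_days: int = 365) -> dict[str, list[str]]:
    """Map each system that has a governance gap to the list of issues found."""
    gaps = {}
    for s in systems:
        issues = []
        if s.owner is None:
            issues.append("no accountable owner")
        if s.last_review is None:
            issues.append("never reviewed")
        elif today - s.last_review > timedelta(days=max_age_days):
            issues.append("review overdue")
        if issues:
            gaps[s.name] = issues
    return gaps

# Illustrative inventory spanning several business units.
inventory = [
    AISystem("credit-limit-model", "Consumer Lending", "j.doe", date(2024, 11, 2)),
    AISystem("churn-predictor", "Marketing", None, None),
    AISystem("invoice-ocr", "Finance", "a.lee", date(2023, 6, 30)),
]

print(governance_gaps(inventory, today=date(2025, 1, 1)))
# {'churn-predictor': ['no accountable owner', 'never reviewed'], 'invoice-ocr': ['review overdue']}
```

Because every business unit registers its systems in the same place, the same check applies uniformly instead of each team applying its own standard.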

2. Regulatory Risk: Navigating Global Compliance Without Disrupting Innovation

In May 2023, Meta Platforms Ireland Limited (Meta IE) was fined €1.2 billion by the Irish Data Protection Commission (DPC) for violating the General Data Protection Regulation (GDPR). This penalty was imposed due to Meta’s continued transfer of personal data from the EU/EEA to the United States without adequate safeguards.

Despite utilizing updated Standard Contractual Clauses (SCCs) and implementing supplementary measures, the DPC determined that Meta’s data transfer practices did not sufficiently protect EU users’ data, thereby breaching the GDPR. Consequently, Meta was ordered to suspend future data transfers to the U.S. within five months and to cease unlawful processing and storage of EU/EEA users’ personal data in the U.S. within six months.

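Although the Meta case concerned data transfers rather than an AI model, the lesson applies directly to AI: systems that are trained on or process personal data across borders inherit the same obligations. Organizations must proactively adapt to varying legal standards across jurisdictions to mitigate these risks and maintain stakeholder trust.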

Governance Challenge: Enterprise AI governance must be compliance-forward, embedding legal and ethical guardrails into AI development, deployment, and oversight.

3. Trust Erosion: The Invisible Cost of AI Failures

Enterprise trust takes years to build but can be lost quickly through a single AI failure.

Customers, investors, and business partners expect AI-driven decisions to be fair, unbiased, and explainable. But when enterprises deploy AI without clear governance, trust can erode in three key ways:

- Opaque AI Decision-Making: If an enterprise can’t explain why an AI-driven decision was made (e.g., hiring choices, pricing models, or risk assessments), stakeholders lose confidence.

- Data Privacy Violations: Enterprises collect massive amounts of data, but without strict data governance provisions, businesses risk compliance violations and consumer distrust.

- Bias and Discrimination: AI models trained on incomplete or biased datasets can reinforce discriminatory patterns, leading to legal challenges, regulatory intervention, and public backlash.

Governance Challenge: Enterprises need AI transparency frameworks that ensure explainability, fairness, and consumer trust in AI-driven processes.
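For simple, additive models, explainability can be as direct as reporting how much each input moved a decision. The sketch below assumes a hypothetical linear credit-scoring model with made-up weights and a "typical applicant" baseline, and turns the most negative per-feature contributions into reason codes that a non-technical reviewer can read.

```python
# Hypothetical linear credit-scoring model; the weights and baseline values are illustrative only.
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "late_payments": -0.8, "years_history": 0.1}
# Baseline: feature values of a "typical" applicant that explanations are measured against.
BASELINE = {"income_k": 60.0, "debt_ratio": 0.30, "late_payments": 1.0, "years_history": 8.0}

def explain(applicant: dict[str, float], top_n: int = 2) -> list[tuple[str, float]]:
    """Return the features that pushed this applicant's score down the most.

    For a linear model, weight * (value - baseline) is exactly how much each
    feature moved the score relative to the baseline applicant.
    """
    contributions = {
        feature: round(weight * (applicant[feature] - BASELINE[feature]), 3)
        for feature, weight in WEIGHTS.items()
    }
    # Most negative contributions first: these become plain-language "reason codes".
    return sorted(contributions.items(), key=lambda item: item[1])[:top_n]

applicant = {"income_k": 45.0, "debt_ratio": 0.55, "late_payments": 3.0, "years_history": 2.0}
for feature, impact in explain(applicant):
    print(f"{feature}: {impact:+.3f}")
# late_payments: -1.600
# debt_ratio: -0.750
```

This exact decomposition holds only for linear models. For more complex models, attribution methods such as SHAP approximate the same idea and would need their own validation before being shown to stakeholders.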

Enterprise AI Governance: A Strategic Approach

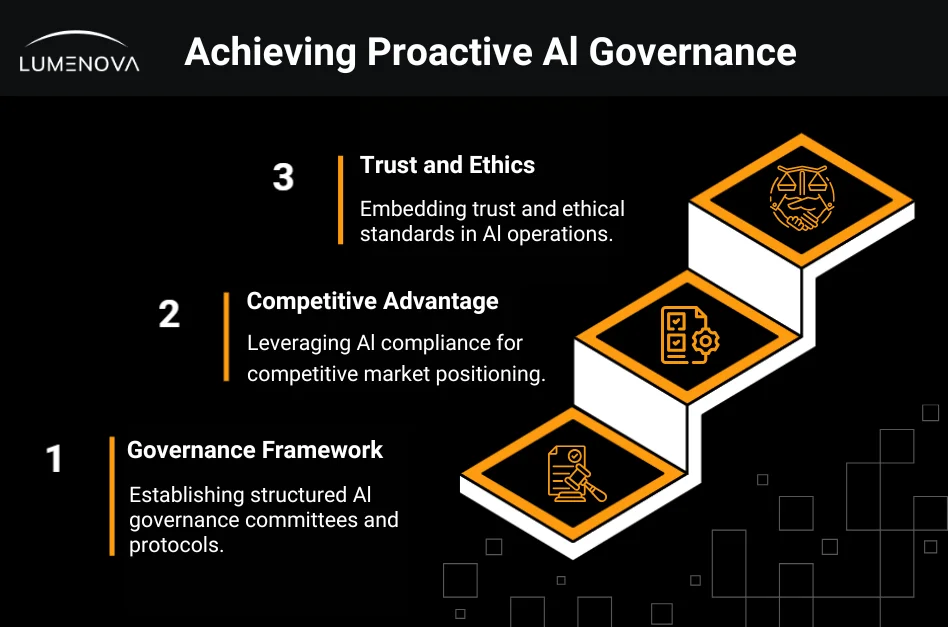

Rather than treating governance as a regulatory burden, enterprises must adopt a proactive AI governance model that enhances trust, compliance, and operational excellence.

1. Establishing an Enterprise AI Governance Framework

To ensure AI systems are transparent, accountable, and compliant, enterprises must embed AI governance into existing corporate structures. This requires:

- AI Governance Committees: Cross-functional teams comprising legal, compliance, IT, data science, and executive leadership.

- AI Risk Management Protocols: Formalized frameworks to assess AI risks before deployment, not after problems arise.

- Enterprise AI Audits: Regular evaluations of AI decision-making processes to ensure compliance and mitigate bias.

Why It Works: A structured governance framework ensures that AI aligns with enterprise-wide objectives rather than operating in siloed, unregulated environments.
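The risk management and audit elements above can be made concrete as a pre-deployment gate. In this minimal sketch, the risk tiers, the checks required per tier, and the in-memory `AUDIT_LOG` are hypothetical placeholders for an organization's own policy and record-keeping system.

```python
from datetime import datetime, timezone

# Hypothetical policy: higher-risk systems need more completed checks before deployment.
REQUIRED_CHECKS = {
    "low": {"model_card"},
    "medium": {"model_card", "bias_audit"},
    "high": {"model_card", "bias_audit", "explainability_review",
             "legal_signoff", "human_oversight_plan"},
}

# Stand-in for a durable audit trail; a real system would persist these records.
AUDIT_LOG: list[dict] = []

def deployment_gate(model: str, risk_tier: str, completed: set[str]) -> bool:
    """Approve deployment only if every check required for the tier is complete.

    An unknown risk tier raises KeyError, so the gate fails closed.
    Every decision, approved or not, is recorded for later audits.
    """
    missing = REQUIRED_CHECKS[risk_tier] - completed
    approved = not missing
    AUDIT_LOG.append({
        "model": model,
        "risk_tier": risk_tier,
        "approved": approved,
        "missing": sorted(missing),
        "timestamp": datetime.now(timezone.utc).isoformat(),
    })
    return approved

approved = deployment_gate("fraud-screening-v2", "high", {"model_card", "bias_audit"})
print(approved, AUDIT_LOG[-1]["missing"])
# False ['explainability_review', 'human_oversight_plan', 'legal_signoff']
```

Logging rejected attempts as well as approvals gives auditors a record of what was blocked and why, not only of what shipped.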

2. Turning AI Compliance into a Competitive Advantage

Instead of viewing compliance as a restriction, enterprises can position AI governance as a market differentiator. By integrating governance into business strategy, companies can:

- Move through regulatory reviews more smoothly and avoid disruptions in international markets.

- Demonstrate AI ethics leadership, reinforcing investor and stakeholder confidence.

- Build stronger consumer relationships by emphasizing data protection and AI transparency.

Why It Works: Organizations that lead in AI governance are seen as industry pioneers, rather than reactive participants in regulatory enforcement.

3. Embedding AI Trust and Ethics into Business Operations

To ensure AI enhances trust rather than erodes it, enterprises should implement:

- AI Explainability Standards: Ensuring that AI-driven decisions can be understood by non-technical stakeholders.

- Bias Mitigation Strategies: Proactively testing AI outputs to prevent discriminatory outcomes or decisions.

- Data Stewardship Policies: Strict governance on how enterprise AI systems collect, store, and process internal and external data.

Why It Works: A trust-first AI approach transforms governance from a risk-management tool into a business enabler.
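Bias testing of AI outputs often starts by comparing outcome rates across groups. The sketch below uses one widely cited screening heuristic, the "four-fifths rule" from the US Uniform Guidelines on Employee Selection Procedures, which flags any group whose selection rate falls below 80% of the most-favored group's rate. The group labels, data, and function names are hypothetical; a flag is a prompt for investigation rather than a legal finding, and a clean result does not by itself establish fairness.

```python
from collections import defaultdict

def selection_rates(outcomes):
    """Approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in outcomes:
        totals[group] += 1
        approvals[group] += int(approved)
    return {group: approvals[group] / totals[group] for group in totals}

def adverse_impact_check(outcomes, threshold=0.8):
    """Compare each group's rate with the most-favored group's rate.

    Returns {group: (impact_ratio, flagged)}; a ratio below `threshold`
    (0.8 by default, the four-fifths rule of thumb) is flagged for review.
    """
    rates = selection_rates(outcomes)
    best = max(rates.values())
    if best == 0:  # nobody was approved, so there is no relative disparity to measure
        return {group: (1.0, False) for group in rates}
    return {group: (rate / best, rate / best < threshold) for group, rate in rates.items()}

# Illustrative model decisions: group A approved 80 of 100, group B approved 48 of 100.
outcomes = ([("A", True)] * 80 + [("A", False)] * 20
            + [("B", True)] * 48 + [("B", False)] * 52)
for group, (ratio, flagged) in adverse_impact_check(outcomes).items():
    print(f"group {group}: impact ratio {ratio:.2f}, flagged={flagged}")
# group A: impact ratio 1.00, flagged=False
# group B: impact ratio 0.60, flagged=True
```

Running a check like this on every model release, rather than only after complaints arrive, is what makes the testing proactive.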

Conclusion

At Lumenova, we believe AI governance should empower businesses, not slow them down. Strong governance builds trust, enhances compliance, and ensures AI systems operate with transparency and integrity. When organizations take a proactive approach, they not only mitigate risks but also unlock new opportunities for innovation and growth.

With our Trusted AI Platform, enterprises can simplify and automate AI risk management, making governance a seamless part of their operations. Additionally, our AI Risk Advisor provides real-time insights, tailored compliance guidance, and expert-driven risk assessments, all designed to help businesses navigate the complex AI landscape with confidence.

Take control of your AI governance strategy today. Explore our AI Risk Advisor and lead with trust in AI.