Managed Service Providers have always had to juggle technical complexity, tight SLAs, and evolving client expectations. In recent years, many MSPs have turned to artificial intelligence to streamline operations, enhance security, and offer smarter, faster service delivery. That shift has helped providers stay competitive in a crowded market. But it has also introduced a new layer of responsibility.

Clients are no longer just asking what your platform can automate. They are asking whether they can trust the decisions your systems are making on their behalf. They want to know how an alert was generated, why a behavior was flagged, and whether any automated action taken by your system can be justified after the fact. In other words, they are not just evaluating your performance metrics anymore. They are evaluating your transparency, accountability, and governance.

And if you cannot explain the logic behind your AI, then the trust your clients have placed in you is suddenly at risk.

This is the moment where Explainable AI (XAI) becomes essential. Not as a technical nice-to-have, but as a core competency for any MSP that is serious about using AI responsibly, ethically, and effectively.

What Does Explainable AI Mean in the MSP Context?

Explainable AI refers to AI systems that can clearly communicate the reasoning behind their decisions. These systems are designed not only to produce outputs but also to offer human-understandable insights into how those outputs were reached.

In practical terms, that means being able to answer questions like:

- What data was used to make this decision?

- What features or behaviors contributed most to the outcome?

- Were any assumptions or weighting factors involved?

- Can the decision be audited or verified?
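One way to make those four questions answerable after the fact is to store a small record alongside every automated decision. The sketch below is illustrative only: the `DecisionRecord` schema, its field names, and the sample values are assumptions, not part of any specific platform.

```python
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone
import json


@dataclass
class DecisionRecord:
    """One automated decision, stored so it can be explained later (hypothetical schema)."""
    decision: str                   # what the system did or concluded
    data_sources: list[str]         # what data was used to make the decision
    top_features: dict[str, float]  # which features contributed most, with weights
    assumptions: list[str]          # thresholds, weighting factors, or defaults applied
    model_version: str              # which model version produced the decision
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_audit_json(self) -> str:
        """Serialize the record so it can be audited or verified later."""
        return json.dumps(asdict(self), sort_keys=True)


# Illustrative values only; none of these names come from a real product.
record = DecisionRecord(
    decision="isolate_endpoint",
    data_sources=["edr_process_events", "dns_logs"],
    top_features={"unsigned_binary_executed": 0.46, "rare_domain_lookup": 0.31},
    assumptions=["risk threshold = 0.80"],
    model_version="endpoint-risk-v3",
)
print(record.to_audit_json())
```

Each field maps to one of the questions above, so a support engineer can answer a client without re-running the model.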

For an MSP, explainability plays out across a wide range of services. It affects how you explain automated threat detection to a CISO, how you clarify flagged user behavior to a compliance officer, and how you justify AI-generated infrastructure recommendations to a CIO or board member.

In all of these situations, it is no longer enough to say that the system flagged an issue. You have to show how and why it happened.

Financial institutions are already under intense pressure to explain automated decisions to regulators and customers alike. MSPs can learn from how these sectors are embedding explainability into high-risk, high-compliance environments.

Read also: Explainable AI In Banking and Finance

Why Clients Are Pushing Harder for Explainability

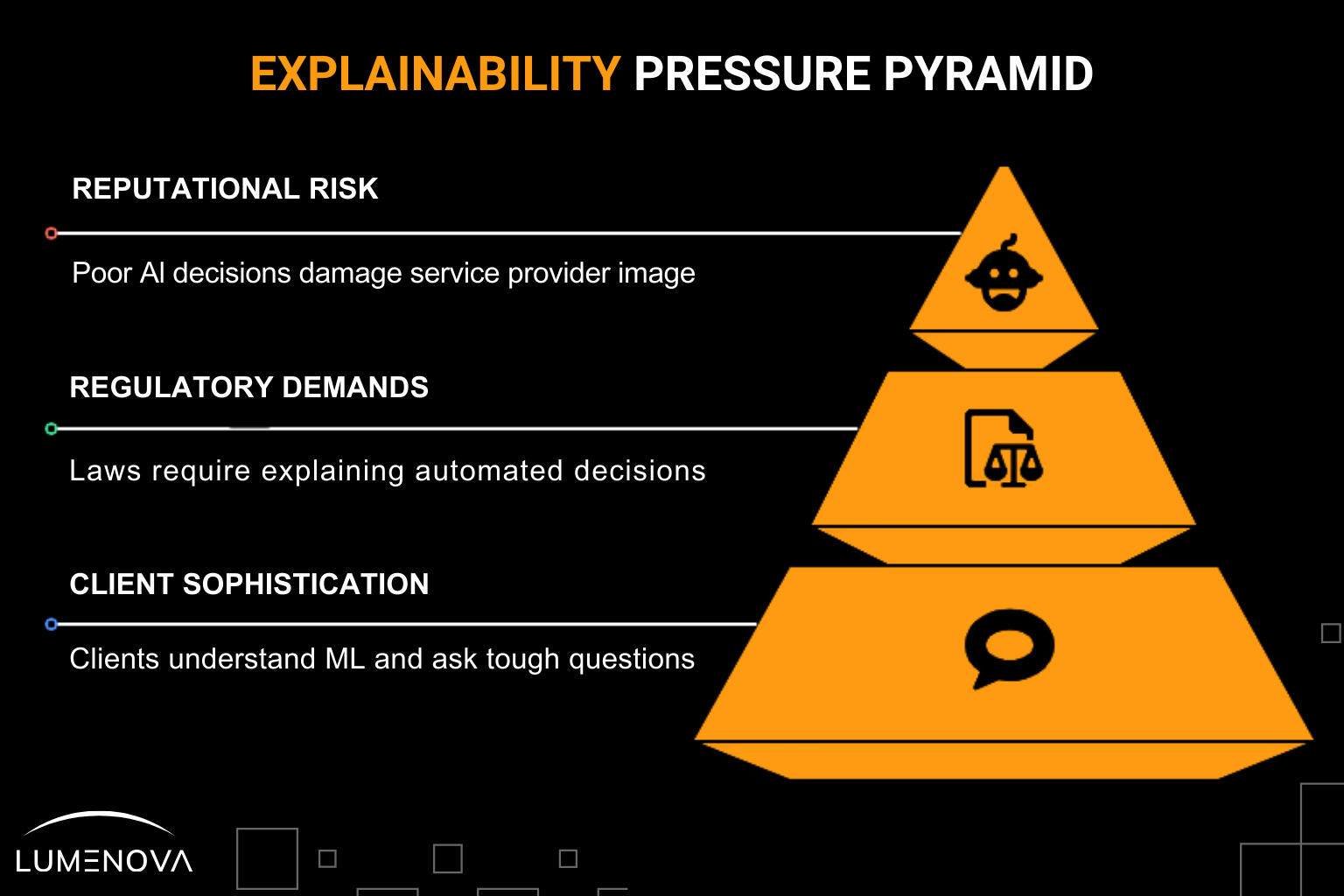

The pressure for explainability is not coming from nowhere. It is the result of multiple forces converging at once.

MSPs are now being held to the same governance standards as enterprise AI users. Understanding how trust, compliance, and transparency intersect is key to staying ahead of emerging regulations. Learn more here: Enterprise AI Governance: Ensuring Trust and Compliance

Clients Are More Technically Sophisticated

Today’s clients are not just passive recipients of managed services. Many have in-house technical teams, security leads, and data governance advisors who understand the fundamentals of machine learning. They are not afraid to ask challenging questions, and they expect you to provide clear answers.

Regulatory Requirements Are Getting Stricter

From GDPR in the European Union to HIPAA in the U.S., there is a growing expectation that companies must be able to explain how automated decisions are made, especially when those decisions impact personal data, security, or compliance obligations. As frameworks like the EU AI Act and emerging U.S. legislation begin to take shape, those expectations will become enforceable requirements.

If your AI models are not explainable, you are not just risking client trust. You may be risking legal exposure as well.

Reputation Is Tied to Responsibility

As AI becomes more visible in the products and services MSPs deliver, there is a reputational cost to systems that fail or make poor decisions. If your AI flags the wrong user, blocks legitimate traffic, or generates alerts that cannot be defended, clients may begin to question not just the tool but your judgment as a service provider.

On the other hand, providers that demonstrate transparency, accountability, and ethical foresight in their use of AI will stand out in a competitive field.

Why Performance Alone Is Not Enough Anymore

It is easy to fall into the trap of thinking that as long as the AI is accurate, that is good enough. But in the real world, results are not just judged by correctness. They are judged by how understandable, trustworthy, and defensible they are.

Let us consider an example.

Your AI-powered endpoint detection platform flags a set of machines as compromised. Automated actions isolate the devices, generate tickets, and send alerts to the client. Technically, the system worked. But the client’s security team follows up and asks a perfectly fair question: “Why were these machines flagged? What indicators of compromise were used? Can we review the logic?”

If your only answer is, “That is how the model works,” then you have already lost the conversation. The model may have been right, but the client is still frustrated and uncertain. They do not just want results. They want clarity and confidence.

This is why explainability is no longer a secondary concern. It is central to how clients evaluate your value, your reliability, and your strategic partnership potential.

The Real Business Risks of Unexplainable AI

When AI systems behave unpredictably or opaquely, it is not just a technical issue. It is a business risk. The consequences may include:

Loss of Client Trust

If clients begin to feel like they are being kept in the dark about how critical systems are making decisions, they may look elsewhere. In an industry where retention is critical and switching costs are low, trust is everything.

Compliance Violations

Unexplainable systems are difficult to audit. That becomes a serious problem if your client is subject to regulatory oversight. If a regulator asks how an automated decision was made and there is no documentation or logic available, that could trigger fines or investigations.

Slower Sales Cycles

Increasingly, procurement teams are evaluating AI transparency during the buying process. If your service descriptions, demos, or proposals do not include clarity around how your AI works, you may be adding friction to every deal.

Operational Confusion

Internally, unexplainable AI makes it harder for your own teams to support, troubleshoot, and improve systems. It limits learning, slows response times, and undermines the value of the AI itself.

The Good News: Explainability Is Not Out of Reach

Despite the challenges, explainability is not something reserved for elite research labs or billion-dollar platforms. It is practical, achievable, and increasingly built into the tools MSPs are already using.

Here are a few strategies and technologies that MSPs can leverage:

SHAP and LIME

These are two of the most popular tools for explaining machine learning models. SHAP (SHapley Additive exPlanations) assigns a contribution value to each feature in a prediction, helping you see which variables influenced a decision the most. LIME (Local Interpretable Model-Agnostic Explanations) builds local surrogate models to explain individual predictions in a more digestible way. Both can be integrated into dashboards or used behind the scenes to help teams explain decisions when needed.
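To make the idea of a per-feature contribution concrete, here is a minimal sketch that computes exact Shapley values by brute force for a toy risk score. It does not use the SHAP library, which relies on faster approximations; the `risk_score` function, its weights, and the feature names are hypothetical.

```python
from itertools import permutations


def shapley_values(score, instance, baseline):
    """Exact Shapley values: average each feature's marginal
    contribution over every possible ordering of the features."""
    names = list(instance)
    totals = {name: 0.0 for name in names}
    orderings = list(permutations(names))
    for order in orderings:
        current = dict(baseline)          # start from the baseline input
        previous = score(current)
        for name in order:
            current[name] = instance[name]  # switch one feature to its real value
            updated = score(current)
            totals[name] += updated - previous
            previous = updated
    return {name: total / len(orderings) for name, total in totals.items()}


def risk_score(x):
    """Hypothetical toy model with one interaction term."""
    score = 0.1 + 0.3 * x["failed_logins"] + 0.2 * x["off_hours"]
    score += 0.4 * x["failed_logins"] * x["new_device"]
    return score


baseline = {"failed_logins": 0, "off_hours": 0, "new_device": 0}
flagged = {"failed_logins": 1, "off_hours": 1, "new_device": 1}

contributions = shapley_values(risk_score, flagged, baseline)
for name, value in sorted(contributions.items(), key=lambda kv: -kv[1]):
    print(f"{name}: {value:+.2f}")
```

The contributions add up to the difference between the flagged score and the baseline score, which is the property that makes this kind of breakdown easy to defend in front of a client. Brute force is only practical for a handful of features because the number of orderings grows factorially.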

Transparent Reporting

Some MSPs are building client-facing explainability into their regular reports. This includes sections that walk through why alerts were triggered, what criteria were evaluated, and how risk was assessed. These explanations are tailored for non-technical stakeholders and reviewed as part of QBRs or compliance check-ins.
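As a rough illustration of how a report section like this could be generated, the sketch below turns per-feature contribution scores into a one-sentence, plain-language reason. The function name, the label mapping, and the sample numbers are all assumptions made for the example.

```python
def explain_alert(alert_name, contributions, labels, top_n=2):
    """Build a plain-language sentence from the largest feature contributions."""
    # Rank features by the size of their contribution, largest first.
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    total = sum(abs(value) for _, value in ranked) or 1.0
    reasons = [
        f"{labels.get(name, name)} ({abs(value) / total:.0%} of the score)"
        for name, value in ranked[:top_n]
    ]
    return f"{alert_name} was raised mainly because of: " + "; ".join(reasons) + "."


# Hypothetical contribution scores and client-friendly labels.
scores = {"failed_logins": 0.5, "new_device": 0.3, "off_hours": 0.1}
friendly = {
    "failed_logins": "repeated failed logins",
    "new_device": "a sign-in from an unrecognized device",
    "off_hours": "activity outside business hours",
}

summary = explain_alert("Suspicious sign-in alert", scores, friendly)
print(summary)
```

Keeping the label mapping separate from the model lets account managers adjust the wording for each audience without touching the scoring logic.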

Human Oversight Models

Explainability does not have to be fully automated. Some MSPs are layering human review into their AI workflows, especially for high-risk or client-facing outputs. This allows a human analyst to verify or validate AI-generated recommendations and add context that clients can understand and act on.
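A human review layer can be as simple as a routing rule in front of the automation. The following is a minimal sketch, assuming a hypothetical `Recommendation` type, an illustrative list of high-risk actions, and an arbitrary confidence threshold; real criteria would come from your own risk policy.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    action: str          # what the AI proposes to do
    confidence: float    # model confidence between 0 and 1
    client_facing: bool  # whether the client will see the output directly


# Illustrative list; which actions count as high risk is a policy decision.
HIGH_RISK_ACTIONS = {"isolate_endpoint", "disable_account"}


def route(rec, min_confidence=0.9):
    """Send high-risk, client-facing, or low-confidence output to an analyst."""
    if (
        rec.action in HIGH_RISK_ACTIONS
        or rec.client_facing
        or rec.confidence < min_confidence
    ):
        return "human_review"
    return "auto_apply"


print(route(Recommendation("isolate_endpoint", 0.97, False)))  # high-risk action
print(route(Recommendation("rotate_log_files", 0.95, False)))  # routine action
```

The analyst who picks up a `human_review` item can then add the context that goes into the client-facing explanation.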

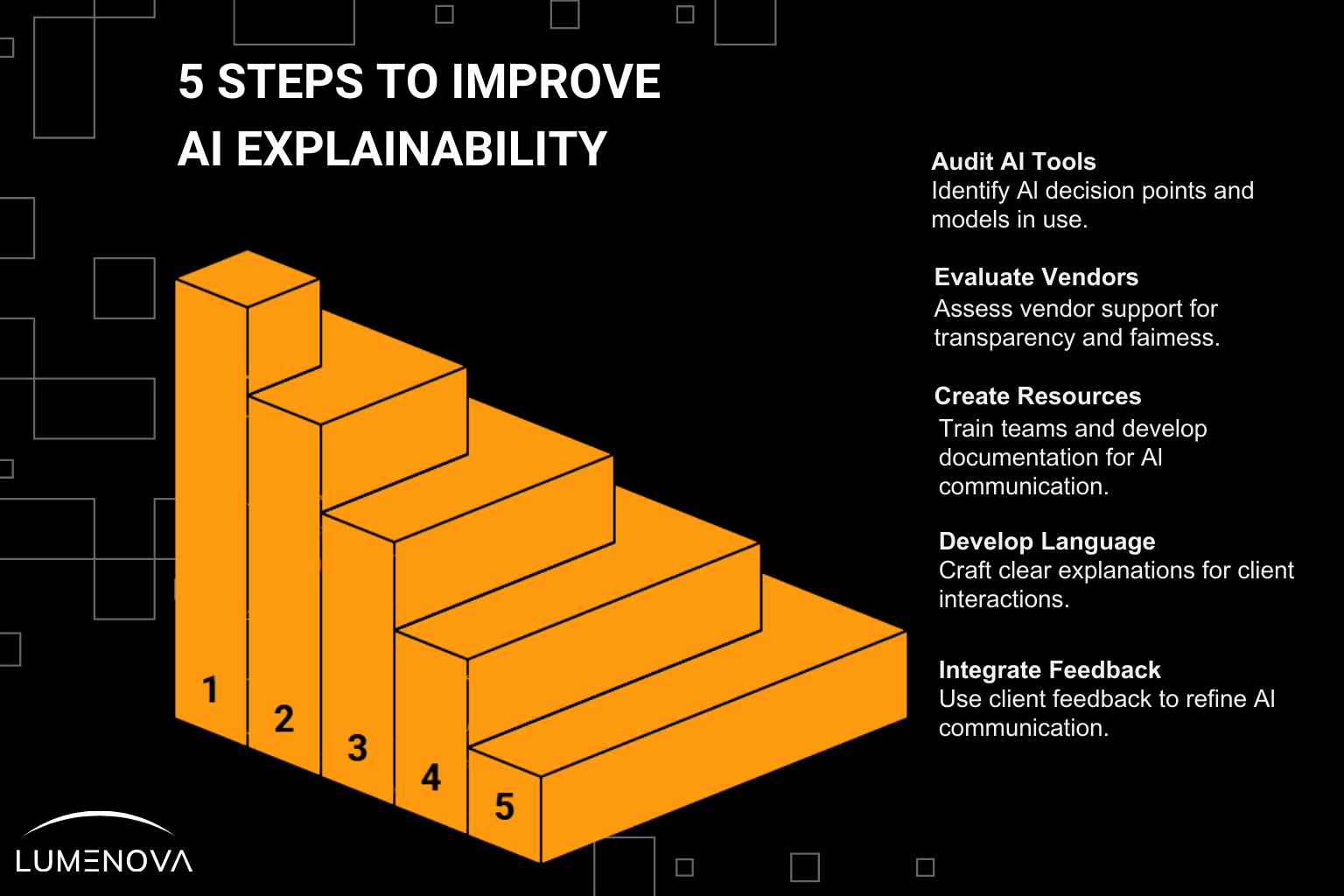

Five Steps MSPs Can Take Today to Improve AI Explainability

If your organization is ready to start improving explainability, here are five practical actions you can take immediately:

- Audit your current AI tools and services. Identify where AI is making decisions in your stack today. Understand what models are being used, what data they rely on, and how those decisions are currently explained, or not.

- Evaluate your vendor relationships. Not all tools are created equal. Ask your vendors whether they support model transparency, whether explanations can be generated, and how bias and fairness are handled in their systems.

- Create internal knowledge resources. Train your support and engineering teams on how to discuss AI logic with clients. Build documentation, internal FAQs, and quick-reference guides that can help them communicate with confidence.

- Develop client-facing explainability language. Start including short, clear explanations in client tickets, reports, and portals. Focus on clarity, not complexity. Help clients understand what happened and why, without needing a technical background.

- Integrate feedback into your governance. Ask your clients whether the explanations you are providing are helpful. Use their feedback to refine how you communicate about AI decisions and adjust your processes over time.
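The audit step in particular lends itself to a lightweight inventory. The sketch below assumes a hypothetical list of AI-driven services and flags the ones that lack a documented explanation method or documented data sources; the service names and fields are placeholders.

```python
# Hypothetical inventory of AI-driven services; every entry is a placeholder.
inventory = [
    {"service": "endpoint detection", "explanation_method": "feature attributions",
     "data_documented": True},
    {"service": "ticket triage", "explanation_method": None,
     "data_documented": True},
    {"service": "capacity forecasting", "explanation_method": "surrogate model",
     "data_documented": False},
]


def explainability_gaps(items):
    """Return each service that is missing an explanation method or data documentation."""
    gaps = {}
    for item in items:
        missing = []
        if not item["explanation_method"]:
            missing.append("no explanation method")
        if not item["data_documented"]:
            missing.append("data sources not documented")
        if missing:
            gaps[item["service"]] = missing
    return gaps


for service, problems in explainability_gaps(inventory).items():
    print(f"{service}: {', '.join(problems)}")
```

Even a spreadsheet-sized list like this gives you a starting backlog for the vendor and documentation steps.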

Read also: How to Make Sure Your AI Monitoring Solution Isn’t Holding You Back

Looking Ahead: Explainability as a Strategic Differentiator

The future of managed services is going to be shaped by intelligent automation. That is already happening. But as AI becomes more embedded in every layer of service delivery, explainability will separate responsible providers from risky ones.

MSPs that can communicate how their systems work, why they behave a certain way, and how they align with business and compliance goals will build deeper, longer-lasting client relationships. They will be trusted not just as technicians, but as strategic partners.

And perhaps most importantly, they will be prepared for the next phase of AI governance, where transparency, fairness, and accountability are no longer aspirations. They are requirements.

Final Thoughts

If you are using AI today, you are already operating in a space where trust and transparency matter. The question is not whether your system is good enough. It is whether your clients understand what it is doing and why.

Explainable AI is not a barrier to innovation. It is the foundation for responsible, scalable, and sustainable AI adoption in managed services.

At Lumenova AI, we believe that clear, defensible AI systems are not only possible but essential. And we are improving our AI risk management platform to help MSPs get there.

Reflective Questions

- Are your clients confident that your AI systems make fair and defensible decisions?

- How equipped is your support team to explain AI-driven alerts or automation?

- What would it take to make explainability a formal part of your service delivery model?

Build Trust with Explainable AI

Lumenova AI helps MSPs integrate explainable, responsible, and fully auditable AI into their service models. Whether you are automating security, scaling support, or modernizing infrastructure, we help you do it transparently, with a Responsible AI (RAI) platform that clients understand and trust.

Contact us today to learn how explainability can set you apart, reduce risk, and deepen client relationships.

Frequently Asked Questions

Explainable AI refers to artificial intelligence systems that can clearly articulate the reasoning behind their outputs. For MSPs, this is essential because clients increasingly demand transparency about how decisions are made, especially when those decisions affect security, compliance, or operations. XAI helps MSPs build trust, demonstrate accountability, and meet regulatory expectations.

MSPs can simplify explanations by using tools like SHAP and LIME, which break down model decisions into understandable components. It’s also helpful to translate technical findings into business language and incorporate visuals or plain-language summaries. The goal is to communicate clearly, not to expose every algorithmic detail.

Not necessarily. While some high-performing models are harder to interpret, recent advancements have made it possible to build powerful AI systems that are also transparent. In many cases, the small trade-off in complexity is worth the gains in trust, auditability, and regulatory compliance.

Sectors with high regulatory oversight or mission-critical decisions (such as healthcare, finance, and cybersecurity) demand explainability. For MSPs serving clients in these areas, being able to justify AI-driven alerts or automation is no longer optional. It’s essential for maintaining compliance and credibility.

Start by auditing where AI is used in your services and whether clients receive clear explanations. Work with vendors that support transparency and adopt explanation tools where possible. Train client-facing teams on how to communicate AI decisions effectively, and gather feedback regularly to improve your approach.