
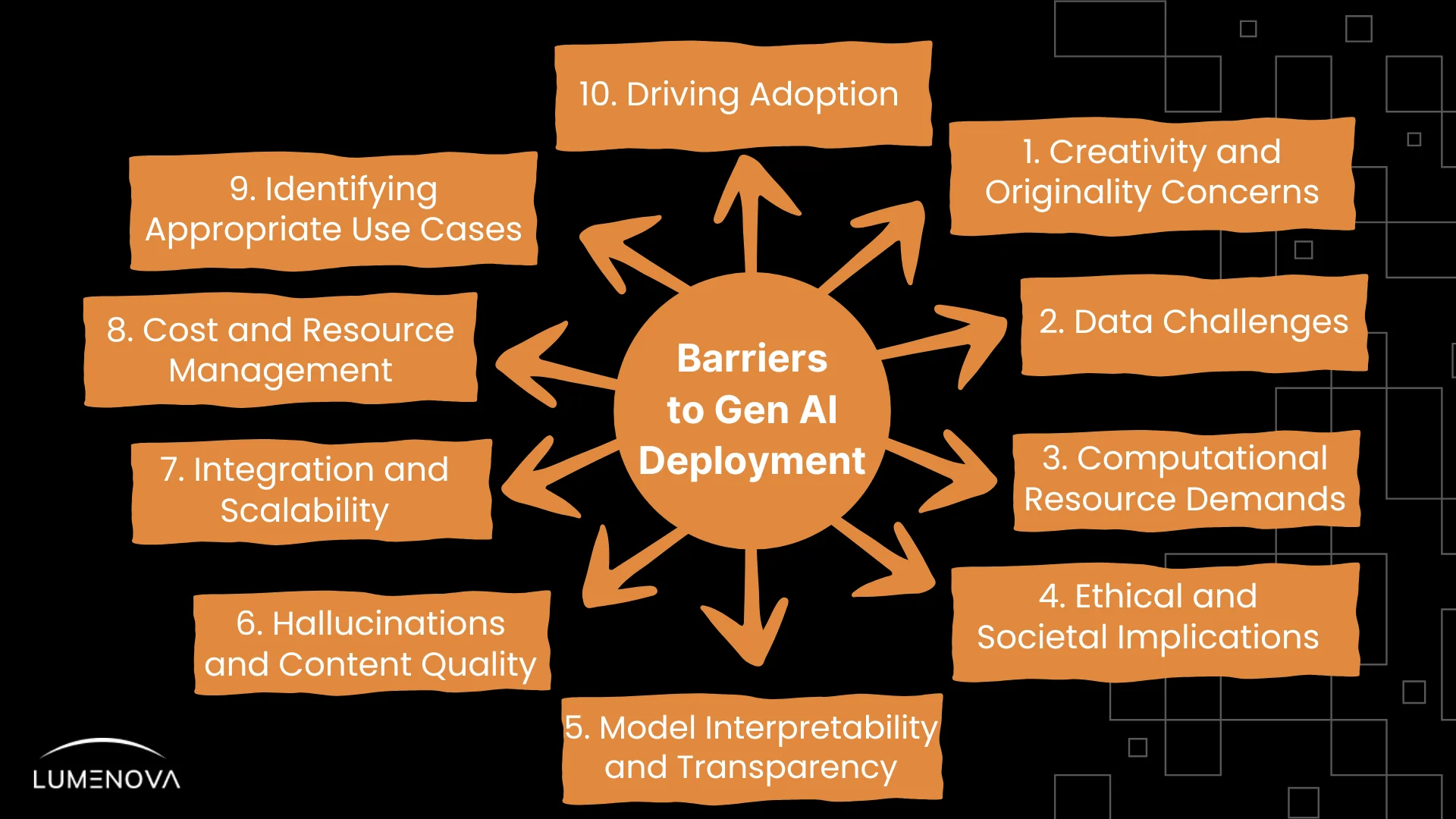

In our previous article, we tackled foundational challenges in generative AI (GenAI)—covering key areas like data privacy, bias reduction, and computational demands. We focused on strategies to ensure responsible AI use, from safeguarding data to aligning with regulatory standards.

Now, in Part II, we turn our attention to one of the most critical aspects of AI deployment: building trust and transparency. This article explores strategies for enhancing model interpretability, addressing AI hallucinations, and fostering clear communication to improve user understanding and confidence. These steps matter not only for compliance but also for making GenAI a truly reliable and ethical tool in any organization.

Model Interpretability and Transparency

A well-documented case illustrating the risks of opaque AI decisions occurred in 2019 when Apple’s credit card, issued by Goldman Sachs, faced allegations of gender bias in its credit limit algorithm. Multiple customers, including tech entrepreneur David Heinemeier Hansson, reported that women received significantly lower credit limits than men, despite having similar financial profiles. This lack of transparency led to frustration and distrust among users, sparking public debate and prompting an investigation by the New York Department of Financial Services (NYDFS) into the algorithm’s fairness.

The “black box” nature of many AI models can lead to skepticism and hesitation, as users are left in the dark about how these powerful tools arrive at their conclusions. In an era where accountability and ethical standards are paramount, the inability to understand AI decisions can erode trust and hinder adoption. As you navigate the complexities of integrating GenAI into your operations, fostering interpretability and transparency becomes essential—not just for compliance, but for building strong relationships with your stakeholders.

Strategies for Enhancing Interpretability and Transparency

Implementing Explainable AI (XAI) Techniques

XAI focuses on creating models that provide clear insights into their decision-making processes. This involves using techniques that make the operations of complex models understandable. For example, feature attribution methods like LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) help identify which specific features contributed to a model’s output.
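To make the idea behind LIME concrete, here is a simplified, from-scratch sketch. It does not use the `lime` package, whose API differs. The sketch perturbs one applicant's features and weights each perturbed sample by how close it is to the original applicant. It then fits a weighted linear surrogate, and that surrogate's coefficients serve as the local explanation. The `black_box` loan model, its standardized features, and the sampling and kernel parameters are all hypothetical.

```python
import math
import random

FEATURES = ["income", "credit_score", "debt_to_income"]


def black_box(x):
    """Hypothetical opaque loan model: approval probability from standardized features."""
    income, score, dti = x
    z = 0.8 * income + 1.5 * score - 2.0 * dti + 0.5 * income * score
    return 1.0 / (1.0 + math.exp(-z))


def solve(a, b):
    """Solve the small linear system a @ w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for i in reversed(range(n)):
        w[i] = (m[i][n] - sum(m[i][j] * w[j] for j in range(i + 1, n))) / m[i][i]
    return w


def lime_explain(model, x0, n_samples=2000, scale=0.5, kernel_width=0.75, seed=0):
    """Fit a proximity-weighted linear surrogate around x0; return (intercept, weights)."""
    rng = random.Random(seed)
    d = len(x0)
    ata = [[0.0] * (d + 1) for _ in range(d + 1)]  # accumulates X^T W X
    aty = [0.0] * (d + 1)                          # accumulates X^T W y
    for _ in range(n_samples):
        x = [xi + rng.gauss(0.0, scale) for xi in x0]           # perturb the instance
        dist2 = sum((xi - x0i) ** 2 for xi, x0i in zip(x, x0))
        weight = math.exp(-dist2 / kernel_width ** 2)            # closer samples count more
        row = [1.0] + [xi - x0i for xi, x0i in zip(x, x0)]      # intercept + centered features
        y = model(x)
        for i in range(d + 1):
            aty[i] += weight * row[i] * y
            for j in range(d + 1):
                ata[i][j] += weight * row[i] * row[j]
    coef = solve(ata, aty)
    return coef[0], coef[1:]


applicant = [0.2, -0.5, 1.0]  # hypothetical standardized applicant
intercept, weights = lime_explain(black_box, applicant)
for name, w in zip(FEATURES, weights):
    print(f"{name:>15}: {w:+.3f}")
```

The signed weights read as "near this applicant, raising this feature moves the approval probability up (+) or down (-) by roughly this much per unit". They describe only this one applicant, not the model's global behavior.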

- LIME: Imagine being a customer applying for a loan at a large bank. In some banks, AI models now help assess loan applications to streamline decision-making, but the process can feel cold and impersonal if you’re left wondering why you were approved or denied. To address this, some banks use LIME to “open up” the AI’s decision process. When the AI reviews your application, LIME estimates which factors, such as your income, credit score, or debt-to-income ratio, had the biggest influence on that specific decision. Loan officers then have a clear, human-friendly explanation to share with you, making the decision feel more transparent and grounded in understandable terms. Customers who’ve experienced this approach report feeling more respected and trusting of the bank, knowing that clear, relevant factors guided the outcome.

- SHAP: In hospitals, SHAP is used to make AI-based medical predictions more understandable for doctors and patients alike. Consider a scenario where an AI system predicts a high risk for a condition, such as diabetes, based on various health metrics. SHAP can reveal to the doctor which specific factors—such as family history, recent blood test results, or lifestyle habits—most influenced this prediction. This allows the doctor to discuss the AI’s assessment with patients in a clear and meaningful way. Patients have shared that this kind of transparency reduces anxiety and builds trust in the healthcare process, as they can see exactly how their personal health information contributed to the prediction. Doctors, too, report feeling more confident in sharing AI-driven insights, knowing they can explain these assessments in plain language.
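SHAP builds on Shapley values from cooperative game theory. A feature's attribution is its average marginal contribution across all subsets of the other features. The sketch below computes exact Shapley values by brute force for a hypothetical three-feature risk score. Features that are "absent" from a subset are filled in from a reference baseline. The sketch illustrates the quantity SHAP estimates; it is not the `shap` library's API. The model, patient, and baseline values are invented for illustration and are not clinical guidance. Brute force is only practical for a handful of features, which is why SHAP provides faster estimators.

```python
from itertools import combinations
from math import factorial

FEATURES = ["family_history", "hba1c", "bmi"]


def risk_model(x):
    """Hypothetical diabetes risk score (illustrative only, not a clinical model)."""
    family_history, hba1c, bmi = x
    return (0.3 * family_history + 0.5 * (hba1c - 5.5) + 0.02 * (bmi - 25)
            + 0.1 * family_history * (hba1c - 5.5))


def shapley_values(model, x, baseline):
    """Exact Shapley values; features outside a coalition take their baseline value."""
    n = len(x)

    def value(coalition):
        return model([x[i] if i in coalition else baseline[i] for i in range(n)])

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for k in range(n):  # coalition sizes 0 .. n-1
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


patient = [1, 6.8, 31]   # hypothetical patient
baseline = [0, 5.5, 25]  # hypothetical reference profile
phi = shapley_values(risk_model, patient, baseline)
for name, p in zip(FEATURES, phi):
    print(f"{name:>15}: {p:+.3f}")
# Efficiency property: attributions sum to model(patient) - model(baseline).
print(sum(phi), risk_model(patient) - risk_model(baseline))
```

Because the toy model includes a family-history × HbA1c interaction, the Shapley values split that interaction term equally between those two features. The three attributions add up to the gap between the patient's score and the baseline score, which is what lets a doctor say how much each factor contributed.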

Clear Communication About AI Use

Open and honest communication about how AI is used within your organization is essential. This includes providing context around the data being utilized, the algorithms being employed, and the decision-making processes at play.

Consider creating user-friendly, easy-to-interpret documentation that outlines how your AI systems function (and for what purpose) and the safeguards in place to ensure ethical use. Engaging with your users through training sessions or informational workshops can further demystify the technology and promote understanding.

Compliance Preparedness Through Transparency

Clear communication about how your organization uses AI also helps you stay prepared for compliance with regulatory standards. By thoroughly documenting the purpose, processes, and safeguards of your AI systems, you create a record that’s easy to reference during audits or regulatory reviews. This proactive approach not only builds trust with stakeholders but also reduces compliance risks, showing that your organization is committed to using AI responsibly and meeting industry standards.
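One lightweight way to keep that record consistent is to capture each system's documentation as structured data. The same record can then be rendered for users and exported for auditors. The sketch below is a minimal example. The `AISystemRecord` fields and sample values are hypothetical, and they would need to map to whatever your regulators and internal policies actually require.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class AISystemRecord:
    """Hypothetical documentation record for one AI system."""
    name: str
    purpose: str
    data_sources: list[str]
    model_type: str
    safeguards: list[str] = field(default_factory=list)
    human_oversight: str = "None documented"
    last_reviewed: str = ""

    def to_plain_text(self) -> str:
        """Render a user-facing summary."""
        lines = [
            f"System: {self.name}",
            f"Purpose: {self.purpose}",
            f"Model type: {self.model_type}",
            "Data sources: " + ", ".join(self.data_sources),
            "Safeguards:",
            *[f"  - {s}" for s in self.safeguards],
            f"Human oversight: {self.human_oversight}",
            f"Last reviewed: {self.last_reviewed or 'not yet reviewed'}",
        ]
        return "\n".join(lines)

    def to_json(self) -> str:
        """Export a machine-readable copy for audit files."""
        return json.dumps(asdict(self), indent=2)


record = AISystemRecord(
    name="Loan pre-screening assistant",
    purpose="Rank incoming loan applications for manual review",
    data_sources=["Application form fields", "Credit bureau score"],
    model_type="Gradient-boosted classifier",
    safeguards=["Quarterly bias audit", "Per-decision feature attributions"],
    human_oversight="A loan officer makes every final decision",
    last_reviewed="2024-01-15",
)
print(record.to_plain_text())
```

Keeping one source of truth for both the user-facing summary and the audit export helps prevent the two from drifting apart.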

Hallucinations and Content Quality

GenAI is a remarkable tool that can create compelling narratives, stunning visuals, and innovative solutions, but there’s a catch: it can also produce convincing outputs that are completely inaccurate or nonsensical. This phenomenon, known as hallucination, occurs when AI generates information that appears true but is in fact false.

Lumenova had a recent experience that perfectly illustrates this issue. One of our employees participated in an engaging GenAI training workshop, where the conversation took an unexpected turn. During a lively discussion, a GenAI evangelist shared an anecdote about a heated debate he had on a social media platform regarding a legal matter.

The evangelist recounted how a woman claimed to have found a specific law that supported her argument. Intrigued, he checked the citation and discovered that no such law existed. Curious about her source, he asked her directly, “Did you use ChatGPT for that?” To his surprise, she replied that she had. This moment sparked a deep discussion about the accuracy of AI-generated information and how easily it can lead to confusion.

This incident underscores how readily GenAI can hallucinate. Relying on AI for crucial information without verifying its outputs can lead to misunderstandings, poor decisions, and damage to your organization’s reputation.

Understanding the nature of these hallucinated outputs, and putting effective strategies in place to manage them, is therefore essential for maintaining high content quality and ensuring reliable outcomes.

Understanding Hallucinations

Hallucinations take several forms, including:

- Factual Inaccuracies: Statements like “All electric vehicles (EVs) are zero-emission” sound authoritative but conflate zero tailpipe emissions with zero emissions overall. They overlook the emissions that can come from generating the electricity used for charging, misleading users about the full environmental impact of EVs.

- Confabulation: The AI invents plausible-sounding details, such as claiming, “XYZ Brand just released a toothpaste that reverses cavities.” The claim may sound exciting, but no such product exists, and consumers could easily be misled into searching for it.

- Fabricated Sources: The AI cites sources that don’t exist, which can mislead users who rely on trusted references. For example, it references “The 2022 Global Climate Report by XYZ” to support a product’s environmental claims, even though no such report exists.

Understanding Hallucinations vs. Ambiguous Outputs

Generative AI can sometimes produce outputs that seem misleading or confusing, but not all of these cases are true hallucinations. There’s a difference between a genuine hallucination—where the AI provides made-up or incorrect information—and an ambiguous response due to a broad or unclear prompt.

For instance, imagine a user asks, “What are the benefits of meditation?” The AI might respond with a general overview, mentioning improved focus, reduced stress, and enhanced emotional well-being. This is not a hallucination; it’s a standard response to a broad question. However, if the AI claimed that a “2022 Global Study on Meditation by Harvard University” showed specific results—without any such study existing—that would be a true hallucination.

Organizations can minimize ambiguous responses by guiding users to create precise prompts, while also implementing checks to identify and correct hallucinations. Clear prompting encourages more accurate answers, and systematic review processes help catch any invented details that could erode trust in the AI system.
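As one example of such a check, the sketch below flags cited titles that do not appear in a list of sources your team has already verified. A reviewer can then confirm or remove them before the content is published. The sketch rests on two hypothetical assumptions: the model has been instructed to put any cited title in double quotes, and you maintain a `VERIFIED_SOURCES` catalog. Real pipelines would need fuzzier matching and lookups against authoritative databases.

```python
import re

# Hypothetical catalog of sources your team has already verified (lowercased titles).
VERIFIED_SOURCES = {"company wellness handbook"}


def extract_quoted_titles(text: str) -> list[str]:
    """Return every double-quoted span (straight or curly quotes)."""
    return re.findall(r'["\u201c]([^"\u201d]+)["\u201d]', text)


def flag_unverified_citations(text: str, verified: set[str]) -> list[str]:
    """List quoted titles that are not in the verified catalog (case-insensitive)."""
    return [t for t in extract_quoted_titles(text) if t.strip().lower() not in verified]


answer = ('According to the "Company Wellness Handbook", regular breaks help. '
          'A "2022 Global Study on Meditation by Harvard University" reports larger gains.')
print(flag_unverified_citations(answer, VERIFIED_SOURCES))
```

A flag here does not prove a source is fabricated, only that no one has verified it yet. That is why the output routes to a reviewer instead of silently deleting text.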

User Insights: Trust and Concerns Around AI Hallucinations

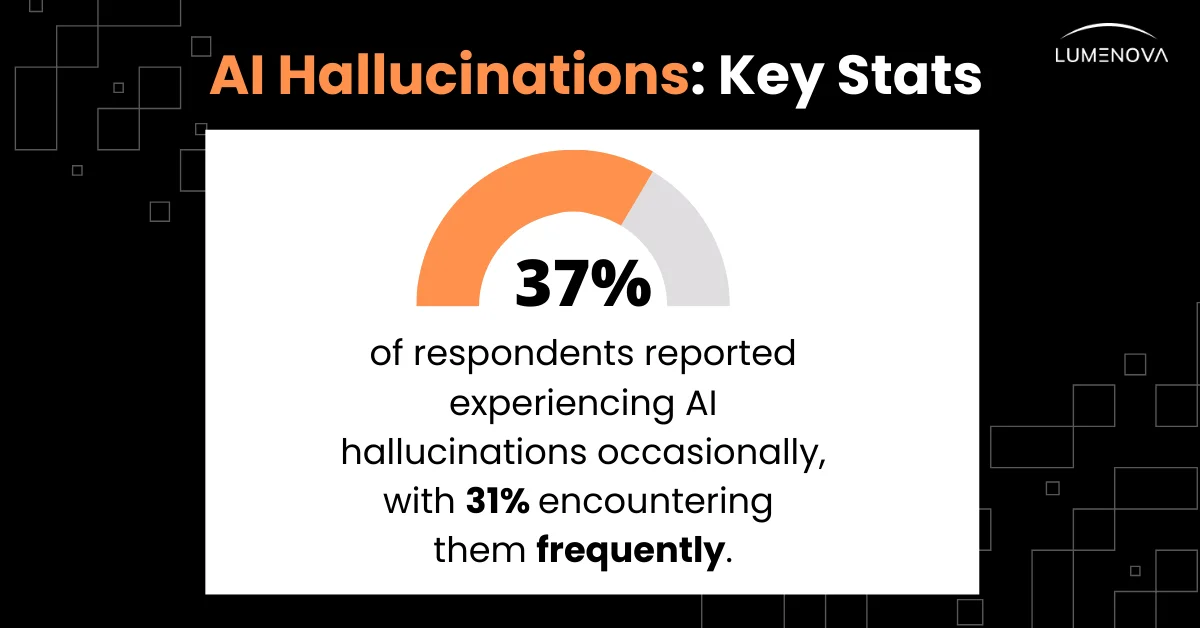

To delve deeper into user sentiments around AI hallucinations, we conducted a survey involving 399 participants from various backgrounds, including Machine Learning, Artificial Intelligence, Deep Learning, Computer Vision, Robotics, DataOps, and GenAI.

Key Findings:

Prevalence of Hallucinations

The survey indicates that 37% of respondents occasionally encounter AI hallucinations and another 31% encounter them frequently. Together, more than two-thirds (68%) run into them at least occasionally, revealing a significant reliability concern. Frequent inaccuracies undermine user trust and can hinder successful AI integration, so organizations must prioritize detecting and resolving them to foster confidence in AI technologies.

Why Do Hallucinations Happen in GenAI?

Hallucinations stem from several underlying causes, including:

- Limitations in Training Data: If the data used to train the AI is incomplete or outdated, the model may provide inaccurate responses. Gaps in knowledge can lead to faulty conclusions.

- Pattern Recognition Errors: AI generates content by recognizing patterns in data, but sometimes it may incorrectly link unrelated concepts, leading to erroneous correlations and inaccuracies.

- Lack of True Understanding: Unlike humans, AI lacks true comprehension of content. It generates responses based on learned patterns rather than real knowledge, leading to plausible-sounding but incorrect information.

- Overfitting: When AI focuses too much on specific examples in its training data, it struggles to apply those patterns correctly to new situations, leading to generalization errors.

- Data Drift: Over time, the data a model encounters in production can diverge from the data it was trained on, a shift known as data drift. The model keeps answering from patterns and information that are now outdated. This can produce inaccuracies or hallucinations, because its responses no longer match current data trends or contexts.

- Model Drift (Concept Drift): Model drift, also known as concept drift, happens when the relationship between inputs and outputs changes. Even if the input data looks similar, the underlying patterns or behaviors the model is designed to predict may evolve over time. For example, a model that predicts product demand based on seasonal trends may become inaccurate if consumer preferences shift unexpectedly.

- Unknown Causes: Sometimes, even with advanced insights and monitoring, the precise cause of certain hallucinations remains elusive.
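The data drift described above can at least be watched for numerically. One widely used statistic is the Population Stability Index (PSI). It compares how a feature is distributed in the training data versus recent production inputs by summing (a_i - e_i) * ln(a_i / e_i) over bins, where e_i and a_i are the shares of training and production values in bin i. The sketch below is a minimal pure-Python version. The bin count, the small floor that avoids log(0), and the 0.2 alert threshold are assumptions for illustration. PSI only detects shifts in inputs; catching concept drift also requires comparing predictions with real outcomes over time.

```python
import math
import random


def psi(expected, actual, bins=10, floor=1e-6):
    """Population Stability Index of `actual` relative to `expected` (equal-width bins)."""
    lo, hi = min(expected), max(expected)
    edges = [lo + (hi - lo) * k / bins for k in range(1, bins)]

    def shares(values):
        counts = [0] * bins
        for v in values:
            counts[sum(v > edge for edge in edges)] += 1  # out-of-range values land in end bins
        return [max(c / len(values), floor) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


rng = random.Random(42)
training = [rng.gauss(0.0, 1.0) for _ in range(5000)]
stable = [rng.gauss(0.0, 1.0) for _ in range(5000)]
shifted = [rng.gauss(0.8, 1.0) for _ in range(5000)]  # simulated drift

ALERT_THRESHOLD = 0.2  # assumed threshold for illustration
for label, batch in [("stable", stable), ("shifted", shifted)]:
    score = psi(training, batch)
    print(f"{label}: PSI={score:.3f}", "ALERT" if score > ALERT_THRESHOLD else "ok")
```

Equal-width bins keep the sketch short. Quantile bins derived from the training data are a common alternative when a feature is heavily skewed.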

Addressing Hallucinations Across Generative AI Applications

The challenges posed by hallucinations can impact various sectors, from marketing and customer service to content creation and data analysis. Regardless of the industry, the consequences can be significant:

- Marketing: Misleading claims or fabricated testimonials can undermine campaigns and lead to customer dissatisfaction or regulatory scrutiny.

- Customer Service: Inaccurate information provided by AI-powered chatbots can erode trust, especially when customers depend on accurate details for their inquiries.

- Content Creation: Whether drafting articles, generating reports, or writing product descriptions, GenAI can introduce fabricated or factually incorrect details. These errors hurt engagement and brand reputation, and they skew any data analysis built on that content.

Creativity and Originality Concerns

The question of creativity in AI-generated content is an ongoing debate, especially in fields where originality is highly valued, like art, design, and writing. Concerns arise about whether AI-generated content lacks the authenticity and human touch that audiences expect.

Key Concerns

Intellectual Property and Ownership

In creative fields, the use of AI raises critical questions around intellectual property (IP). For instance, when AI generates content—whether it’s art, text, or music—who legally owns the rights to this work? Moreover, how does this align with existing IP laws, which may not fully account for AI-generated creations? This is especially relevant in industries where originality is essential, and ownership of creative output is directly tied to brand value and market differentiation.

The issue also affects those whose work ends up in AI training datasets. Many AI models are trained on large, often publicly available datasets that include content created by individuals, companies, and organizations. This raises ethical questions: should creators have a say in how their work is used for training, and should they be compensated? For example, if an AI model trained on a photographer’s portfolio generates images in a similar style, does the photographer deserve acknowledgment or even a share of ownership?

Navigating these IP and ownership complexities requires a balanced approach. Organizations may consider setting clear guidelines on the ethical use of training data and maintaining transparency about how AI-generated content will be owned and utilized. In some cases, involving creators or offering opt-in options for data usage can build trust and support broader collaboration.

Perception of Generic Output

GenAI outputs can feel formulaic and lack authenticity. This can be a concern in industries where unique perspectives and creativity are essential.

Strategies for Navigating Creativity and Originality Concerns

Combining AI with Human Input

Use GenAI as a tool to support human creativity. For example, in content creation, AI can be used to draft ideas or create variations, while humans bring refinement and originality, ensuring the final product aligns with the brand’s voice and vision.

Establishing Guidelines for Originality

Develop clear guidelines that define the role of AI in content creation. For example, you might specify where AI may be used (such as brainstorming or first drafts) while requiring human creators to contribute distinct, original elements to the final work.

Transparency with Audiences

Inform audiences when content is partially or wholly AI-generated. For instance, in media or design, openly stating that AI was used in a project can build trust while making clear that human creativity and oversight remain key components.

Regular Review and Feedback

Regularly review AI-generated content to ensure it meets originality standards and aligns with audience expectations. Feedback loops involving human reviewers can help refine AI outputs, maintaining creativity without compromising quality.

Strategies for Enhancing Content Quality

To mitigate the risk of hallucinations and improve the quality of AI-generated content, consider implementing the following strategies:

Diverse Training Data

Ensure that your AI model is trained on a diverse and representative dataset. This means including a wide range of scenarios in your training data to help the model understand nuances and produce more accurate outputs.

Pre-Processing Filters

Implement pre-processing filters to clean and validate the data before it is fed into the AI model. These filters can remove low-quality content, irrelevant information, or potential sources of bias, ensuring that only high-quality data contributes to the training process.
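Here is a minimal sketch of such filters for text training records. It normalizes whitespace, drops exact duplicates, and removes records that are too short or mostly non-alphabetic. The thresholds (`min_words`, `min_alpha_ratio`) are hypothetical starting points. Production pipelines typically layer language detection, near-duplicate detection, and PII or toxicity screening on top.

```python
import re


def clean_records(records, min_words=5, min_alpha_ratio=0.6):
    """Return records that pass basic quality filters, in original order."""
    seen = set()
    kept = []
    for text in records:
        normalized = re.sub(r"\s+", " ", text).strip()
        key = normalized.lower()
        if key in seen:  # exact duplicate (case-insensitive)
            continue
        seen.add(key)
        if len(normalized.split()) < min_words:  # too short to be informative
            continue
        non_space = [ch for ch in normalized if not ch.isspace()]
        alpha_ratio = sum(ch.isalpha() for ch in non_space) / len(non_space)
        if alpha_ratio < min_alpha_ratio:  # mostly symbols, markup, or numbers
            continue
        kept.append(normalized)
    return kept


raw = [
    "Refunds are available within 30 days of purchase.",
    "refunds are   available within 30 days of purchase.",  # duplicate after normalizing
    "Click here!",                                          # too short
    "$$$ ### 1234 5678 9999 %%%% @@@@",                     # mostly non-alphabetic
    "Standard shipping usually takes five to seven business days.",
]
print(clean_records(raw))
```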

Reinforcement Learning from Human Feedback (RLHF)

RLHF techniques improve AI accuracy by aligning models with your organization’s specific needs and standards. In this approach, human feedback on AI outputs is used to refine the model’s responses. This improves accuracy and supports nuanced decision-making, which is essential in fields like customer service, legal advisories, and medical recommendations.
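The reward-modeling step at the heart of RLHF can be illustrated with a pairwise (Bradley-Terry style) objective. Each training example is a pair of responses where a reviewer preferred one. The reward model learns weights so that the preferred response scores higher, by minimizing -log(sigmoid(r(preferred) - r(rejected))). The sketch below uses a tiny linear reward over three hand-crafted features. The features, preference pairs, and hyperparameters are hypothetical, and real reward models are usually neural networks over the full text. The sketch also omits the later reinforcement-learning step that optimizes the AI against this reward (for example with PPO).

```python
import math

# Hypothetical response features: [verified_citations, unverified_claims, on_topic]
FEATURES = ["verified_citations", "unverified_claims", "on_topic"]

# Each pair is (features of the response a reviewer preferred, features of the rejected one).
PREFERENCES = [
    ([2, 0, 1], [0, 2, 1]),  # grounded answer beat one with fabricated claims
    ([1, 0, 1], [1, 1, 1]),
    ([1, 0, 1], [1, 0, 0]),  # on-topic answer beat an off-topic one
    ([0, 0, 1], [0, 1, 1]),
]


def reward(w, features):
    """Linear reward: higher means more aligned with reviewer preferences."""
    return sum(wi * fi for wi, fi in zip(w, features))


def train_reward_model(pairs, n_features, lr=0.1, epochs=200, l2=0.01):
    """Minimize -log(sigmoid(r(preferred) - r(rejected))) plus L2 penalty by SGD."""
    w = [0.0] * n_features
    for _ in range(epochs):
        for preferred, rejected in pairs:
            diff = [p - r for p, r in zip(preferred, rejected)]
            margin = reward(w, diff)  # equals r(preferred) - r(rejected) for a linear reward
            # The loss gradient w.r.t. w is -(1 - sigmoid(margin)) * diff.
            scale = 1.0 - 1.0 / (1.0 + math.exp(-margin))
            w = [wi + lr * (scale * di - l2 * wi) for wi, di in zip(w, diff)]
    return w


weights = train_reward_model(PREFERENCES, len(FEATURES))
for name, wi in zip(FEATURES, weights):
    print(f"{name:>20}: {wi:+.3f}")
```

The learned weights encode the reviewers' standards, here a preference for verified citations and on-topic answers and against unverified claims. That encoding is what lets the reward model score new outputs without a human rating each one.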

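Explore RLHF methods (the first three below), along with complementary techniques that are not RLHF but also target accuracy (the last three):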

- Reward Modeling: Human preference judgments, such as rankings of alternative responses, are used to train a reward model that then guides the AI toward prioritized, standards-aligned behaviors.

- Iterative Training Loops: AI models undergo retraining with ongoing feedback, adapting continuously to evolving scenarios and requirements.

- Domain Expert Fine-Tuning: Input from industry experts improves contextual accuracy, enabling the AI to deliver sector-relevant responses.

- Mixture of Experts (MoE) Architectures: Uses specialized sub-networks (“experts”) with a learned router that activates only the most relevant ones for each input. This improves efficiency and allows larger, potentially more accurate models at a similar compute cost.

- Retrieval-Augmented Generation (RAG): Pairs the model with a retrieval system that pulls relevant, up-to-date external information into the prompt at query time, grounding responses and reducing errors and outdated answers.

- Debate Techniques: Structures AI reasoning as a debate in which multiple model instances (or roles) argue for competing answers and critique each other before a final answer is chosen. This helps surface errors and produces more balanced, accurate outputs.
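Retrieval augmentation, listed above, is the most straightforward of these techniques to sketch. The example below ranks a small in-memory document set against a question using bag-of-words cosine similarity. It then builds a prompt instructing the model to answer only from the retrieved sources and to cite them. The documents and instructions are hypothetical. Production systems typically use embedding models and a vector index, and the call to an actual language model is omitted here.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base: document ID -> text.
DOCS = {
    "refund-policy": "A refund can be requested within 30 days of purchase with a receipt.",
    "shipping": "Standard shipping takes 5 to 7 business days.",
    "warranty": "Electronics carry a one year limited warranty.",
}


def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, docs, k=2):
    """Return the k (doc_id, text) pairs most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(docs.items(), key=lambda item: cosine(q, Counter(tokenize(item[1]))), reverse=True)
    return ranked[:k]


def build_prompt(query, docs, k=2):
    """Ground the model in retrieved text and ask it to cite sources or admit gaps."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in retrieve(query, docs, k))
    return (
        "Answer using only the sources below and cite their IDs. "
        "If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )


print(build_prompt("How many days do I have to request a refund?", DOCS))
```

The instruction to admit when the sources lack an answer matters as much as the retrieval itself. It gives the model an alternative to inventing one.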

Human-in-the-Loop Review

Incorporating a human-in-the-loop (HITL) approach can significantly enhance content quality. Involving human reviewers in the process allows for catching errors, identifying hallucinations, and providing valuable context that AI might miss (e.g., understanding regional slang in customer messages or recognizing sensitive topics that require careful handling).
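A common way to put this into practice is to route only some outputs to reviewers. The sketch below sends a draft to a human queue when a confidence score falls below a threshold or the text touches a sensitive topic. The confidence score, keyword list, and 0.8 threshold are hypothetical. In practice, the score might come from the model itself or from a separate verification step.

```python
from dataclasses import dataclass

# Hypothetical topics that always require a human reviewer.
SENSITIVE_TERMS = {"diagnosis", "lawsuit", "medication", "refund dispute"}
CONFIDENCE_THRESHOLD = 0.8  # assumed cut-off for illustration


@dataclass
class Draft:
    text: str
    confidence: float  # hypothetical 0-1 score from the model or a verifier


def route(draft: Draft) -> str:
    """Return 'human_review' or 'auto_send' for a generated draft."""
    lowered = draft.text.lower()
    if any(term in lowered for term in SENSITIVE_TERMS):
        return "human_review"
    if draft.confidence < CONFIDENCE_THRESHOLD:
        return "human_review"
    return "auto_send"


drafts = [
    Draft("Your order ships in 5 to 7 business days.", 0.95),
    Draft("Based on your symptoms, the likely diagnosis is flu.", 0.97),
    Draft("Our policy may allow an exception in your case.", 0.55),
]
for d in drafts:
    print(route(d), "|", d.text)
```

Sensitive topics are checked first, so even a highly confident draft on a medical or legal matter still reaches a person.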

Continuous Monitoring

Establishing a system for continuous monitoring of AI outputs allows you to identify and address issues as they arise. Using frameworks like the NIST AI Risk Management Framework (AI RMF) as a guide, you can regularly review AI-generated content for accuracy and relevance and make timely adjustments to the model or training data.
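As a minimal sketch of the monitoring side, the class below tracks the share of reviewed outputs flagged as hallucinations over a rolling window. It raises an alert when that share exceeds a threshold. The window size, threshold, and minimum sample count are hypothetical, and nothing here is prescribed by the NIST AI RMF. It simply shows one way to turn reviewer findings into a signal that triggers adjustments.

```python
from collections import deque


class HallucinationRateMonitor:
    """Rolling-window rate of reviewed outputs flagged as hallucinations."""

    def __init__(self, window=200, threshold=0.05, min_samples=50):
        self.outcomes = deque(maxlen=window)  # True = flagged as a hallucination
        self.threshold = threshold
        self.min_samples = min_samples

    def record(self, flagged: bool) -> None:
        self.outcomes.append(flagged)

    @property
    def rate(self) -> float:
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else 0.0

    def should_alert(self) -> bool:
        """Alert only once enough reviews exist to make the rate meaningful."""
        return len(self.outcomes) >= self.min_samples and self.rate > self.threshold


monitor = HallucinationRateMonitor()
for i in range(100):
    monitor.record(i % 50 == 0)  # 2 flags in 100 reviews
print(f"{monitor.rate:.1%}", monitor.should_alert())
for i in range(100):
    monitor.record(i % 10 == 0)  # 10 more flags in the next 100 reviews
print(f"{monitor.rate:.1%}", monitor.should_alert())
```

The `min_samples` guard avoids alerting on noise when only a few outputs have been reviewed. The bounded window lets the rate recover once a fix takes effect.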

Conclusion

GenAI could revolutionize workflows, drive innovation, and deliver insights like never before—but achieving this impact demands strategic planning and responsibility at every step. Through a focus on transparency, clear communication, and rigorous oversight, organizations can foster user trust and empower AI to become a dependable asset. As we’ve explored, ensuring the integrity of AI systems involves much more than technical prowess; it requires a commitment to ethical standards, user understanding, and organizational readiness.

In the final installment of this series, Part III, we’ll tackle one of the most pressing aspects of AI implementation: Scalability and ROI. As GenAI deployments grow, controlling costs and maximizing returns through effective budgeting and resource allocation will be essential. Stay tuned for actionable insights on cost control, cloud optimization, and sustainable scaling practices that can secure AI’s place as a high-impact, cost-effective solution for your organization.

Frequently Asked Questions

Transparency in generative AI builds trust by making AI decisions understandable and reducing risks of bias, misinformation, and unethical impacts. It helps businesses comply with AI regulations and improves user confidence in AI-generated content.

Organizations can enhance AI interpretability by using Explainable AI (XAI) techniques like LIME and SHAP, implementing clear documentation, and providing transparency reports on how AI models generate outputs.

AI hallucinations occur when generative AI models produce false or misleading information that appears credible. Causes include incomplete training data, overfitting, and errors in pattern recognition, leading to factual inaccuracies.

Businesses can minimize AI hallucinations by using high-quality, diverse training data, applying retrieval-augmented generation (RAG) to ground responses in current, retrieved information, and incorporating human-in-the-loop (HITL) review processes.

Concerns include intellectual property (IP) rights, content authenticity, and the perception that AI-generated outputs are generic. Organizations should balance AI automation with human creativity, establish originality guidelines, and ensure transparency in AI-assisted content creation.