Amid the ongoing proliferation of AI, 2023 has ushered in an era often likened to an AI gold rush, with businesses worldwide engaged in an unofficial and unprecedented race to embrace Generative AI technology.

A closer look, however, reveals a more nuanced reality. Generative AI may appear simple to adopt at first, but a deeper examination shows that it is not.

Accelerated integration of Generative AI without comprehensive safeguards presents a paradox: the rapid adoption meant to create a competitive advantage instead exposes businesses to vulnerabilities that undermine that advantage and ultimately jeopardize their original objectives.

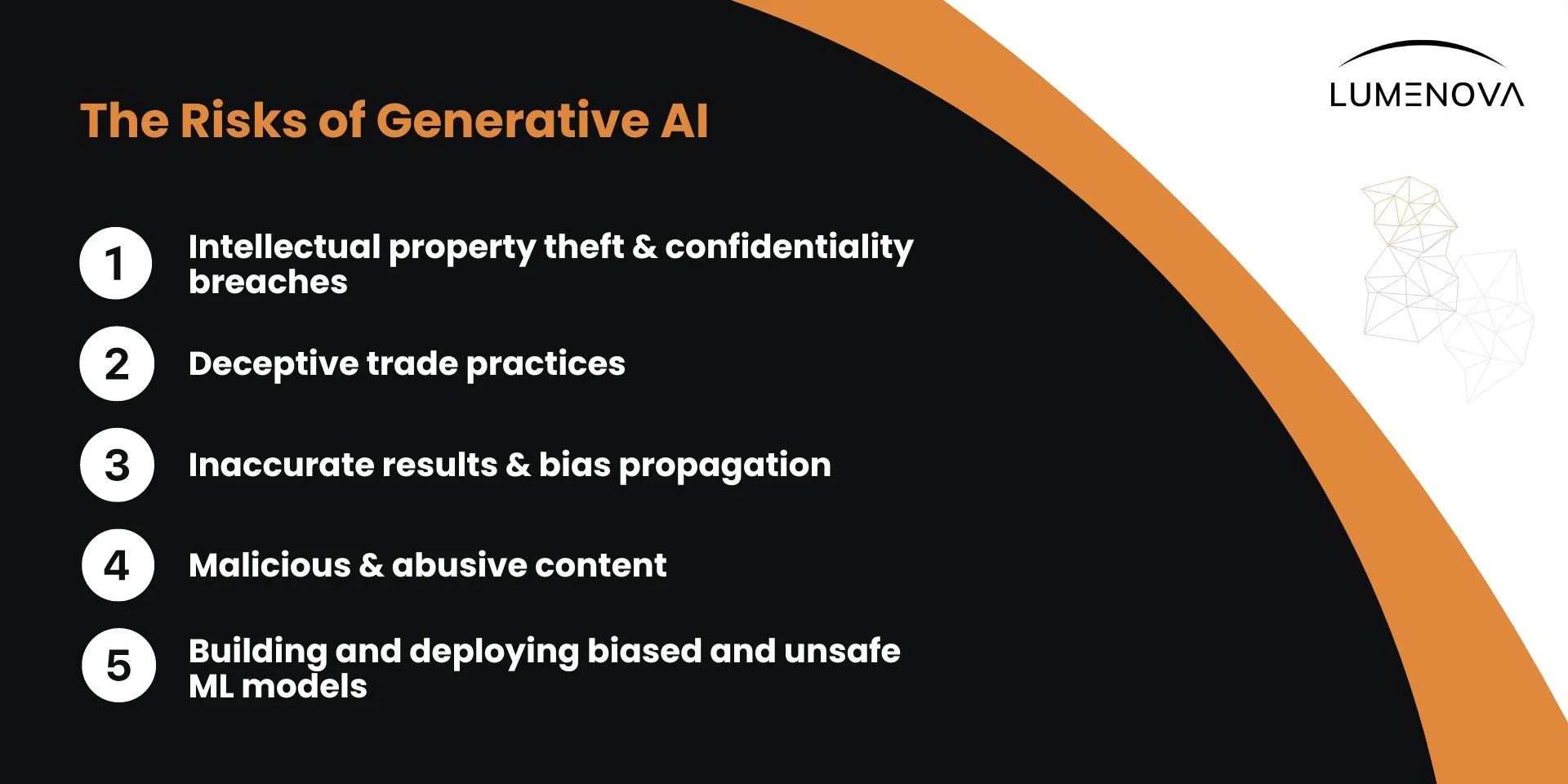

Get Acquainted with the Risks of Generative AI

Although we delved into the risks associated with Generative AI more extensively in a previous article, let’s recap the primary concerns here.

Even when a business builds internal tools on LLMs, such as the models behind ChatGPT, for its own custom uses, the risks persist, which underscores the need for robust safeguards. For instance, a model with access to personally identifiable information could generate false information or inadvertently disclose sensitive data.

Comprehensive AI and data governance protocols are therefore essential to mitigating these vulnerabilities.

Quality, context, and privacy must be the guiding principles for harnessing the true potential of language models such as those behind ChatGPT.

Stay Ahead of the Curve: Prioritize Responsible AI

According to a panel of experts interviewed by MIT, organizations that prioritize Responsible AI programs and implement AI risk management frameworks are the strategic front-runners in navigating the emerging Generative AI landscape.

Establishing a solid foundation of Responsible AI principles is not just advisable; it’s essential. Rushing into this game-changing territory without proper Responsible AI practices and risk management frameworks is a dangerous gamble. The consequences? Disruptions, severe security flaws, and significant liabilities.

Now is the time for decisive action. It is absolutely crucial for businesses to establish or adapt their Responsible AI initiatives before integrating Generative AI tools.

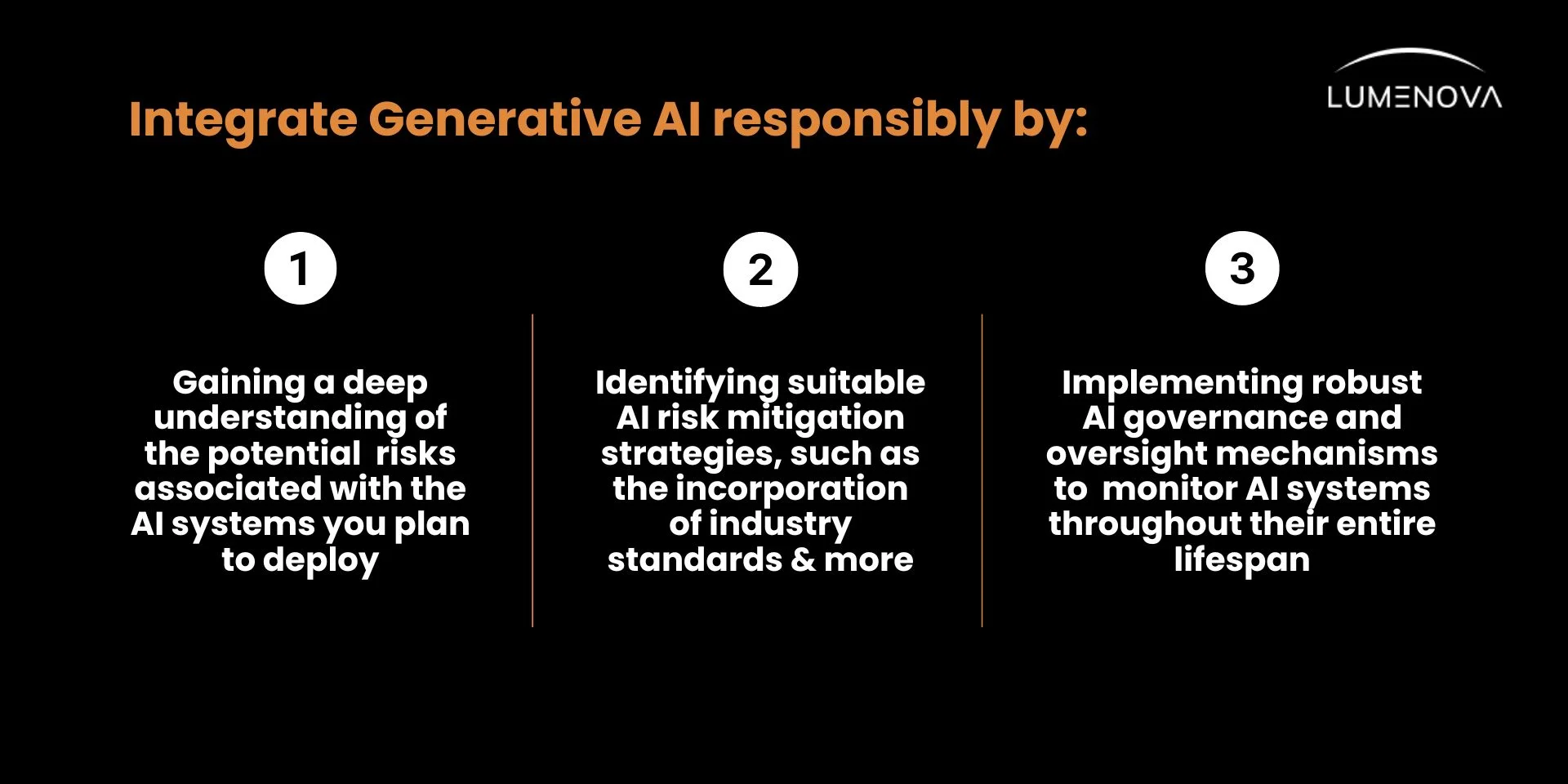

Guidelines for Implementing Effective Generative AI Governance

As organizations harness the potential of Generative AI tools, it becomes imperative to navigate the ethical dimensions they bring. Below, we present a set of guidelines that organizations can follow to develop and integrate Generative AI responsibly.

Step 1: Define Guardrails

- Start by developing policies and procedures aligned with the ethical and corporate values within your organization. Outline the various areas these policies should encompass, such as safeguarding data, protecting intellectual property (IP), cybersecurity, and more.

- Clarify what is deemed acceptable and unacceptable regarding the utilization of generative AI within your organization.

- Identify the types of information permissible for sharing in prompts and pinpoint what constitutes “sensitive data” that must not be disclosed.

- Deploy measures to safeguard your IP throughout the entire process and develop protocols for managing crises.

- If you intend to incorporate generative AI into your operations, evaluate the necessity for data protection policies and conduct assessments on the impact of data transfers. Consider the confidentiality implications and establish protocols for deleting data from the AI system, among other necessary considerations.
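
To make the "sensitive data" guardrail concrete, here is a minimal sketch of a check that redacts sensitive values from a prompt before it is sent to a Generative AI tool. The pattern list and the `redact_prompt` helper are illustrative assumptions, not part of any specific product; each organization would substitute its own definition of sensitive data.

```python
import re

# Hypothetical patterns for illustration only. A real policy would define
# its own categories of sensitive data (customer IDs, contract numbers, etc.).
SENSITIVE_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact_prompt(prompt: str) -> tuple[str, list[str]]:
    """Replace sensitive matches with placeholders.

    Returns the redacted prompt and the labels of the categories found,
    so the caller can log or block the request according to policy.
    """
    found = []
    redacted = prompt
    for label, pattern in SENSITIVE_PATTERNS.items():
        redacted, count = pattern.subn(f"[{label} REDACTED]", redacted)
        if count:
            found.append(label)
    return redacted, found


if __name__ == "__main__":
    text, labels = redact_prompt("Contact jane.doe@example.com, SSN 123-45-6789")
    print(text)
    print(labels)
```

Pattern matching of this kind only catches data with a predictable shape. It is best treated as one layer alongside the policies and training described in these guidelines, not as a replacement for them.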

Step 2: Educate and Delegate

- Designate a leader to champion Responsible AI principles company-wide.

- Empower staff with awareness of Generative AI risks and understanding of established safeguards.

- Implement training initiatives and leverage available resources for staff education.

- Cultivate a culture of transparency and accountability in Generative AI practices.

- Facilitate open dialogues for employees to inquire, discuss, and express opinions on the technology.

- Clearly communicate your organization’s position on generative AI use both internally and externally.

Step 3: Keep the Regulatory Landscape in Mind

- Ensure that your organization’s use of Generative AI adheres to pertinent legal and regulatory standards, including data privacy regulations like GDPR and CCPA, as well as intellectual property laws.

- Stay ahead of forthcoming laws or regulations that could affect your company’s Generative AI practices, such as the EU AI Act.

- Take into account industry-specific regulations that might be relevant to your organization.

Step 4: Monitor and Assess

- Set up systems to continually monitor AI-generated content and assess risks proactively.

- Establish protocols for the regular inspection of outputs, detection of biases, and timely system updates.

- Evaluate if your organization possesses the necessary tools and procedures to manage Generative AI risks effectively, including code libraries, testing procedures, and quality assurance measures.
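
As a rough illustration of what continual monitoring could look like in practice, the sketch below records each AI-generated output and flags it for human review when it matches a simple rule. The `FLAGGED_TERMS` watchlist, the `OutputRecord` structure, and the function names are all hypothetical; a production setup would rely on the organization's own policies and far richer checks, such as bias and accuracy evaluations.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical watchlist for illustration; a real one would be derived
# from the guardrails the organization has defined.
FLAGGED_TERMS = ("confidential", "internal only", "password")


@dataclass
class OutputRecord:
    prompt: str
    output: str
    reasons: list[str] = field(default_factory=list)
    logged_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    @property
    def needs_review(self) -> bool:
        # Any recorded reason routes the output to a human reviewer.
        return bool(self.reasons)


def assess_output(prompt: str, output: str) -> OutputRecord:
    """Log one generated output and note why it might need review."""
    record = OutputRecord(prompt=prompt, output=output)
    lowered = output.lower()
    for term in FLAGGED_TERMS:
        if term in lowered:
            record.reasons.append(f"contains flagged term: {term}")
    if not output.strip():
        record.reasons.append("empty output")
    return record


def review_rate(records: list[OutputRecord]) -> float:
    """Share of outputs flagged for review, a simple metric to track over time."""
    if not records:
        return 0.0
    return sum(r.needs_review for r in records) / len(records)
```

Tracking a metric such as the review rate over time gives a simple signal for when outputs are drifting and the system or its guardrails may need an update.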

Using Lumenova AI’s Generative AI Framework

Lumenova AI provides an advanced risk management framework for Generative AI, helping enterprises integrate Generative AI responsibly while preserving trust and privacy.

The framework encompasses an extensive array of requirements applicable to all AI systems, while also incorporating specific requirements tailored to Generative AI.

It will allow your organization to:

- Clearly outline the boundaries and limitations of usage, respecting confidentiality and intellectual property rights.

- Opt for a human-in-the-loop approach and encourage transparency by disclosing the system’s nature, biases, and limitations.

- Shape your Generative AI system through continuous user feedback.

- Implement measures to detect, prevent, and mitigate adversarial threats, safeguarding GenAI systems against malicious actors who deliberately manipulate inputs to produce undesirable or harmful outputs.

- Refine content using post-processing techniques, filters, and detection tools to ensure greater accuracy, compliance, and alignment.

- Implement measures to detect hallucinations and prevent the spread of misinformation.

If you’re interested in learning how it works, please get in touch with us for a product demo.