Contents

AI models are designed to identify patterns, make predictions, and optimize business decisions. However, their performance is not static. Over time, as data distributions shift and real-world conditions evolve, these models can become less accurate. This phenomenon, known as model drift, poses a significant risk to organizations that depend on AI for critical operations.

Recognizing model drift is essential for maintaining the reliability of machine learning systems. Changes in consumer behavior, market trends, and external factors can gradually erode a model’s predictive power, often without immediate warning. Without proactive monitoring and mitigation, businesses may find themselves making decisions based on outdated or misleading insights.

Understanding how and why model drift occurs is the first step in ensuring long-term AI effectiveness. In this first installment, we’ll unpack the fundamentals: what model drift is, its different types (concept drift, data drift, and more), and why early detection matters. We’ll also explore practical strategies for identifying drift before it impacts your bottom line. In Part II, we’ll dive deeper into advanced prevention tactics, real-world case studies, and future-ready solutions to keep your AI investments resilient.

Understanding ML Model Drift

To begin, let us define what model drift means in practical terms. Simply put, ML model drift refers to the phenomenon where a machine learning model’s predictive power diminishes over time due to changes in the underlying data distribution or environment.

For instance, consider a credit card fraud detection system trained on historical transaction data. Over time, as criminals develop new tactics and consumer habits evolve, the original patterns the model learned may become obsolete, resulting in decreased detection accuracy. From our experience, recognizing the signs of model drift early is key to maintaining robust and reliable systems.

Types of AI Drift

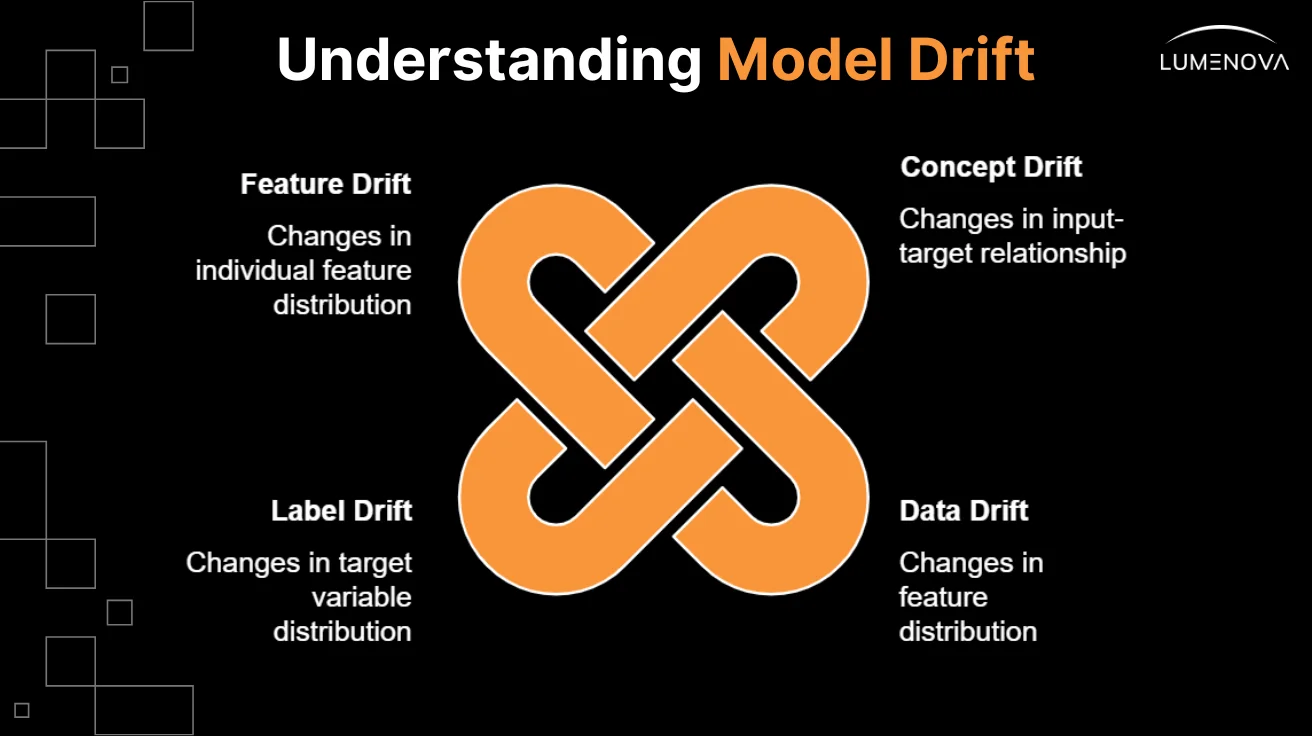

Understanding the nuances of model drift is crucial for maintaining model accuracy and reliability. Below, we outline the four primary types of drift in machine learning:

1. Concept Drift

Concept drift occurs when the relationship between the input features (x) and the target variable (y) changes over time. This type of drift directly impacts the predictive model’s performance because the underlying patterns it has learned no longer hold true.

- Sudden Drift: The relationship changes abruptly.Example: A sudden change in customer behavior due to a new competitor entering the market. In banking, this could mean an unexpected rise in loan defaults following a recession.

- Gradual Drift: The relationship changes slowly over time.Example: Slowly evolving user preferences on a social media platform. In healthcare, this could involve shifting treatment protocols for chronic diseases.

- Incremental Drift: The relationship changes in small, incremental steps.Example: Gradual changes in sensor readings due to wear and tear on machinery. In consumer goods, this could manifest as gradual shifts in consumer preferences toward eco-friendly products.

- Recurring or Seasonal Drift: The relationship changes cyclically, often in a predictable manner.Example: Seasonal changes in product demand (e.g., holiday sales spikes). In retail, predicting inventory levels becomes challenging during peak shopping seasons.

For instance, consider a retail company using an ML model to predict inventory levels. If consumer preferences shift due to seasonal trends or unexpected events (e.g., a global pandemic), the model may struggle to adapt unless updated regularly.

2. Data Drift (Covariate Shift)

Data drift, also known as covariate shift, occurs when the distribution of the input features (x) changes over time, while the relationship between the features and the target variable remains the same. Even though the underlying relationship hasn’t changed, this shift can still degrade model performance if the model was trained on a different feature distribution.

Example: Changes in user demographics on an e-commerce site, such as a significant increase in younger users over time. In finance, this could mean a growing proportion of millennial investors whose spending habits differ from older generations.

3. Label Drift (Prior Probability Shift)

Label drift happens when the distribution of the target variable (y) changes over time, without a change in the relationship between input features and the target variable. This type of drift is particularly relevant in classification problems where class proportions fluctuate.

Example: A change in the proportion of spam to non-spam emails over time. In healthcare, this could involve changing prevalence rates of certain diseases due to improved diagnostic tools or public health interventions.

4. Feature Drift

Feature drift refers to changes in the distribution of individual features. While similar to data drift, feature drift focuses specifically on the behavior of certain features rather than the overall input distribution.

Example: A sudden increase in a particular product’s sales due to a viral marketing campaign, affecting the sales feature. In banking, this could occur if there’s a surge in credit card applications after a promotional offer.

Causes of AI Drift

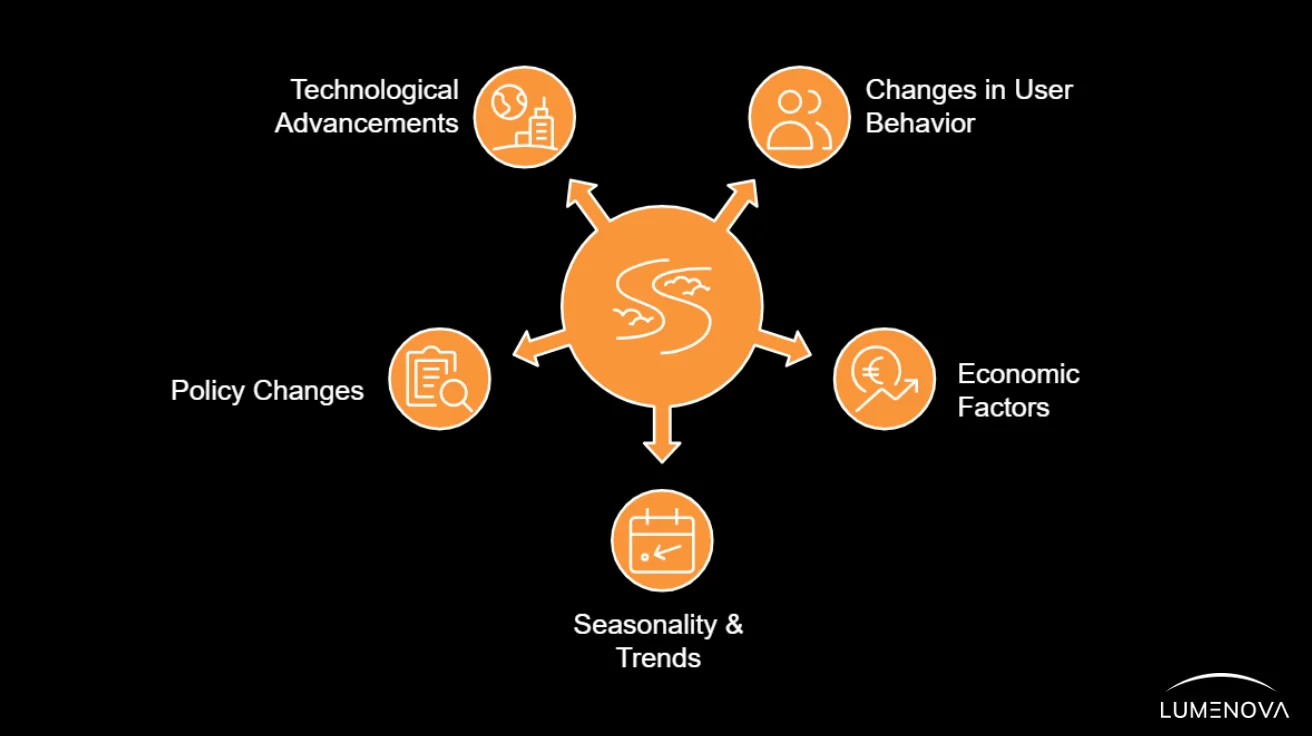

Model drift doesn’t occur in isolation, but it arises from a combination of internal and external factors. Below are some common causes of drift across industries:

Changes in User Behavior

Consumer preferences, technological advancements, and cultural shifts all influence user interactions with products or services, leading to altered datasets.

Example: A streaming service observing increased binge-watching habits during lockdowns.

Economic Factors

Economic cycles, inflation rates, unemployment levels, and regulatory changes can significantly impact business operations and, consequently, predictive models.

Example: A financial institution noticing higher default rates during an economic downturn.

Seasonality & Trends

Seasonal variations or emerging trends can introduce temporary fluctuations that skew long-term predictions unless accounted for properly.

Example: Retailers facing spikes in demand during holiday seasons.

Policy Changes

Regulatory updates or organizational policy modifications might necessitate changes in operational procedures reflected in collected data streams.

Example: Healthcare providers adapting to new compliance requirements after legislative changes.

Technological Advancements

Innovations in technology can render existing models obsolete, requiring updates to keep pace with modern standards.

Example: An autonomous vehicle manufacturer updating its object detection algorithms to incorporate LiDAR data.

Why Early AI Drift Detection Matters

Detecting model drift early can mean the difference between a minor adjustment and a costly system overhaul. AI models often degrade gradually, and if the decline is not addressed in time, businesses can face serious financial and operational consequences.

Take an e-commerce recommendation engine as an example. If consumer preferences shift due to emerging trends, an undetected AI drift could lead to irrelevant product suggestions. This not only reduces customer engagement but also impacts revenue. Similarly, in financial services, a credit risk model that does not adjust to economic shifts may approve high-risk loans or deny creditworthy applicants, increasing both financial exposure and customer dissatisfaction.

Early detection enables proactive intervention, allowing data teams to recalibrate models before they cause major disruptions. By continuously monitoring performance metrics and distribution changes, organizations can prevent drift from eroding business value.

Detecting AI Drift

As mentioned earlier, detecting model drift early is essential for maintaining system reliability. Several tools and techniques can help identify potential issues before they escalate:

1. Statistical Tests

Utilizing statistical tests like the Kolmogorov-Smirnov test or Chi-square test allows us to compare distributions across periods, highlighting significant deviations indicative of possible drift.

Example: Comparing the distribution of transaction amounts before and after a major marketing campaign.

Key Statistical Techniques:

- Kolmogorov-Smirnov Test: Measures the maximum difference between two cumulative distribution functions (CDFs).

- Chi-Square Test: Compares observed frequencies with expected frequencies in categorical data.

2. Monitoring Dashboards

Implementing real-time dashboards provides visual cues regarding key performance metrics such as precision, recall, F1 score, etc., enabling swift intervention upon noticing declines.

Example: A fraud detection system triggering alerts when transaction patterns deviate from expected norms.

Dashboard Features:

- Real-Time Alerts: Notify stakeholders of anomalies as they occur.

- Historical Trends: Visualize performance over time to identify gradual changes.

- Drill-Down Capabilities: Investigate specific areas of concern in greater detail.

3. Automated Alerts

Setting thresholds based on acceptable variance ranges triggers alerts automatically when breaches occur, facilitating timely adjustments.

Example: A manufacturing plant receiving notifications when equipment sensors detect abnormal vibrations.

Best Practices for Automated Alerts:

- Define thresholds dynamically based on historical data.

- Prioritize alerts based on severity and potential impact.

- Integrate alerts with incident management systems for seamless resolution.

4. AI Drift Detection Algorithms

Specialized algorithms designed to monitor and quantify drift can provide actionable insights into when and where drift occurs.

Example: Using ADWIN (Adaptive Windowing) to detect gradual changes in data streams.

Our Responsible AI Platform helps businesses track and manage ML model performance, providing insights that support proactive drift detection and mitigation.

Conclusion

Model drift isn’t a question of if but when. By understanding its types (from sudden concept shifts to gradual data decay) businesses can move from reactive firefighting to proactive governance. Tools like statistical tests, monitoring dashboards, and automated alerts act as early warning systems, buying critical time to recalibrate models.

For leaders, this isn’t just about preserving accuracy, but also about safeguarding ROI. A retail chain catching seasonal demand shifts early avoids stockouts; a bank detecting fraud patterns in real-time prevents losses. In Part II, we’ll explore how to turn these early signals into long-term resilience through advanced retraining frameworks, ethical AI practices, and cutting-edge technologies like edge computing. Stay tuned to future-proof your AI strategy.

For insights into the evolving landscape of AI governance and risk mitigation, visit our blog. If you’re ready to strengthen your AI strategy and implement proactive monitoring, book a demo today to see how Lumenova AI can help.