September 17, 2024

The Strategic Necessity of Human Oversight in AI Systems


Organizations across the globe are leveraging AI to drive innovation, streamline operations, and gain competitive advantages. At Lumenova AI, we’ve observed how AI can transform industries—optimizing complex supply chains, enhancing decision-making processes, and creating new growth opportunities. As AI technologies become more deeply integrated into critical business operations, the need for strong human oversight is increasingly clear. While AI’s potential is vast, the risks associated with its unchecked use are equally significant.

In this article, we explain why human oversight is not merely beneficial but essential for AI deployment initiatives. We examine the strategic risks posed by unmonitored AI, discuss the roles humans play in guiding AI technologies, and describe how we support organizations in implementing effective oversight mechanisms. Our aim is to show how people can ensure that AI systems operate ethically, transparently, and effectively, in line with both organizational values and long-term strategic goals.

Human oversight is the bridge that connects AI’s technical potential with the organization’s broader mission and values, ensuring that AI-driven innovations do not come at the expense of fairness, accountability, and trust.

The Expanding Role of AI in Enterprise Strategy

Today, AI is integral to a wide range of business functions, from automating routine tasks to providing deep insights through predictive analytics. Companies that harness AI effectively can unlock new levels of efficiency, innovation, and strategic advantage. For instance, AI-enabled supply chain optimization is helping retailers predict demand surges more accurately, supporting just-in-time inventory management.

In the healthcare sector, AI-driven diagnostic tools are identifying early-stage diseases with precision, enabling proactive patient care and reducing treatment costs. Meanwhile, in energy management, AI models are optimizing power distribution in smart grids, balancing renewable and traditional energy sources to reduce waste and improve efficiency. In financial services, AI models are used to assess risk, detect fraud, and personalize financial advice. The strategic benefits of AI are clear—those who leverage it effectively can outpace their competitors, reduce costs, and create more targeted customer experiences.

However, with these advancements comes a critical question: How is your organization ensuring that AI is not only driving innovation but also adhering to ethical and operational standards? This question is central to maintaining the integrity of AI initiatives, ensuring that they deliver value without compromising ethical or legal responsibilities.

The strategic integration of AI into business operations also demands a reevaluation of traditional governance models. AI systems often behave differently from traditional software or rule-based decision processes. Models may be retrained as new data arrives, and even a model that is never retrained can degrade when the data it sees in production drifts away from the data it was trained on. Both make it difficult to rely on static rules or one-time reviews. This dynamic nature of AI calls for a governance approach that is flexible and continuous, with regular monitoring and adjustments to keep systems aligned with organizational goals and standards.
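To make "regular monitoring" concrete, the sketch below computes the Population Stability Index (PSI), one common statistic for checking whether the inputs a model sees in production have shifted away from a baseline sample. It is a minimal Python illustration under stated assumptions, not Lumenova AI's method: the function name `psi`, the ten equal-width bins taken from the baseline's range, the small `eps` floor for empty bins, and the toy data are all illustrative choices.

```python
import math

def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a baseline sample and a recent one.

    Bin edges are equal-width over the baseline's range (an assumption);
    values outside that range are clipped into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid division by zero for constant data

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor empty bins at eps so the logarithm below stays finite.
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    # Each term is non-negative, so PSI is 0 only when the binned shares match.
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Hypothetical feature values: a training-time baseline and a shifted recent batch.
baseline = [i / 100 for i in range(100)]      # spread evenly over 0.00-0.99
recent = [0.3 + i / 200 for i in range(100)]  # concentrated in 0.30-0.795

print(f"PSI, baseline vs itself: {psi(baseline, baseline):.3f}")
print(f"PSI, baseline vs recent: {psi(baseline, recent):.3f}")
```

A commonly cited rule of thumb treats PSI values above roughly 0.25 as a significant shift worth a human look, but the alert threshold is a governance decision, not a property of the statistic.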

Furthermore, AI initiatives often span multiple business functions, from IT and operations to legal and compliance teams. This interdisciplinary involvement requires a governance framework that not only integrates technical oversight but also includes input from various stakeholders who can assess the broader implications of AI-related decisions. Such a framework ensures that AI systems are aligned with the organization’s values and objectives, while also being adaptable to the ever-changing technological landscape.

In light of these considerations, the role of human oversight is increasingly important. While AI can process vast amounts of data and make decisions at speeds that far exceed human capabilities, it is the human element that ensures these decisions are made within the bounds of ethical, legal, and strategic considerations.

The Strategic Risks of Unmonitored AI Systems

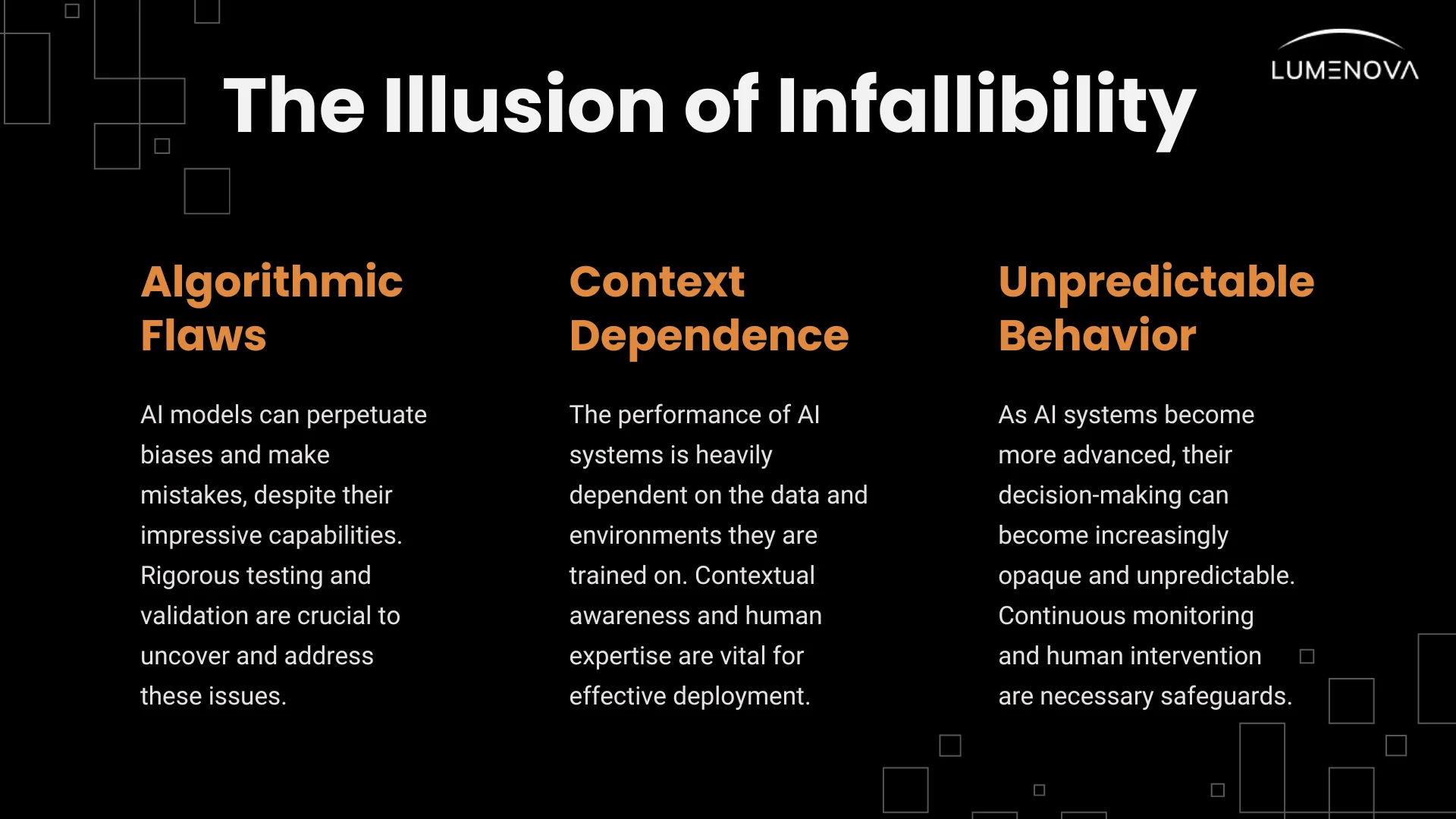

As AI systems take on more complex and autonomous roles within organizations, the risks of leaving them unchecked grow accordingly. While AI offers powerful capabilities for processing data and automating decisions, it is not infallible. Unmonitored AI can produce unintended consequences that significantly affect an organization’s operations, reputation, and compliance with regulatory standards.

1. Bias and Discrimination

One of the most significant risks associated with unmonitored AI is the potential for bias and discrimination. AI systems are only as good as the data they are trained on, and if that data reflects historical biases—whether related to race, gender, or socioeconomic status—these biases can be perpetuated and even amplified by AI systems. For instance, an AI-driven recruitment platform might inadvertently favor candidates from certain demographics based on biased training data, leading to discriminatory hiring practices.

The strategic consequences of unchecked biases are far-reaching. Organizations that fail to address bias in their AI systems may face reputational damage, legal liabilities, and a loss of stakeholder trust. In some cases, biased AI decisions can lead to regulatory penalties, especially in highly regulated industries such as finance or healthcare. Moreover, the social impact of biased AI can result in public backlash, further eroding the organization’s credibility and standing in the market.

Addressing bias in AI requires a multi-faceted approach that combines technical tools with human oversight. Technically, organizations can implement bias detection tools and fairness metrics to monitor AI outputs and identify potential biases. However, these tools alone are not enough. Human-in-the-loop review is essential to interpret the results of these tools, make informed decisions on how to mitigate bias, and ensure that the AI system aligns with the organization’s values and ethical standards. How confident are you that your organization has the right oversight mechanisms in place to detect and mitigate these biases before they cause harm?
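As an illustration of the kind of fairness metric mentioned above, the following minimal Python sketch compares selection rates across groups and flags the result for human review when the ratio between the lowest and highest rate falls below a threshold. The function names, the toy data, and the default threshold of 0.8 (borrowed from the "four-fifths" heuristic in US employment-selection guidance) are assumptions for illustration. The code only computes the numbers; deciding what they mean and how to respond is where human judgment comes in.

```python
from collections import defaultdict

def selection_rates(decisions, groups):
    """Return the share of positive decisions (1) for each group label."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}

def fairness_report(decisions, groups, min_ratio=0.8):
    """Compute a demographic parity difference and a disparate impact ratio.

    min_ratio defaults to the hypothetical 0.8 ("four-fifths") cutoff; the
    right threshold is a policy decision for the reviewing team.
    """
    rates = selection_rates(decisions, groups)
    highest = max(rates.values())
    lowest = min(rates.values())
    ratio = lowest / highest if highest > 0 else 1.0
    return {
        "selection_rates": rates,
        "parity_difference": highest - lowest,
        "impact_ratio": ratio,
        "needs_human_review": ratio < min_ratio,
    }

# Hypothetical screening outcomes: 1 = advanced to interview, 0 = rejected.
decisions = [1, 1, 0, 1, 0, 1, 0, 0, 0, 1]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]
report = fairness_report(decisions, groups)
print(report)
```

On this toy data, group A is selected at 3/5 = 0.6 and group B at 2/5 = 0.4, a ratio of about 0.67, so the report is flagged for review.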

Lumenova AI places a strong emphasis on continuous monitoring of AI systems to detect potential bias and keep them operating within ethical boundaries. We work closely with organizations to establish robust oversight frameworks that include regular audits, validation processes, and the involvement of diverse teams in the development and deployment of AI systems. This approach not only helps identify and address biases early but also fosters a culture of accountability and fairness across the organization.

2. Transparency and Explainability

As AI models, particularly those based on deep learning, grow in complexity, they often become opaque or “black box” systems. This lack of transparency can pose significant risks, especially in sectors where decisions need to be auditable and explainable. For instance, in financial services or healthcare, the inability to explain how an AI model arrived at a particular decision can lead to compliance issues and erode trust among stakeholders.

In highly regulated industries, transparency is not just a best practice; it is often a legal requirement. For example, the European Union’s General Data Protection Regulation (GDPR) entitles individuals subject to solely automated decisions with legal or similarly significant effects to meaningful information about the logic involved, and its recitals refer to a right to obtain an explanation of such decisions. Similarly, financial institutions must ensure that their AI-driven credit scoring models are transparent and auditable to prevent discriminatory practices. Failure to comply with these requirements can result in significant legal and financial consequences.

Beyond legal compliance, transparency is also critical for building trust with customers, partners, and regulators. When AI systems make decisions that directly impact individuals—such as approving a loan, diagnosing a medical condition, or determining the outcome of a legal case—stakeholders need to understand the reasoning behind these decisions. Lack of transparency can lead to mistrust, particularly if the AI system makes an unexpected or controversial decision.

Human oversight plays a crucial role in keeping AI systems transparent and their decisions explainable. This involves not only monitoring the outputs of AI models but also understanding the underlying algorithms and data that drive these decisions. AI vendors and developers should prioritize explainable AI (XAI) techniques that help human experts interpret AI-driven decisions and communicate them clearly to stakeholders. Moreover, transparency should be embedded in the design and deployment of AI systems, so that all relevant parties, from developers to end users, understand how the AI operates and what factors influence its decisions. What steps is your organization taking to ensure that AI-driven decisions are transparent and can be clearly communicated to both internal and external stakeholders?
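For simple models, explanations can be computed exactly. The sketch below assumes a hypothetical linear credit-scoring model, where each feature's contribution to a score, relative to a baseline applicant, is its weight times the difference from the baseline value. Ranking those contributions yields a short list of the factors that most lowered the score, which a human reviewer can translate into plain language. The weights, feature names, and baseline are invented for illustration; for non-linear models, methods such as SHAP generalize the same idea of attributing a prediction to its inputs.

```python
def explain_linear_score(weights, baseline, applicant, top_n=3):
    """Rank features by how much they moved this applicant's score away
    from a baseline (e.g. an average applicant).

    For a linear model, score = bias + sum(w_i * x_i), so each feature's
    contribution relative to the baseline is exactly w_i * (x_i - b_i).
    """
    contributions = {
        name: weights[name] * (applicant[name] - baseline[name])
        for name in weights
    }
    # Most negative contributions first: these lowered the score the most.
    ranked = sorted(contributions.items(), key=lambda kv: kv[1])
    return ranked[:top_n]

# Hypothetical model weights and feature values (standardized units).
weights = {"income": 0.8, "debt_ratio": -1.2, "late_payments": -0.9, "tenure_years": 0.3}
baseline = {"income": 0.0, "debt_ratio": 0.0, "late_payments": 0.0, "tenure_years": 0.0}
applicant = {"income": -0.5, "debt_ratio": 1.5, "late_payments": 1.0, "tenure_years": 1.0}

for feature, delta in explain_linear_score(weights, baseline, applicant):
    print(f"{feature}: {delta:+.2f}")
```

Here `debt_ratio` (-1.80), `late_payments` (-0.90), and `income` (-0.40) come out as the three factors that pulled the score down the most.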

Lumenova AI supports organizations in developing and implementing XAI frameworks that enhance the transparency and explainability of AI systems. We provide a platform that enables organizations to audit their AI models, generate human-understandable explanations, and ensure compliance with regulatory requirements. By making AI decisions more transparent, organizations can build trust with their stakeholders and mitigate the risks associated with opaque AI systems.

3. Operational Errors and Legal Risks

Even the most sophisticated AI systems make errors, particularly when they encounter data or scenarios that differ from those seen in training. These errors can range from minor inaccuracies to significant operational disruptions. For example, a fraud-detection model at a financial institution might flag legitimate transactions as fraudulent because it misreads an unusual but benign pattern, blocking customer payments and causing dissatisfaction.

The strategic impact of such errors can be substantial, in both financial losses and reputational damage. Losses are greatest when AI drives critical decision-making processes such as trading, risk assessment, or supply chain management. Errors in AI-driven customer service systems can also produce poor customer experiences, leading to lost business and brand damage.

Beyond operational risks, AI errors can also pose legal challenges. For instance, if an AI system incorrectly denies a customer’s loan application or misdiagnoses a patient’s condition, the organization may face legal liabilities, including lawsuits and regulatory fines. These legal risks are particularly pronounced in industries where decisions made by AI systems have a direct impact on individuals’ lives, such as healthcare, finance, and law enforcement.

Meaningful human oversight is essential to prevent and mitigate these operational and legal risks. This oversight should include rigorous validation and testing of AI models before deployment, as well as continuous monitoring to detect and correct errors in real time. Organizations should also establish clear protocols for human intervention in AI-driven processes, so that errors are identified and resolved before they escalate into significant issues. Has your organization implemented comprehensive oversight protocols that can quickly detect and correct these operational errors before they escalate?
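One simple form of intervention protocol is to let a model act automatically only when it is confident and the stakes are low, and to queue everything else for a person. The sketch below illustrates that pattern in Python; the `Prediction` and `Router` names, the 0.9 confidence threshold, and the `high_impact` flag are hypothetical, and a real deployment would also need calibrated confidence scores and an agreed definition of what counts as high impact.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class Prediction:
    case_id: str
    label: str          # e.g. "fraud" or "legitimate"
    confidence: float   # model's score for the label, between 0 and 1
    high_impact: bool   # e.g. acting on it would block a customer's payment

@dataclass
class Router:
    threshold: float = 0.9  # hypothetical cutoff set by the oversight team
    review_queue: List[Prediction] = field(default_factory=list)
    auto_applied: List[Prediction] = field(default_factory=list)

    def route(self, pred: Prediction) -> str:
        """Send uncertain or high-impact predictions to a human reviewer."""
        if pred.confidence < self.threshold or pred.high_impact:
            self.review_queue.append(pred)
            return "human_review"
        self.auto_applied.append(pred)
        return "auto"

router = Router(threshold=0.9)
batch = [
    Prediction("tx-001", "legitimate", 0.98, high_impact=False),
    Prediction("tx-002", "fraud", 0.72, high_impact=True),
    Prediction("tx-003", "fraud", 0.95, high_impact=True),
    Prediction("tx-004", "legitimate", 0.61, high_impact=False),
]
for p in batch:
    print(p.case_id, router.route(p))
```

With these inputs, only `tx-001` is applied automatically; `tx-002` and `tx-004` fall below the threshold, and `tx-003` is confident but high impact, so all three go to a reviewer.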

Lumenova AI partners with organizations to develop robust oversight frameworks that ensure AI systems are thoroughly validated, monitored, and aligned with legal and ethical standards.

Our conclusion?

While AI systems offer unparalleled capabilities in processing data and automating decisions, they are not without their flaws and risks. Bias, lack of transparency, operational errors, and legal challenges are just some of the issues that can arise when AI systems are left unchecked.

Human review is the key to mitigating these risks and ensuring that AI systems operate in alignment with ethical standards and organizational goals. By embedding human judgment, ethical considerations, and strategic oversight into AI processes, organizations can unlock the full potential of AI while safeguarding against unintended consequences.

At Lumenova AI, we are committed to helping organizations implement effective control and oversight mechanisms that enhance the transparency, accountability, and reliability of their AI systems. Whether you’re looking to address biases, improve explainability, or mitigate operational risks, our solutions are designed to support you at every stage of your AI journey.

Ready to see how Lumenova AI can help you harness the power of AI responsibly? Book a demo today and discover how our AI governance solutions can elevate your organization’s AI strategy.

Frequently Asked Questions

Human oversight ensures AI systems operate within ethical, legal, and strategic boundaries. It helps mitigate bias, improve transparency, and prevent operational errors that could lead to reputational or financial risks.

Organizations can implement bias detection tools, conduct regular audits, and ensure diverse teams oversee AI development. Human review is crucial for interpreting AI outputs and aligning them with fairness and ethical standards.

Unmonitored AI can lead to biased decision-making, opaque ‘black box’ systems, operational failures, and legal liabilities. Without oversight, AI systems may drift from intended objectives, causing unintended harm.

Human oversight helps interpret AI decisions, ensure compliance with explainability requirements, and establish governance frameworks. Explainable AI (XAI) techniques allow stakeholders to understand how AI models arrive at conclusions.

Businesses should develop AI governance frameworks, establish auditing and monitoring protocols, train employees in AI literacy, and create intervention mechanisms where human judgment is required in critical AI-driven processes. Platforms like Lumenova AI help streamline these efforts by offering centralized oversight, automated risk tracking, and compliance tools tailored for responsible AI governance.