The banking sector has taken a transformative leap forward by integrating Artificial Intelligence (AI) into many business processes. Financial institutions now leverage AI to streamline operations, enhance customer service through chatbots and personalized banking experiences, and bolster security by detecting fraudulent transactions with greater speed and accuracy.

According to the McKinsey Global Institute (MGI), financial institutions that use generative AI (gen AI) effectively have developed a specific, customized operating model that addresses the unique nuances and risks of the new technology, instead of attempting to fit gen AI into their existing operating model. In AI-driven banking, navigating the complex web of compliance and regulatory requirements is critical. Banks must ensure their AI systems operate within legal frameworks to avoid substantial penalties, reputational damage, and the erosion of customer trust.

Understanding these regulations is not only about legal compliance but also about safeguarding ethical standards and transparency in AI implementations. Banks need to stay ahead of the evolving regulatory landscape to harness AI’s potential responsibly while maintaining public confidence and meeting the stringent demands of global oversight.

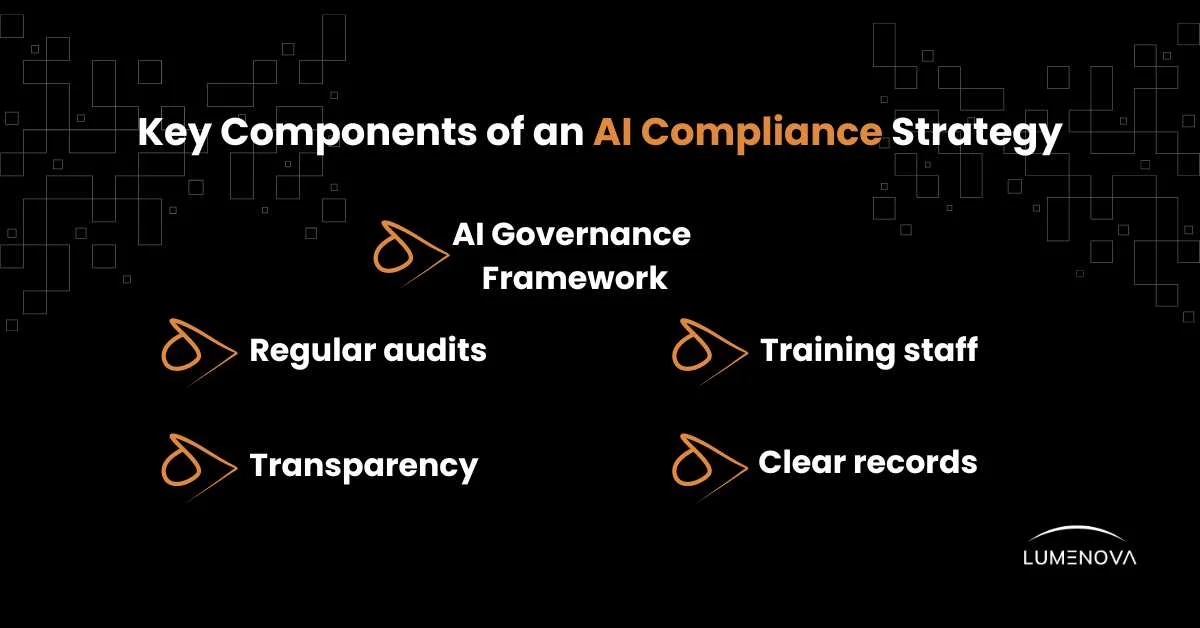

Key Components of an AI Compliance Strategy

AI-driven banking needs ongoing commitment and foresight to adapt to the changing technological landscape and regulatory environment. Five key components need to be addressed when designing an AI compliance strategy:

- Creating an AI governance framework.

- Ensuring transparency in AI decision-making processes.

- Training staff on ethical AI practices.

- Maintaining clear records for regulatory reviews.

- Conducting regular audits and updates of AI systems.

By integrating a sound strategy, organizations not only safeguard against potential liabilities but also position themselves as responsible innovators in the field of AI, which helps earn the trust and confidence of their clients, stakeholders, and the wider community.
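To make the record-keeping component more concrete, here is a minimal sketch of a tamper-evident decision log. Everything in it is an assumption made for illustration: the `DecisionRecord` fields, the `AuditLog` class, and the choice of SHA-256 hash chaining are hypothetical and are not prescribed by any regulation mentioned in this article.

```python
import hashlib
import json
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class DecisionRecord:
    """One automated decision kept for regulatory review (hypothetical schema)."""
    decision_id: str
    model_name: str
    model_version: str
    timestamp: str      # ISO 8601 string supplied by the caller
    inputs: dict        # features the model saw
    outcome: str        # e.g. "approved" or "declined"
    explanation: str    # plain-language reason given to the customer


class AuditLog:
    """Append-only, in-memory log where each entry's hash covers the previous one."""

    GENESIS = "0" * 64  # placeholder hash used before the first entry

    def __init__(self):
        self._entries = []  # list of (record_dict, chain_hash) tuples

    @staticmethod
    def _chain(prev_hash: str, record_dict: dict) -> str:
        payload = json.dumps(record_dict, sort_keys=True)
        return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()

    def append(self, record: DecisionRecord) -> str:
        prev_hash = self._entries[-1][1] if self._entries else self.GENESIS
        record_dict = asdict(record)
        chain_hash = self._chain(prev_hash, record_dict)
        self._entries.append((record_dict, chain_hash))
        return chain_hash

    def verify(self) -> bool:
        """Recompute every hash; any edited entry breaks the chain."""
        prev_hash = self.GENESIS
        for record_dict, stored_hash in self._entries:
            if self._chain(prev_hash, record_dict) != stored_hash:
                return False
            prev_hash = stored_hash
        return True


log = AuditLog()
log.append(DecisionRecord(
    "d-001", "credit_scoring", "1.2.0", "2024-01-15T09:30:00Z",
    {"income": 52000, "debt_ratio": 0.41}, "declined",
    "Debt-to-income ratio above policy limit",
))
print(log.verify())
```

A production system would write to durable, access-controlled storage rather than a Python list, but the idea carries over: each record captures which model version made the decision and why, so it can be reproduced during a review.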

Adopting the Right Type of Operating Model

Think of an operating model as the playbook for a strategy game: it sets out who makes the decisions, how things should turn out, and how new technology should be handled. The banks that use AI effectively didn’t just try to fit new AI tech into their old routine. They crafted a strategy to understand the power of AI and how it should be handled.

This means that the right operating model should make sure the financial institution efficiently carries out these three types of activities:

- Strategic steering to identify AI use cases that align with the bank’s objectives, sorting them and turning them into a road map.

- Standard setting, which deals with data and practices, risk frameworks, and common standards, in order to learn from past projects that proved successful.

- Execution, where the use cases are tested against performance and safety criteria before being taken to production, with the aim of scaling them later on.

For a financial institution that has decided to dive into AI, the best course of action is to adopt an operating model that not only scales but also fits the company. A model that’s build-ready and flexible is key to adopting and using AI the right way.
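The execution activity described above, where a use case is tested against performance and safety criteria before going to production, can be sketched as a simple release gate. This is only an illustration: the `GateCriterion` class, the `evaluate_gate` function, the metric names, and the thresholds are all hypothetical, and each bank would define its own criteria during standard setting.

```python
from dataclasses import dataclass


@dataclass
class GateCriterion:
    """A single pass/fail check on one metric (hypothetical structure)."""
    name: str
    threshold: float
    higher_is_better: bool = True

    def passes(self, value: float) -> bool:
        if self.higher_is_better:
            return value >= self.threshold
        return value <= self.threshold


def evaluate_gate(metrics: dict, criteria: list) -> tuple:
    """Return (approved, failures); a missing metric counts as a failure."""
    failures = []
    for criterion in criteria:
        if criterion.name not in metrics:
            failures.append(f"{criterion.name}: metric not reported")
        elif not criterion.passes(metrics[criterion.name]):
            failures.append(
                f"{criterion.name}: {metrics[criterion.name]} "
                f"does not meet threshold {criterion.threshold}"
            )
    return (not failures, failures)


# Illustrative thresholds only; these are not regulatory values.
criteria = [
    GateCriterion("fraud_recall", 0.90),
    GateCriterion("false_positive_rate", 0.05, higher_is_better=False),
]
approved, failures = evaluate_gate(
    {"fraud_recall": 0.93, "false_positive_rate": 0.08}, criteria
)
print(approved, failures)
```

In this example the model clears the recall check but fails the false-positive check, so the gate withholds approval and reports the reason. Treating an unreported metric as a failure is a deliberate choice here: it keeps a use case from reaching production just because a test was skipped.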

International Regulatory Frameworks for AI in Banking

In the dynamic landscape of AI banking, several international regulatory frameworks shape the responsible deployment of AI:

- In Europe, the General Data Protection Regulation (GDPR) sets the benchmark for data protection, influencing AI use by enforcing customer consent, data minimization, and the right to opt out of data processing. The EU AI Act, in turn, requires financial institutions to implement robust risk management, ensure data quality, provide transparency, maintain human oversight, document AI processes, report incidents, adhere to ethical standards, comply with financial regulations, and engage stakeholders to balance AI benefits with consumer protection and financial stability.

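- The United States addresses data privacy and automated decision-making technology through a mix of state rules, proposed federal legislation, voluntary frameworks, and executive action, such as the California Privacy Protection Agency’s ADMT regulations (CPPA ADMT), the Colorado Privacy Act (CPA), the proposed American Data Privacy and Protection Act, the NIST AI Risk Management Framework (AI RMF), and President Biden’s Executive Order on Safe, Secure, and Trustworthy AI.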

- Canada has also introduced the Digital Charter Implementation Act, which contains three major acts, one of which is the Artificial Intelligence and Data Act (AIDA). When it comes to banking, however, only the Office of the Superintendent of Financial Institutions (OSFI) has issued guidance, in the form of Guideline E-23: Enterprise-Wide Model Risk Management for Deposit-Taking Institutions, which offers only some recommendations for AI oversight.

- Singapore’s Model AI Governance Framework provides comprehensive guidance for AI deployment, prioritizing ethical use and human-centric practices.

- India is developing frameworks through institutions like NITI Aayog that emphasize AI for social empowerment.

These diverse regulations demand that financial institutions adopt a globally aware approach to AI policy and practices to navigate the complexities of cross-border operations.

What Does the Future of AI in Banking Hold?

AI compliance in banking is on track to become increasingly complex and integral to financial operations. As AI technologies evolve and become more ingrained in banking services, regulatory bodies worldwide are expected to intensify their oversight and update existing frameworks to address emerging risks and ethical concerns. We anticipate a movement towards harmonizing international regulations, fostering a global consensus on AI use to facilitate cross-border financial services while maintaining robust risk management.

Collaboration between regulators and the banking industry will likely grow, aimed at developing standards that balance innovation with consumer protection. Financial institutions might be required to implement more rigorous governance structures for AI, including transparent algorithms and audit trails, to explain automated decisions to regulators and customers. Enhanced measures to ensure data privacy, fairness in lending, and robust cybersecurity protocols will be non-negotiable components of compliance.

Moreover, as part of ensuring responsible AI implementation, banks may have to demonstrate the ethical use of AI, which includes addressing bias and ensuring inclusivity in AI-driven services. AI-specific certifications and dedicated compliance expertise could become a trend as institutions seek to navigate the intricate landscape of AI regulations. Ultimately, ongoing education and adaptability will be critical for banks as the compliance landscape evolves rapidly in tandem with AI advancements.
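As one illustration of what addressing bias in lending can look like in practice, the sketch below compares approval rates across customer groups and computes the ratio of the lowest rate to the highest. The function names, the sample data, and the 0.8 review threshold are assumptions for this example. The threshold borrows the "four-fifths" rule of thumb from US employment guidance and is not a banking requirement drawn from the frameworks discussed above.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Compute the approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            approvals[group] += 1
    return {group: approvals[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group approval rate divided by the highest."""
    if not rates:
        raise ValueError("no decisions to evaluate")
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group had any approvals, so there is no gap to measure
    return min(rates.values()) / highest


# Made-up sample: group A has 8 of 10 approved, group B has 5 of 10.
sample = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)
rates = approval_rates(sample)
ratio = disparate_impact_ratio(rates)

REVIEW_THRESHOLD = 0.8  # assumed rule of thumb, not a regulatory value
needs_review = ratio < REVIEW_THRESHOLD
print(rates, round(ratio, 3), needs_review)
```

With this sample the ratio is about 0.625, below the assumed threshold, so the model would be flagged for human review. A single ratio like this is a screening signal rather than proof of unfair treatment; a fuller assessment would also consider legitimate risk factors and other fairness metrics.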

Lead Your Organization to Responsible AI with Lumenova AI

Lumenova’s Responsible AI (RAI) platform provides a holistic strategy that addresses AI-related risks and biases with precision. We designed our platform to elevate the transparency and responsibility of your AI systems, ensuring that ethical standards and fairness are always upheld in decision-making processes. We put great emphasis on these issues, which means our RAI platform can empower your organization to cultivate an inclusive and responsible AI environment.

Discover how Lumenova AI’s RAI platform can help your organization navigate the complexities of AI deployment by requesting a demo, or contact our AI experts for more details.

Frequently Asked Questions

An effective AI compliance strategy includes a clear governance framework, transparent AI decision-making, staff training on ethical practices, thorough regulatory documentation, and regular system audits and updates.

Banks should adopt a scalable and flexible AI operating model that includes strategic steering to align AI use cases with business goals, standard setting for risk and compliance management, and execution frameworks for testing, evaluation, verification, and validation (TEVV) and for deploying AI models responsibly.

Key regulations include the EU AI Act, GDPR, the US CPPA ADMT regulations and NIST AI RMF, Canada’s OSFI guidelines, Singapore’s Model AI Governance Framework, and India’s AI initiatives. These frameworks shape compliance requirements for AI-driven financial services.

Regulations will likely intensify, requiring stronger AI governance, transparency, bias mitigation, and ethical oversight. Banks will need to implement more rigorous compliance measures, including AI audit trails, robust consumer protections, and enhanced data and cybersecurity protocols.

Lumenova AI’s Responsible AI (RAI) platform provides end-to-end AI governance solutions, ensuring compliance, fairness, and transparency in AI-driven financial services. The platform helps banks mitigate AI risks, address bias, and streamline regulatory alignment.