July 31, 2025

Enterprise AI Governance: For a Better Strategy, Incorporate Explainable AI

Enterprise AI governance faces a high-stakes decision, and in our opinion there is only one correct answer: build Explainable AI (XAI) principles and techniques into daily operations, and the sooner, the better. Why is that?

Look no further than CNN’s report on the FDA’s Elsa agent fabricating medical studies. Or take the Cigna algorithm scandal of 2023, in which insurance claims were wrongly rejected without patient files even being opened. In both scenarios, the stakes were life-altering. The common thread is a critical decision driven by an algorithm whose inner workings are opaque and whose outputs have drifted away from the behavior it was originally trained for. When the applicant or stakeholder rightfully asks, “Why?”, a simple answer of “the computer decided” is not just insufficient; it’s a massive legal, ethical, and commercial liability.

This is the central challenge that moves Explainable AI (XAI) from a technical curiosity to a core business necessity. The immense power of modern AI to find patterns in vast datasets often comes at the cost of transparency, creating an inscrutable “black box”. Sectors like Finance, Healthcare, and Insurance are highly regulated for a reason: decisions taken there can directly impact a person’s financial well-being, health outcomes, and security. In these sectors above all, AI opacity is no longer acceptable.

To harness the power of AI responsibly, organizations must be able to explain how and why their models reach a conclusion.

Overview

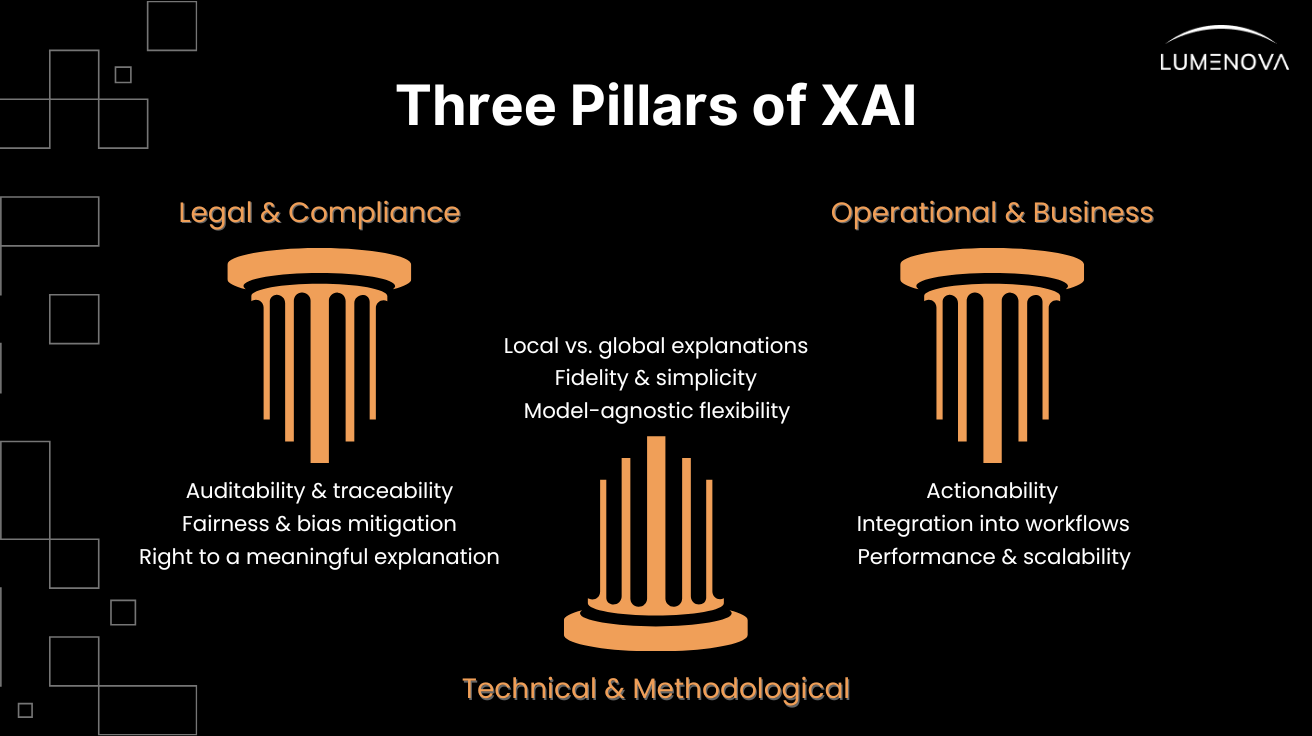

This article provides a comprehensive framework for leaders in finance, healthcare, and insurance to navigate the critical requirements of Explainable AI (XAI). As global regulations like the EU AI Act shift from theory to enforcement in 2025, the “black box” model has become an unacceptable liability. We address this challenge head-on by detailing what is truly required for compliance and trust, structuring our analysis around three core pillars.

First, we explore the non-negotiable legal and compliance demands, including auditable decision trails, robust fairness assessments, and the customer’s right to a meaningful explanation. Next, we delve into the technical and methodological necessities, clarifying key concepts like local vs. global explanations (LIME/SHAP) and the crucial balance between an explanation’s fidelity and its simplicity for the end-user. Finally, we cover the operational and business imperatives for making XAI work in the real world, focusing on scalability, seamless workflow integration, and ensuring that every explanation is actionable.

To conclude, we translate this framework into a practical, four-step roadmap for implementing a robust enterprise AI governance strategy. By mastering these requirements, organizations can move beyond defensiveness, transforming transparency into a powerful tool for building customer loyalty and securing a lasting competitive advantage.

Why Now? Between AI Power and Regulatory Scrutiny in Enterprise AI Governance

As we stand in mid-2025, the era of AI experimentation in regulated industries is definitively over. AI is now deeply embedded in core business processes, from credit scoring and claims processing to clinical diagnostics and fraud detection. This operational maturity has placed AI innovation on a direct collision course with the foundational duties of regulation: protecting consumers, ensuring fairness, and maintaining systemic stability.

In response, a global patchwork of rules has crystallized, demanding accountability from algorithms. The landmark EU AI Act, now in its implementation phase, sets a global precedent with its risk-based approach. It classifies many applications in finance, healthcare, and insurance as “high-risk,” imposing strict obligations for data governance, human oversight, robustness, and, critically, transparency.

This is not just a European phenomenon. In the United States, foundational laws are being actively applied to AI explainability. The Equal Credit Opportunity Act (ECOA) requires lenders to provide specific reasons for denying credit, a mandate that directly challenges black-box models. Similarly, the Health Insurance Portability and Accountability Act (HIPAA) governs the use of the sensitive patient data that fuels healthcare algorithms, creating a tight perimeter of privacy and security. The “right to a meaningful explanation” for automated decisions, first popularized by the GDPR, has become a global market expectation.

At the state level in the US, the most meaningful and comprehensive AI laws are found in California, Colorado, and Utah, which lead with broad regulations covering all three sectors (Healthcare, Finance, and Insurance). Nearly 30 states have adopted or are considering the NAIC’s model AI governance guidance for insurance, which focuses on non-discrimination and documentation. As a national trend, most other states are either in the early stages (task forces, working groups, proposed bills) or awaiting federal direction before establishing strict sectoral frameworks.

The takeaway? Even though this emerging regulatory landscape may seem as full of holes as Swiss cheese, or too unstable a foundation on which to build AI governance systems, this is precisely the moment to start building them. That is, if your organization wants to avoid the legal penalties for non-compliance, which are just around the corner.

The push for XAI isn’t, however, solely driven by the regulatory “stick”. There is also a powerful business “carrot”. Organizations are discovering that explainability is a competitive advantage. It builds profound trust with customers, reduces churn, and enhances brand reputation. Internally, it empowers employees to use AI tools confidently and allows data science teams to debug models, identify biases, and improve performance far more effectively. Avoiding fines is a baseline; building a trustworthy enterprise is the real prize.

The Three Pillars of XAI Requirements

Successfully implementing XAI in a regulated environment requires a holistic approach. It’s not just a data science problem; it’s a strategic challenge that rests on three distinct but interconnected pillars.

Pillar 1: Legal and compliance requirements

This forms the non-negotiable foundation. It’s about meeting the explicit and implicit demands of regulators and the law, and it comprises several important principles.

Auditability & traceability

You must be able to prove to an auditor how a specific decision was made, months or even years after the fact. This requires meticulous logging of model versions, input data, risk scores, and the explanations generated for each decision. Your system’s documentation must be able to answer questions like: “Show me exactly why Mr. Smith was denied a loan on October 15th last year.”
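
As a rough illustration of what such a log entry could contain, the sketch below stores one decision together with a hash of its contents. Every field name, the `DecisionRecord` class, and the in-memory list standing in for an append-only store are assumptions made for this example, not a prescribed schema.

```python
import hashlib
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass(frozen=True)
class DecisionRecord:
    """One logged decision. All field names are illustrative."""
    decision_id: str
    model_version: str
    inputs: dict
    risk_score: float
    outcome: str
    explanation: dict  # feature name -> contribution to the score
    timestamp: str


def fingerprint(record: DecisionRecord) -> str:
    """SHA-256 over a canonical JSON form, so later edits to the record are detectable."""
    canonical = json.dumps(asdict(record), sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def log_decision(record: DecisionRecord, log: list) -> str:
    """Append the record and its fingerprint to the log (a plain list in this sketch)."""
    digest = fingerprint(record)
    log.append({"record": asdict(record), "sha256": digest})
    return digest


audit_log = []
record = DecisionRecord(
    decision_id="loan-0001",
    model_version="credit-risk-2.3.1",
    inputs={"debt_to_income": 0.52, "credit_history_years": 1.5},
    risk_score=0.81,
    outcome="denied",
    explanation={"debt_to_income": 0.34, "credit_history_years": 0.21},
    timestamp=datetime(2024, 10, 15, 9, 30, tzinfo=timezone.utc).isoformat(),
)
digest = log_decision(record, audit_log)
```

Storing the model version and the explanation alongside the inputs is what lets an auditor replay the reasoning later; the hash only shows that the stored record has not been altered since it was written.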

Fairness & bias mitigation

Regulators demand that you prove your models are not discriminating against protected classes. This goes beyond intent; it’s about impact. XAI is essential for identifying which features are driving model behavior, ensuring they are not acting as proxies for sensitive attributes like race, gender, or age. You need the tools to measure fairness metrics (like disparate impact) and the explanations to investigate and remedy any biases found.
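
To make the disparate impact metric concrete, here is a minimal sketch that computes it as the ratio of approval rates between two groups. The group labels and sample counts are invented for illustration, and the 0.8 threshold reflects the commonly cited "four-fifths" rule of thumb rather than a universal legal test.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, approved) pairs. Returns group -> approval rate."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact_ratio(decisions, protected, reference):
    """Approval rate of the protected group divided by that of the reference group.

    Raises ZeroDivisionError if the reference group has no approvals.
    """
    rates = selection_rates(decisions)
    return rates[protected] / rates[reference]


# Invented sample: group A approved 60 of 100, group B approved 42 of 100.
decisions = (
    [("A", True)] * 60 + [("A", False)] * 40
    + [("B", True)] * 42 + [("B", False)] * 58
)
ratio = disparate_impact_ratio(decisions, protected="B", reference="A")
needs_review = ratio < 0.8  # rule-of-thumb threshold, an assumption of this sketch
```

A ratio well below 1 does not prove discrimination on its own; it flags where feature-level explanations should be examined for proxies of sensitive attributes.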

Right to a meaningful explanation

When a customer is subjected to an adverse automated decision, they have a right to know why. The explanation must be timely, clear, and presented in plain language that a non-expert can understand. A list of fifty variables with cryptic weightings is not meaningful; a concise summary like “Your application was denied due to a high debt-to-income ratio and a short credit history” is.
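
One way to get from raw feature attributions to a sentence like the one above is a fixed mapping from features to plain-language reasons. The sketch below assumes attributions are already available as signed numbers where positive values push toward denial; the `REASON_TEXT` table and the feature names are hypothetical.

```python
# Hypothetical mapping from model features to customer-facing wording.
REASON_TEXT = {
    "debt_to_income": "a high debt-to-income ratio",
    "credit_history_years": "a short credit history",
    "recent_delinquencies": "recent missed payments",
}


def adverse_action_summary(contributions, max_reasons=2):
    """Turn signed contributions (positive = toward denial) into one plain sentence."""
    adverse = [
        (feature, value)
        for feature, value in contributions.items()
        if value > 0 and feature in REASON_TEXT
    ]
    # Strongest drivers of the denial first.
    adverse.sort(key=lambda item: item[1], reverse=True)
    reasons = [REASON_TEXT[feature] for feature, _ in adverse[:max_reasons]]
    if not reasons:
        return "Your application was denied. Please contact us for details."
    return "Your application was denied due to " + " and ".join(reasons) + "."


summary = adverse_action_summary({
    "debt_to_income": 0.34,
    "credit_history_years": 0.21,
    "recent_delinquencies": 0.05,
    "income": -0.10,  # pushed toward approval, so it is not reported
})
```

Keeping the wording in a reviewed lookup table, rather than generating it freely, lets legal and compliance teams approve each phrase once.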

Pillar 2: Technical and methodological requirements

This pillar addresses the data science and engineering challenges of generating accurate and useful explanations.

Local vs. global explanations

It’s critical to distinguish between the two types of explanation. Local interpretability explains a single prediction (“Why was this specific claim flagged for review?”). Techniques like LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) are the industry standard here. Global interpretability, by contrast, explains a model’s overall behavior (“What are the top five factors our model uses to assess credit risk across all applicants?”). Simpler methods, such as feature importance rankings, are often used for this.
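
The difference can be sketched with a toy linear scoring model, for which the contribution of each feature relative to the average applicant is simply weight times deviation from the mean (this matches SHAP values for a linear model when features are treated as independent). The weights, feature names, and applicants below are made up; a real deployment would more likely rely on a library such as SHAP or LIME than on hand-rolled code.

```python
from statistics import mean

# Toy linear risk model: score = BIAS + sum(weight * value). All numbers are invented.
WEIGHTS = {"debt_to_income": 2.0, "credit_history_years": -0.1, "late_payments": 0.5}
BIAS = -1.0

applicants = [
    {"debt_to_income": 0.2, "credit_history_years": 10.0, "late_payments": 0},
    {"debt_to_income": 0.5, "credit_history_years": 2.0, "late_payments": 3},
    {"debt_to_income": 0.3, "credit_history_years": 6.0, "late_payments": 0},
]

# Reference point: the average applicant in the sample.
BASELINE = {f: mean(a[f] for a in applicants) for f in WEIGHTS}


def score(x):
    return BIAS + sum(WEIGHTS[f] * x[f] for f in WEIGHTS)


def local_explanation(x):
    """Local view: how much each feature moves THIS score away from the average score."""
    return {f: WEIGHTS[f] * (x[f] - BASELINE[f]) for f in WEIGHTS}


def global_importance(rows):
    """Global view: mean absolute local contribution of each feature across all rows."""
    return {f: mean(abs(local_explanation(r)[f]) for r in rows) for f in WEIGHTS}


why_this_applicant = local_explanation(applicants[1])
what_matters_overall = global_importance(applicants)
```

The local contributions for one applicant add up to the gap between that applicant's score and the average score, which is the additivity property that makes this style of explanation easy to audit.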

Fidelity and simplicity

An explanation must walk a fine line. It needs to have high fidelity, meaning it accurately reflects the complex logic of the underlying model. However, it must also have simplicity to be understood by its intended audience, whether that’s a customer, a compliance officer, or a medical professional. Often, this means creating different “views” of an explanation for different users.
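
Fidelity can be checked empirically. A simple approach, sketched below, is to measure how often a simplified surrogate explanation agrees with the underlying model on a sample of cases. Both decision functions and the sample rows are invented for illustration.

```python
def black_box(row):
    """Stand-in for a complex model: flags on high debt ratio OR many late payments."""
    return int(row["dti"] > 0.4 or row["late"] >= 3)


def surrogate(row):
    """Simplified explanation shown to users: only the debt ratio rule."""
    return int(row["dti"] > 0.4)


def fidelity(model, simple_model, samples):
    """Share of samples on which the simple explanation reproduces the model's output."""
    agree = sum(model(s) == simple_model(s) for s in samples)
    return agree / len(samples)


samples = [
    {"dti": 0.5, "late": 0},  # both flag
    {"dti": 0.2, "late": 0},  # neither flags
    {"dti": 0.3, "late": 4},  # only the black box flags
    {"dti": 0.6, "late": 5},  # both flag
    {"dti": 0.1, "late": 1},  # neither flags
]
agreement = fidelity(black_box, surrogate, samples)
```

Here the simple rule matches the model on four of five cases. Whether that level of agreement is acceptable depends on the audience: a customer-facing summary may tolerate it, while a compliance view probably needs the fuller rule.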

Model-agnostic flexibility

While some models are inherently simple (like logistic regression), high-performance models are often complex. Using model-agnostic XAI tools is often preferable because they can be applied to any underlying algorithm, giving your organization the flexibility to upgrade and change models without having to rebuild your entire explanation framework.
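
Permutation importance is one example of a model-agnostic technique: it only needs a prediction function, not access to the model's internals. The sketch below is a simplified, pure-Python version under invented data; the `black_box` function stands in for any model, and in practice a maintained implementation such as the one in scikit-learn would be the more likely choice.

```python
import random


def accuracy(predict, rows, labels):
    return sum(predict(r) == y for r, y in zip(rows, labels)) / len(rows)


def permutation_importance(predict, rows, labels, feature, n_repeats=20, seed=0):
    """Average drop in accuracy when one feature's values are shuffled across rows."""
    rng = random.Random(seed)
    baseline = accuracy(predict, rows, labels)
    drops = []
    for _ in range(n_repeats):
        shuffled = [r[feature] for r in rows]
        rng.shuffle(shuffled)
        # Copy each row with only the chosen feature replaced.
        permuted = [{**r, feature: v} for r, v in zip(rows, shuffled)]
        drops.append(baseline - accuracy(predict, permuted, labels))
    return sum(drops) / n_repeats


def black_box(row):
    """Any callable works here; this toy model ignores `zip_digit` entirely."""
    return int(row["debt_to_income"] > 0.4)


rows = [{"debt_to_income": d / 10, "zip_digit": d % 3} for d in range(10)]
labels = [black_box(r) for r in rows]

importances = {
    f: permutation_importance(black_box, rows, labels, f)
    for f in ("debt_to_income", "zip_digit")
}
```

Because the function only calls `predict`, swapping the toy model for a gradient-boosted ensemble or a neural network would not require changing the explanation code.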

Pillar 3: Operational and business requirements

This pillar is about making explainability work in the real world, at scale, within your existing business processes.

Actionability

An explanation is only valuable if it empowers a user to take action. For a customer, this might mean understanding what they need to change to get a loan approved in the future. For a loan officer, it might mean providing the justification needed to override a model’s recommendation. An explanation that doesn’t lead to a smarter decision is merely academic.
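
For the customer case, one common way to make an explanation actionable is a counterfactual: the smallest change to one input that would flip the decision. The sketch below does a simple step-by-step search against a made-up approval rule; the `approve` function, its coefficients, and the step size are all assumptions for illustration.

```python
def approve(applicant):
    """Hypothetical decision rule standing in for a real model."""
    score = 2.0 * applicant["debt_to_income"] - 0.1 * applicant["credit_history_years"]
    return score < 0.5


def smallest_change(applicant, feature, step, max_steps=100):
    """Move one feature in fixed steps until the decision flips to approval.

    Returns the first approving value of the feature, or None if no value
    within max_steps achieves approval.
    """
    candidate = dict(applicant)
    for n in range(max_steps + 1):
        candidate[feature] = applicant[feature] + n * step
        if approve(candidate):
            return candidate[feature]
    return None


applicant = {"debt_to_income": 0.52, "credit_history_years": 1.5}
target_dti = smallest_change(applicant, "debt_to_income", step=-0.01)
```

With these invented numbers the search lands on a debt-to-income ratio of about 0.32, which could be reported to the customer as "reduce your debt-to-income ratio from 52% to roughly 32%".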

Integration into workflows

Explanations cannot live in a data scientist’s Jupyter notebook. They must be delivered seamlessly to the people who need them. This requires thoughtful UX design and technical integration via APIs, feeding explanations into user-friendly dashboards for compliance teams, pop-up windows in a CRM for customer service agents, or reports within a hospital’s electronic health record system.

Performance and scalability

In many regulated industries, decisions are made in real time and at massive scale. Your XAI system must be able to generate explanations with low latency, without grinding business processes to a halt. As a working target, generating an explanation should take no longer than the original prediction itself.

A Practical Roadmap: How to Implement XAI

Knowing what’s required is the first step. Here is a practical roadmap to get started.

Step 1: Conduct a risk assessment

Not all models carry the same risk. Use the principles of the EU AI Act as a guide. Classify your models (e.g., low, limited, high-risk) based on their potential impact on individuals. A model that recommends marketing content needs far less scrutiny than one that influences clinical treatment plans. This allows you to focus your XAI efforts where they matter most.
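
A first-pass triage of a model inventory could look like the sketch below. The tier rules, domain list, and field names are purely illustrative assumptions for internal prioritization; they are not the legal classification criteria of the EU AI Act, which would need review by counsel.

```python
from dataclasses import dataclass

# Illustrative list of domains where decisions directly affect individuals.
HIGH_IMPACT_DOMAINS = {"credit", "insurance_underwriting", "clinical"}


@dataclass
class ModelProfile:
    name: str
    domain: str
    interacts_with_individuals: bool


def risk_tier(model: ModelProfile) -> str:
    """Internal triage only: decides where XAI effort goes first."""
    if model.domain in HIGH_IMPACT_DOMAINS:
        return "high"
    if model.interacts_with_individuals:
        return "limited"
    return "low"


inventory = [
    ModelProfile("treatment-plan-ranker", "clinical", True),
    ModelProfile("support-chat-router", "customer_service", True),
    ModelProfile("marketing-content-recommender", "marketing", False),
]
tiers = {m.name: risk_tier(m) for m in inventory}
```

Even a crude rule set like this gives the governance team a documented, repeatable starting point that can be refined as legal guidance firms up.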

Step 2: Establish an enterprise AI governance framework

Create clear lines of ownership and accountability for every model. Define who is responsible for model performance, fairness, and explainability. Implement documentation standards like “Model Cards” that detail a model’s intended use, limitations, data sources, and fairness assessments. This framework is your primary defense in a regulatory audit.
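
A Model Card can be kept as structured data so that gaps are caught automatically. The sketch below covers the sections mentioned above plus an owner field; the class, field names, and sample values are assumptions for illustration rather than a standard schema.

```python
from dataclasses import dataclass, field, fields


@dataclass
class ModelCard:
    name: str
    owner: str  # the accountable person or team
    intended_use: str
    limitations: list = field(default_factory=list)
    data_sources: list = field(default_factory=list)
    fairness_assessments: list = field(default_factory=list)


def missing_sections(card: ModelCard) -> list:
    """Names of sections that are empty and would need completing before sign-off."""
    return [f.name for f in fields(card) if not getattr(card, f.name)]


card = ModelCard(
    name="credit-risk",
    owner="Retail Lending Analytics",
    intended_use="Pre-screening of consumer loan applications",
    limitations=["Not validated for small-business lending"],
    data_sources=["Internal loan book, 2019 to 2023"],
)
gaps = missing_sections(card)
```

A check like `missing_sections` could run in a release pipeline so that a model without a documented fairness assessment is flagged before it reaches production.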

Step 3: Choose the right tools and techniques

There is often a trade-off between a model’s predictive performance and its inherent interpretability. Decide on a case-by-case basis. Is a slightly less powerful but fully transparent model (like a decision tree) sufficient for the task? Or do you need a high-performance black box, requiring sophisticated post-hoc XAI tools like SHAP?

Step 4: Train your people

XAI is ultimately a human-centric discipline. You must invest in training your staff. Data scientists need to know how to build and validate explainable models. Compliance officers need to understand how to interpret fairness reports. And front-line employees need to be trained on how to communicate model-driven decisions to customers with clarity and empathy.

Conclusion: The Future is Transparent

In the high-stakes worlds of finance, healthcare, and insurance, Explainable AI is no longer an optional part of enterprise AI governance. It is a foundational requirement for innovation, a prerequisite for regulatory compliance, and a strategic imperative for building trust. Meeting this requirement demands a multi-disciplinary effort that unites legal, technical, and operational expertise under a single, coherent governance framework.

The journey doesn’t end with explainability. It’s a stepping stone toward the ultimate goal of true algorithmic accountability. By embracing transparency, organizations are not just defensively avoiding fines; they are proactively building a fairer, more robust, and trustworthy business for the future.

Navigating the complex world of AI compliance is a significant challenge, but you don’t have to tackle it alone. The Lumenova AI platform is built to help you navigate ever-evolving regulations and govern your models for optimal explainability and transparency, hassle-free. If you’re ready to transform your AI governance from a roadblock into a competitive advantage, schedule a personalized demo for your enterprise today.