February 25, 2025

Model Drift: Detecting, Preventing and Managing Model Drift

Contents

In Part I, we laid the groundwork: model drift is inevitable, but with the right detection tools, businesses can catch it early. Now, let’s tackle the next critical question: How do you stop drift from undermining your AI systems in the long run? For leaders, this phase is about fixing problems and building adaptive systems that thrive amid change.

Think of model drift as a symptom of a deeper challenge: static AI in a dynamic world. Markets shift, regulations evolve, and customer expectations transform overnight. Traditional “set-and-forget” approaches won’t cut it. Instead, forward-thinking organizations are adopting strategies like continuous learning, ensemble models, and ethical governance to stay ahead.

In this second piece, we’ll explore advanced tactics to combat drift, from retraining frameworks to futuristic tools like explainable AI. We’ll also dissect real-world examples and address hurdles like resource constraints and data quality. Whether you’re a CTO or a data team lead, these insights will help you turn drift management into a competitive edge.

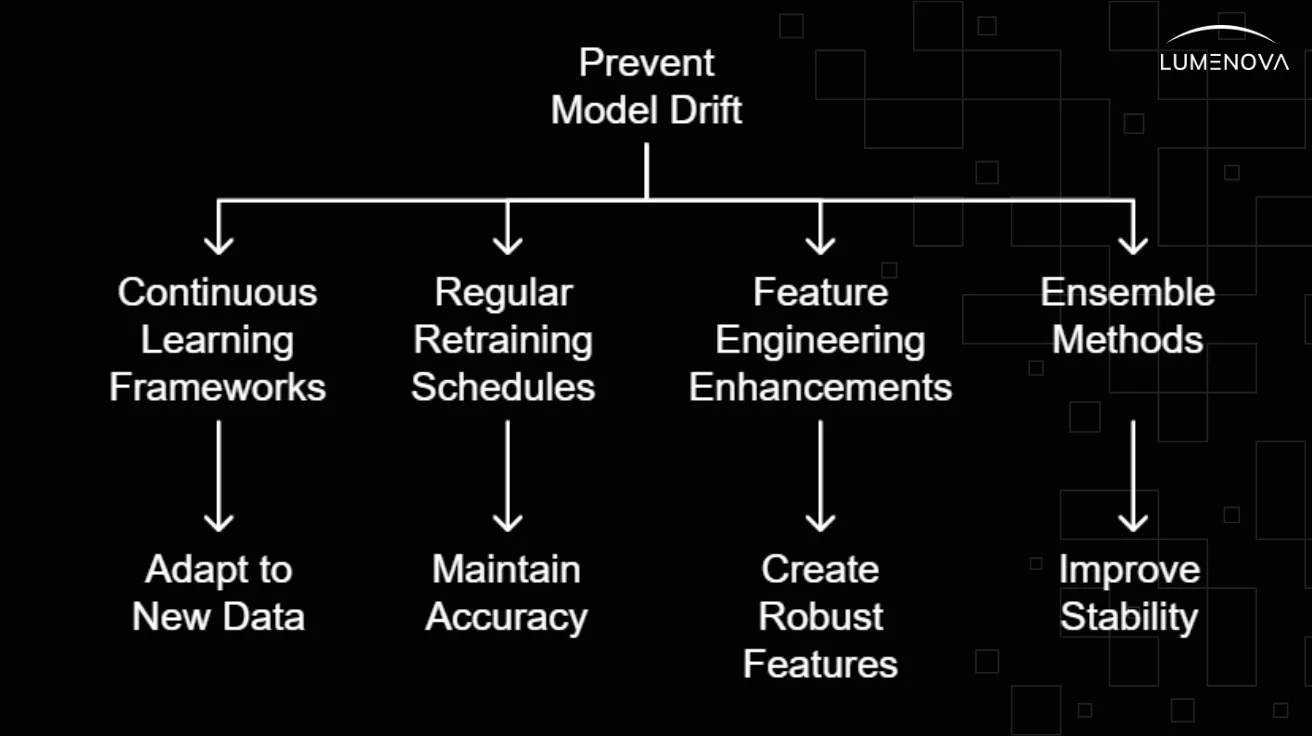

Strategies for Preventing Model Drift

Given the multifaceted nature of model drift, employing comprehensive strategies becomes imperative. Below are several approaches we recommend for sustaining optimal model performance:

1. Continuous Learning Frameworks

Adopting continuous learning frameworks enables models to adapt incrementally through exposure to fresh data continuously. Techniques include online learning algorithms capable of processing incoming samples sequentially rather than batch-wise, thereby reducing latency associated with periodic retraining sessions.

Example: Autonomous vehicles leveraging continuous learning to improve navigation capabilities as they encounter diverse driving conditions.

Benefits of Continuous Learning:

- Reduces downtime required for retraining.

- Ensures models stay aligned with current realities.

- Enhances adaptability to dynamic environments.

2. Regular Retraining Schedules

Establishing regular intervals for retraining ensures alignment with contemporary realities. Determining appropriate frequency depends on specific application domains; some environments demand frequent recalibrations, whereas others remain stable enough for less frequent updates.

Example: Email spam filters requiring constant updates to keep pace with evolving phishing techniques.

Considerations for Retraining:

- Balance computational costs with model accuracy.

- Use incremental updates to minimize disruption.

- Incorporate feedback loops for continuous improvement.

3. Feature Engineering Enhancements

Refining feature engineering practices contributes positively toward resilience against drift. Incorporating domain knowledge during preprocessing stages aids in creating robust features less susceptible to environmental perturbations. Additionally, dimensionality reduction techniques help eliminate noise-prone attributes prone to erratic behavior over time.

Example: Financial institutions enhancing fraud detection models by integrating external macroeconomic variables known to influence transaction patterns.

Feature Engineering Techniques:

- Principal Component Analysis (PCA): Reduces dimensionality while preserving variance.

- Domain-Specific Features: Leverages expert knowledge to create meaningful representations.

- Time-Series Features: Captures temporal dependencies in sequential data.

4. Ensemble Methods

Utilizing ensemble methods combines multiple base estimators to improve generalization capability. Such architectures tend to exhibit greater stability compared to single-model counterparts because individual weaknesses get compensated collectively.

Example: Stacking classifiers trained on different subsets of data to yield superior results compared to relying solely on a single model.

Ensemble Techniques:

- Bagging: Reduces variance by averaging predictions from multiple models.

- Boosting: Focuses on difficult-to-predict instances iteratively.

- Stacking: Combines predictions from multiple models using a meta-classifier.

Case Studies Illustrating Model Drift Prevention

Examining real-world examples reinforces theoretical concepts discussed above. Consider the following scenarios demonstrating successful implementation of anti-drift measures:

a. Retail Sector Example

An e-commerce giant faced challenges maintaining accurate inventory forecasts amidst fluctuating customer demands caused by seasonal sales events. By implementing automated retraining schedules aligned with promotional calendars alongside enhanced feature selection incorporating external macroeconomic variables, they managed to restore desired accuracy levels.

Lessons Learned:

- Align retraining schedules with business cycles.

- Incorporate external factors influencing demand.

- Monitor performance closely during peak periods.

b. Healthcare Domain Application

A hospital network sought to enhance sepsis prediction accuracy using electronic health records. They adopted a hybrid approach combining traditional supervised learning techniques with unsupervised anomaly detection algorithms to capture latent patterns indicative of impending concept drift, subsequently refining their primary classifier periodically based on identified discrepancies.

Key Takeaways:

- Combine supervised and unsupervised methods for comprehensive coverage.

- Regularly validate models against clinical benchmarks.

- Collaborate with domain experts to refine feature sets.

Advanced Techniques for Addressing Model Drift

While the strategies outlined above form the foundation of drift prevention, there are additional advanced techniques worth exploring:

1. Transfer Learning

Transfer learning involves leveraging pre-trained models to adapt quickly to new tasks or datasets. By fine-tuning existing models instead of building them from scratch, organizations can save time and resources while maintaining accuracy.

Example: A pharmaceutical company using a pre-trained drug efficacy model to predict outcomes for newly developed compounds.

Advantages of Transfer Learning:

- Accelerates development timelines.

- Improves generalization across domains.

- Reduces data requirements for new tasks.

2. Domain Adaptation

Domain adaptation focuses on transferring knowledge from one domain to another, allowing models to generalize better across different contexts.

Example: A speech recognition model trained on formal language being adapted to recognize colloquial speech patterns.

Challenges in Domain Adaptation:

- Identifying relevant transferable features.

- Handling domain-specific noise and biases.

- Evaluating performance in target domains.

3. Active Learning

Active learning prioritizes labeling the most informative samples, reducing the amount of labeled data required for training while improving model performance.

Example: A fraud detection system selecting transactions with ambiguous patterns for human review, thereby enhancing its ability to detect novel threats.

Benefits of Active Learning:

- Optimizes labeling efforts for maximum impact.

- Reduces dependency on large annotated datasets.

- Improves model robustness to edge cases.

Challenges in Managing Model Drift

Despite the availability of tools and techniques, managing model drift comes with its own set of challenges:

1. Resource Constraints

Implementing continuous learning frameworks or regular retraining schedules requires significant computational resources, which may not always be feasible for smaller organizations.

Mitigation Strategies:

- Leverage cloud-based infrastructure for scalable computing.

- Optimize model architectures for efficiency.

- Prioritize critical use cases for resource allocation.

2. Data Quality Issues

Poor-quality data, including missing values, outliers, or imbalanced classes, can exacerbate drift and lead to inaccurate predictions.

Data Quality Best Practices:

- Implement rigorous data cleaning pipelines.

- Monitor data quality metrics regularly.

- Incorporate synthetic data generation for rare cases.

3. Ethical Considerations

Ensuring fairness and transparency in model updates is imperative, especially in sensitive domains like healthcare or finance, where biased predictions can have serious consequences.

Ethical Guidelines for Model Updates:

- Conduct bias audits during retraining.

- Document changes and rationale clearly.

- Engage stakeholders in validation processes.

Best Practices for Long-Term Success

To ensure long-term success in managing model drift, consider adopting the following best practices:

- Establish Clear Monitoring Protocols: Define key performance indicators (KPIs) and set up automated systems for tracking them.

- Invest in Model and Data Governance: Implement robust data management practices to ensure high-quality, representative datasets.

- Foster Cross-Functional Collaboration: Encourage collaboration between data scientists, engineers, and business stakeholders to align technical solutions with organizational goals.

- Stay Updated on Industry Trends: Continuously educate yourself and your team about emerging technologies and methodologies in the field of AI and ML.

How AI Regulations Shape Model Drift Management

As governments and regulatory bodies introduce stricter guidelines for AI, model drift management is becoming a compliance issue rather than just a technical challenge. Emerging regulations such as the EU AI Act and the U.S. AI Bill of Rights emphasize the need for continuous monitoring, explainability, and accountability in AI systems.

In industries such as finance and healthcare, where AI models are used for risk assessment and patient care, respectively, regulators are mandating regular audits to ensure models remain fair, unbiased, and accurate across diverse populations. Neglecting model drift can lead to compliance violations, legal risks, and reputational damage.

To stay ahead, organizations should integrate model monitoring into their AI governance frameworks. This includes keeping detailed documentation of model updates, conducting regular audits, and ensuring transparency in decision-making processes.

As AI regulations continue to evolve, companies that take a proactive approach to drift management will be better positioned to meet compliance standards while maintaining trust with stakeholders. Here at Lumenova AI, we help businesses implement robust monitoring strategies, ensuring their AI systems remain accurate, transparent, and compliant with evolving regulations.

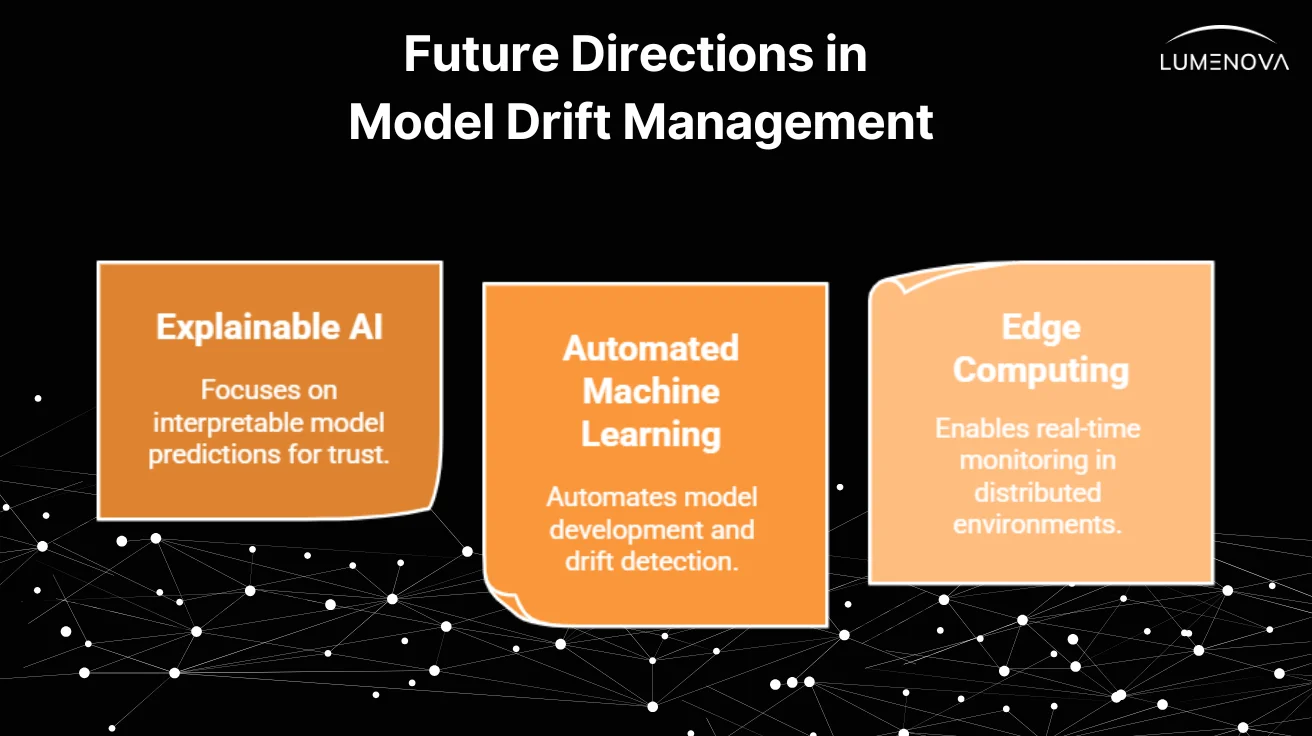

Future Directions in Model Drift Management

As AI and ML continue to evolve, new techniques and tools will emerge to address model drift more effectively.

Some promising directions include:

1. Explainable AI (XAI)

Explainable AI focuses on making model predictions interpretable, enabling stakeholders to understand and trust the reasoning behind decisions. This can help identify and address drift more transparently.

2. Automated Machine Learning (AutoML)

AutoML platforms automate many aspects of model development, including hyperparameter tuning, feature engineering, and drift detection. These tools can streamline the process of maintaining models over time.

3. Edge Computing

Edge computing brings computation closer to data sources, reducing latency and enabling real-time monitoring of models in distributed environments.

Conclusion

Managing model drift isn’t a one-time project; it’s a cultural shift. By embedding practices like regular retraining, cross-functional collaboration, and ethical audits, businesses transform drift from a threat into an opportunity for innovation. Case studies show that companies embracing continuous learning frameworks or transfer learning don’t just maintain accuracy but also unlock new efficiencies and markets.

The future of AI governance lies in transparency and adaptability. Tools like AutoML and edge computing will soon make drift management faster and more intuitive. For leaders, the takeaway is clear: invest in proactive strategies today or risk playing catch-up tomorrow. As stewards of AI, our goal is to prevent drift and build systems that evolve as boldly as the world around them.

Gain valuable insights into AI governance and risk management by exploring our blog. By contrast, if you’re interested in exploring lesser-known territory, consider checking out our AI experiments, where we evaluate frontier AI capabilities via complex prompting. If you’re looking to enhance your AI strategy with proactive monitoring, book a demo today to see how Lumenova AI can support your model drift prevention strategy.